Ready to learn Data Science? Browse courses like Data Science Training and Certification developed by industry thought leaders and Experfy in Harvard Innovation Lab.

Encoding my workflow saves time and effort over the long-run, but it also requires me to be explicit about what my workflow is in the first place.

I think I’m like most data scientists in that there are certain things I tend to do repeatedly across projects: there are certain patterns I check for, or certain things I plot, or certain relationships I try to understand. Sometimes it’s because I’ve made a mistake often enough that I find it pays to automatically guard against it. Sometimes it’s because I just find that, personally, certain ways of looking at a problem help me wrap my mind around it. In any case, large parts of my workflow for any given project very often look very similar to large parts of my workflows for previous projects. Not surprisingly, I’ve found it useful to reduce the overhead of these repetitive activities.

While I’ve understood the value of reducing overhead through automation ever since I learned how to code, I never got much advice on how to do it. It’s just something I picked up. In this post, I try to summarize some of the things I’ve learned, and I’ll use some code from a recently-automated part of my workflow as an example.

Logical beauty

Good design advice has been around at least since Vitruvius wrote Ten Books on Architecture (still a worthwhile read), where he said that good design achieves firmness, usefulness, and delight. It’s relatively easy to translate the first two of those ideals to workflow automation design: whatever we build, it should consistently do the job we want it to do at the scale, speed, and quality that we need. But I think it’s the last ideal — delight — that most often makes the difference between a workable design and a good design. Fred Brooks argues that delight, in the context of technical design, should be thought of as “logical beauty”, and argues that a logically beautiful product is one that minimizes mental effort.

I think minimization of mental effort is an interesting goal for a data science workflow, since many recent embarrassments in the data industry seem to have arisen from practitioners not expending enough mental effort thinking about the potential consequences of their design decisions. So we need to carefully select which areas of mental effort to minimize. That means the first step of a good design is, no matter how trite it sounds, to decide what we want to accomplish.

Think about driving a car. I drive a car to go from where I’m going as efficiently as possible. A well-designed car should allow me to reserve as much of my attention as possible for those things most directly related to accomplishing my purpose. That means minimizing the mental effort on everything else. In other words, while I’m driving, I should be able to focus on making my turn at the right place, on avoiding things like pedestrians or lampposts, and things like that. I should not be focused on making sure the engine runs, or on shifting gears, or on how the brakes work. If I used the car for another purpose I might focus on those things, but they are not proper to the accomplishment of the particular purpose I have chosen. A good design shouldn’t distract me or prevent me from paying attention to the things that could prevent from from accomplishing my purpose.

Propriety is a somewhat old-fashioned way of talking about relevance. Brooks defines propriety in design as “do not introduce what is immaterial.” I’ve found it useful to think about propriety in terms of the tradeoffs I used in the analogy. If doing something ensures that I am not prevented from accomplishing my purpose, it is proper to that purpose. If doing something just facilitates the the accomplishment of the purpose, it’s not proper to that purpose. Failing to avoiding pedestrians or lampposts will necessarily prevent me from getting where I want to go. That is true if I travel by automobile, foot, skateboard, bike, airplane, or train. Failing to have a combustion engine won’t necessarily prevent me from getting where I want to go; therefore, it’s not proper to the design, no matter how convenient it is a matter of implementation. So after we’ve defined our purpose, define what’s proper to that purpose: as a rule of thumb, if it can move us forward, it may or may not be proper; if it can keep us from moving forward, it’s proper. Good design minimizes the mental effort expended on tasks that are not proper to our intended purpose.

Orthogonality is another component of logical beauty. Brooks summarizes this as “do not link what is independent”. In other words: my ability to avoid pedestrians and lamp posts partially depends upon being able to see those things, and partially upon being able to maneuver the car to avoid them. A windshield’s functionality and a steering wheel’s functionality should be independent of one another. A design that failed to maintain that independence would be a poor design. So after we define the proper components of our workflow, we minimize dependencies. Good design keeps proper components separate from components that aren’t proper so we don’t accidentally minimize the mental effort we expend on the the things that actually need our full attention. Likewise, good design keeps non-proper components from each other so we don’t accidentally minimize mental effort in ways we didn’t anticipate.

Generality is the last principle I’ll talk about before moving on to the example. Brooks summarizes it as “do not restrict what is inherent.” In other words, a tire (or engine or wiring, etc.) that fits one car shouldn’t be designed to fit onlythat car without a very good reason. Good design demands a reason before restricting the use cases to which a piece of functionality can apply. This makes it easier to accommodate unanticipated needs without requiring us to rebuild the whole product.

So, to summarize:

- Decide what purpose we want to accomplish.

- Identify which tasks are proper to the accomplishment of that purpose.

- Minimize dependencies by separating proper tasks from non-proper tasks, and non-proper tasks from one another.

- Plan each task to be as general-purpose as possible while still maintaining propriety or orthogonality.

Designs that follow the above principles tend to be logically beautiful, in that they minimize our mental effort in all the right places.

A minimalist example of workflow design

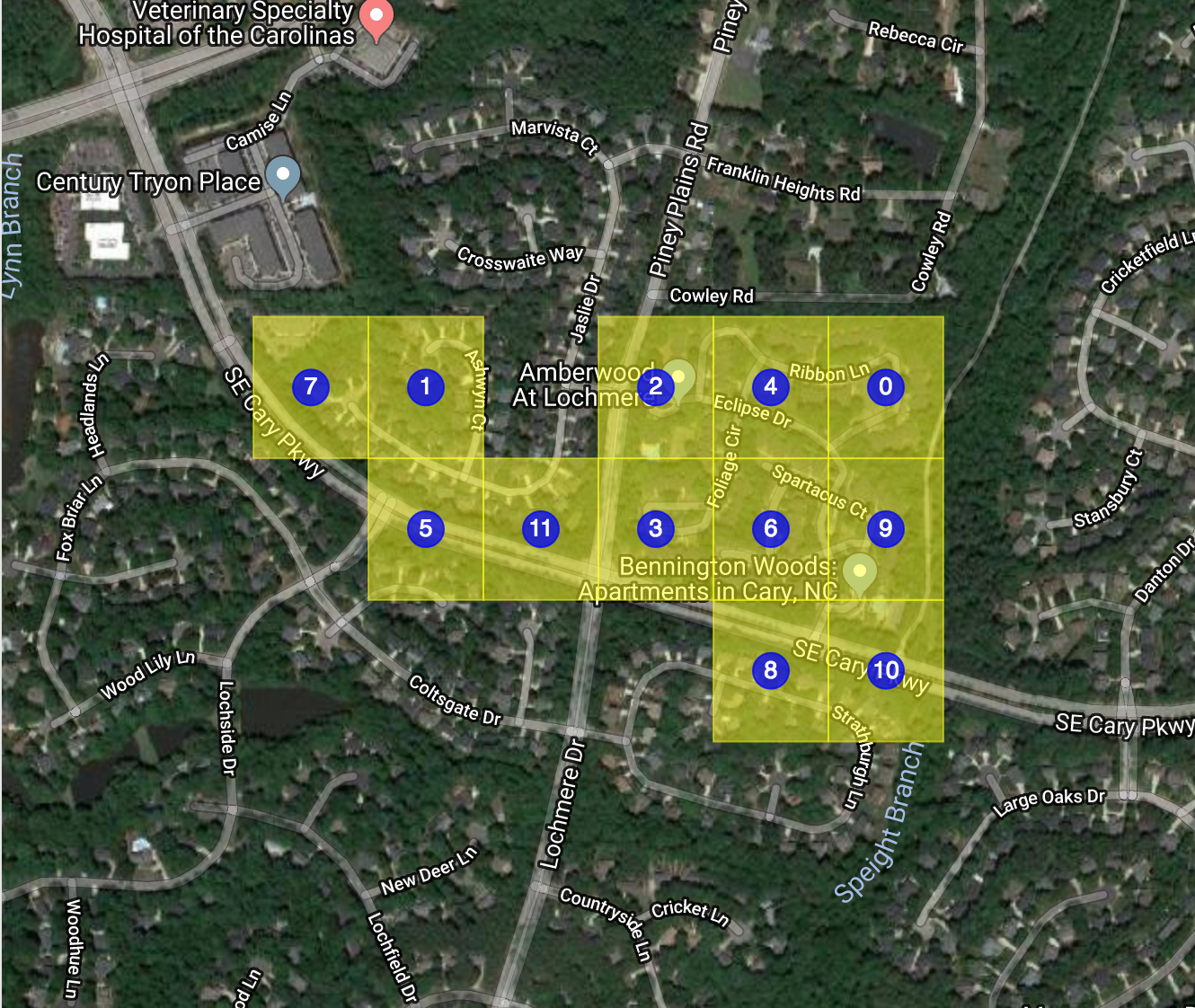

I work a lot with geolocation data, and I often have the need to plot that data. Sometimes I need to plot shapes, and other times I need points. Sometimes I need to see those objects against the background of a satellite image to get some rough context, sometimes I need the more detailed context of roads or labels. I normally have to use some form of this workflow several times a week. Sometimes I have to go through it hundreds of times a day. At any rate, I have to do it often enough that it’s a good candidate for minimizing mental effort. So I only have one purpose: graphically represent longitude and latitude pairs on a picture that represents the real-world context of those coordinates. That purpose defines propriety: anything that doesn’t actually put shapes on the picture is not proper to my purpose, and therefore mental effort expended on that thing should be minimized. In the case of the current example, defining purpose and propriety were relatively easy. That’s not always the case.

Now I need to worry about orthogonality. Imagine speaking to someone with absolutely no technical expertise. I usually picture either one of my earliest managers described everything involving code as “new-fangled”, or my mother who still refuses to get an email address, or a college freshman majoring in the humanities. You get the picture. My task creates a set of questions that my imaginary novice would see as being both necessary and sufficient to create my desired outcome. If my imaginary novice can say “but why do you need the answer to X?”, that means I’ve included an unnecessary question. If my imaginary novice can say “ but I don’t understand how the answers to X, Y, and Z will lead to the outcome”, that means I’m either missing a question or haven’t asked the right question. The reason I like to use an imaginary novice as a sounding board is because I tend to get too technical too fast when I try to design everything. Because I know how to implement things, my brain jumps to implementation questions sooner than it should. If you have a real flesh-and-blood novice who is willing and able to be your sounding board, use that person instead of an imaginary one.

If my goal is to get shapes on a picture, then I have only three questions:

- Where do I get the shapes?

- Where do I get the picture?

- How do I get the shapes on the picture?

Pretty basic questions, right? But these basic questions force me to think in terms of foundational building blocks, which makes it easier to preserve orthogonality. If whatever I eventually build operates in a way that I can’t change how I get shapes without changing how I put those shapes on a picture, then I’ve probably built the thing wrong. The different pieces of functionality should be as independent as possible.

Now that I have orthogonal components, I want to make them as general as possible. For that, I need use cases. I’m already biased with preferred ways of doing of the three things I need to do, but I’ll be better off in the long run if I can think of as many ways — preferred or not — as I can. I might not implement support for all the use cases I list: that will depend on the constraints of the project (mostly how much time, how many resources, and how many competing priorities I have). It’s still best to know as many use cases as possible up front — often, in the course of implementing support for critical use cases, it’s pretty trivial to support additional, as-yet-not-needed use cases simply as a matter of course. This can reduce frustration and wasted time down the road. Here are a bunch of use-cases for the current example:

Where do I get the shapes?

Where do I get the picture?

How do I get the shapes on the picture?

- Bokeh

- GeoViews/HoloViews

- Matplotlib

- Leaflet.js (via Folium)

The list isn’t exhaustive, of course. If I had more time to think about it, I could build out a more complete list, and would probably be better off for it. But as I have limited time and other things to do, I’m content that I have at least two answers for every question. All I’m really trying to do is avoid a myopic focus on only my immediate needs.

From design to implementation

You can jump to the bottom of this section to see the full code I implemented, but it might be helpful to read through this discussion first to understand what I did.

I chose to rely entirely on the Bokeh library to plot the shapes on the pictures because I already know and like that library — it was easy to get something up and running quickly. Likewise, I chose to rely entirely on Google Maps to get the picture because it provides a single source for satellite images, maps, and annotated versions of both of those two things, and because Bokeh already offers a convenient way to call that Google Maps API.

I did very little to minimize my mental effort for those parts of my workflow, because crafting the look and feel of my visualization — choosing the background image, deciding what shapes to plot, and styling and annotating those shapes — is all proper to my purpose, so I want to maintain as much granular control over those things as possible.

The Python class I implemented has a `prepare_plot` method, which for the most part just takes optional keyword arguments that get passed to a Bokeh `GMapPlot` object that the method instantiates:

The class also has an `add_layer` method for placing shapes on the picture:

This method call has only two required arguments. One is a label that tells the method what data source the shape should reference (again, more on that later). The other is a Bokeh model — the two models I use most often are `Patches`, for plotting polygons, and `Circle`, for plotting coordinate pairs. There is an optional `tooltips` argument that takes either a string or a list of tuples to create tooltips that should appear when a mouse hovers over a shape. All other keyword argument are passed to the Bokeh model, which gives me granular control over how the shape looks. Most Bokeh models allow both the border and the area of a shape to be styled differently. For example, if I wanted a red dot with a black border, I would set `fill_color` to red and `line_color` to black. Likewise, if I wanted the fill to be 50% transparent but the border to be 0% transparent, I would set `fill_alpha` to 0.5 and `line_alpha` to 1.0. Often, I want fill and line to be styled the same way, so the `add_layer` method accepts additional keywords. If I set `alpha` to 0.5, then both `fill_alpha` and `line_alpha` will be set to 0.5. It’s a small reduction in mental effort, but it’s enough of a reduction to be convenient.

The majority of the mental-effort-reduction work I did, however, had to do with preparing the data for plotting. All four of the use cases I mentioned for getting shapes — longitude/latitude pairs, well-known text, shapely objects, and geohashes — are use case that I regularly encounter in my daily work. I found that a lot of my time investigating data wasn’t spent on actually trying to understand the plot, but rather on getting the data into the format I needed. I especially incurred a lot of overhead when I had to switch data source types part way through an analysis (for example, I may have set up a workflow to plot geo-coordinate pairs, and then suddenly found I needed to plot geohashes; that required me to restructure part of my workflow).

The `add source` method requires data and a label:

The data can be a list containing any one of the four types of input values I’ve mentioned, or it can be a dataframe where one column is comprised of those input values (and that column must be explicitly specified). This method performs four basic functions:

- It calls the `_process_input_value`, which automatically validates the input type (geohash vs. coordinate pair, etc.) and formats it into a list of x coordinates and a list of y coordinates. In the case of well-known text or a shapely object representing a MultiPolygon or some other collection of multiple geometries, it will format a list of lists. The `_process_input_value` method serves as a switchboard, completely eliminating the overhead of appropriately formatting input values, assuming the values are one of the supported types.

- It appends metadata to the input values. In the case of a DataFrame input, each column of the DataFrame that does not contain the input values will be used as metadata. In the case of a list input, any keyword arguments will be used as metadata (assuming the argument is a list of the same length as the input value list.

- It expands the source DataFrame to accomodate multi-shapes. If an input value is something like a MultiPolygon, it will split that out into individual polygons, and append the MultiPolygon’s metadata to each individual polygon. This is similar to the concept of exploding an array in SQL.

- After all of the above processing, it calls `_set_coordinate_bounds`, which updates the plot bounds (minimum and maximum x and y values). They bounds are referenced when determining the Google Maps zoom level.

The class does a bunch of other things, but for the most part, the structure of the class itself is nothing more than an instantiation of the three key parts of my workflow: get shapes, get a picture, put the shapes on the picture. Because I codified that workflow using a few sound design principles (purpose → propriety → orthogonality → generality), it’s relatively easy to extend the class to accommodate new needs. For example, I’d like to expand the class to use WMTS tiles instead of Google Maps. Because of the way the class is designed, I can do that more or less with changes only to the `prepare_plot` method, and nothing else. If I wanted to render the plot using Leaflet or Matplotlib or something else, that would require some more extensive renovation, but still the effects wouldn’t ripple through the whole codebase.

The biggest benefit, however, is less a matter of how I wrote the code and more the fact that I wrote any code at all. Yes, encoding my workflow saves time and effort over the long-run, but it also requires me to be explicit about what my workflow is in the first place. In writing this code, I identified several things about my workflow that really made me unhappy. For example, I hated how much time it took to manage the data when I wanted to plot polygons instead of points (or points instead of polygons), so I changed `add_source` method to always assume polygons and the `add_layer` method to automatically calculate a centroid and update the data source whenever a Bokeh model is used that assumes point data but references a data sources that contains polygon data. I had baked assumptions about the polygon vs. point structure of my data into my workflow without realizing it. By codifying my workflow, I recognized that assumption, decided I didn’t like it, and removed it.

Codifying my workflow makes my workflow better, and making my workflow better makes me a better coder. And in explicitly thinking about the design of both the code and the workflow, I’ve made it easier to repurpose old work for new problems, and to share my work with colleagues.

The code

I suppose it should go without saying that this code isn’t meant to be a perfect representation of the design principles I outlined earlier. Design is a direction, not a destination. I’m painfully aware of how much the code could stand to be improved!