It is incredible how fast data processing tools and technologies are evolving, and the nature of the data engineering discipline is changing with them. The tools I use today are very different from those I used ten or even five years ago; however, many of the lessons I learned along the way are still relevant.

I started working in the data space long before data engineering became a thing and data scientist became the sexiest job of the 21st century. I ‘officially’ became a big data engineer six years ago, and I know firsthand the challenges that developers with a background in “traditional” data development face on this journey. Of course, this transition is not easy for software engineers either; it is just different.

Even though technologies keep changing (and that’s the reality for anyone working in the tech industry), some of the skills I had to learn are still relevant but are often overlooked by data developers who are just starting the transition to data engineering. These are usually skills that software developers take for granted.

In this post, I will talk about the evolution of data engineering and what skills “traditional” data developers might need to learn today (Hint: it is not Hadoop).

The birth of the data engineer.

Data teams before the Big Data craze were composed of business intelligence and ETL developers. Typical BI / ETL developer activities involved moving data sets from location A to location B (ETL) and building web-hosted dashboards with that data (BI). Specialised technologies existed for each of those activities, with the knowledge concentrated within the IT department. Beyond those specialised tools, however, BI and ETL development had very little to do with software engineering, a discipline that was maturing rapidly at the beginning of the century.

As data volumes grew and interest in data analytics increased over the past ten years, new technologies were invented. Some of them died and others became widely adopted, which in turn changed the skills in demand and the way teams were structured. As modern BI tools allowed analysts and business people to create dashboards with minimal support from IT teams, data engineering emerged as a new discipline, applying software engineering principles to ETL development using a new set of tools.

The challenges.

Creating a data pipeline may sound easy, but at big data scale it meant bringing together a dozen different technologies (or more!). A data engineer had to understand a myriad of technologies in depth, pick the right tool for the job and write code in Scala, Java or Python to create resilient and scalable solutions. A data engineer had to know their data to be able to create jobs that benefit from the power of distributed processing. A data engineer had to understand the infrastructure to be able to identify the reasons for failed jobs.

Conceptually, many of those data pipelines were typical ETL jobs — collecting data sets from a number of data sources, putting them in a centralised data store ready for analytics and transforming them for business intelligence or machine learning. However, “traditional” ETL developers didn’t have the necessary skills to perform these tasks in the Big Data world.
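Conceptually, such a job boils down to three steps: collect, store centrally, transform. The sketch below is a minimal, hypothetical illustration in Python: two in-memory lists stand in for separate source systems, an in-memory SQLite database (from Python’s standard library) stands in for the centralised data store, and the transformation is a SQL query that builds a reporting table. A real pipeline would read from APIs, files or databases and write to a proper data warehouse; all table and function names here are made up for the example.

```python
import sqlite3

# Hypothetical source data sets standing in for two separate systems.
ORDERS_SOURCE = [
    {"order_id": 1, "customer_id": 10, "amount": 25.0},
    {"order_id": 2, "customer_id": 11, "amount": 40.0},
    {"order_id": 3, "customer_id": 10, "amount": 15.0},
]
CUSTOMERS_SOURCE = [
    {"customer_id": 10, "country": "UK"},
    {"customer_id": 11, "country": "DE"},
]


def extract():
    """Collect the data sets from each source (here: plain Python lists)."""
    return ORDERS_SOURCE, CUSTOMERS_SOURCE


def load(conn, orders, customers):
    """Put the raw data into the central store, unchanged."""
    conn.execute(
        "CREATE TABLE raw_orders (order_id INTEGER, customer_id INTEGER, amount REAL)"
    )
    conn.execute("CREATE TABLE raw_customers (customer_id INTEGER, country TEXT)")
    conn.executemany(
        "INSERT INTO raw_orders VALUES (:order_id, :customer_id, :amount)", orders
    )
    conn.executemany(
        "INSERT INTO raw_customers VALUES (:customer_id, :country)", customers
    )


def transform(conn):
    """Build a reporting table for BI: total revenue per country."""
    conn.execute(
        """
        CREATE TABLE revenue_by_country AS
        SELECT c.country, SUM(o.amount) AS revenue
        FROM raw_orders AS o
        JOIN raw_customers AS c ON o.customer_id = c.customer_id
        GROUP BY c.country
        """
    )


def run_pipeline():
    """Run extract, load and transform against a fresh in-memory database."""
    conn = sqlite3.connect(":memory:")
    orders, customers = extract()
    load(conn, orders, customers)
    transform(conn)
    return conn


if __name__ == "__main__":
    conn = run_pipeline()
    query = "SELECT country, revenue FROM revenue_by_country ORDER BY country"
    for row in conn.execute(query):
        print(row)
```

Loading the raw data first and transforming it with SQL inside the store keeps the untouched source data available for reprocessing, which is one reason this ordering is popular on cloud data platforms.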

Is it still the case today?

I have reviewed many articles describing what skills data engineers should have. Most of them advise learning technologies like Hadoop, Spark, Kafka, Hive, HBase, Cassandra, MongoDB, Oozie, Flink, Zookeeper, and the list goes on.

While I agree that it won’t hurt to know these technologies, I find that in many cases today, in 2020, it is enough to “know about them”: what particular use cases they are designed to solve, where they should or shouldn’t be used and what the alternatives are. Rapidly evolving cloud technology has given rise to a huge range of cloud-native applications and services in recent years. In the same way as modern BI tools made data analysis more accessible to the wider business several years ago, the modern cloud-native data stack simplifies data ingestion and transformation tasks.

I do not think that technologies like Apache Spark will become any less popular in the next few years, as they are great for complex data transformations.

Still, the high rate of adoption of cloud data warehouses such as Snowflake and Google BigQuery indicates that they provide certain advantages. One of them is hiring: Spark requires highly specialised skills, whereas ETL solutions on top of cloud data platforms rely heavily on SQL, even for big data, and roles that mainly require SQL are much easier to fill.

What skills do data developers need to have?

BI / ETL developers usually possess a strong understanding of database fundamentals, data modelling and SQL. These skills are still valuable today and mostly transferable to the modern data stack, which is leaner and easier to learn than the Hadoop ecosystem.

Below are three areas where I often observe gaps in the knowledge of “traditional” data developers, because for a long time they didn’t have the tools and approaches that software engineers did. Closing those gaps will not take a lot of time, but it might make the transition to a new set of tools much smoother.

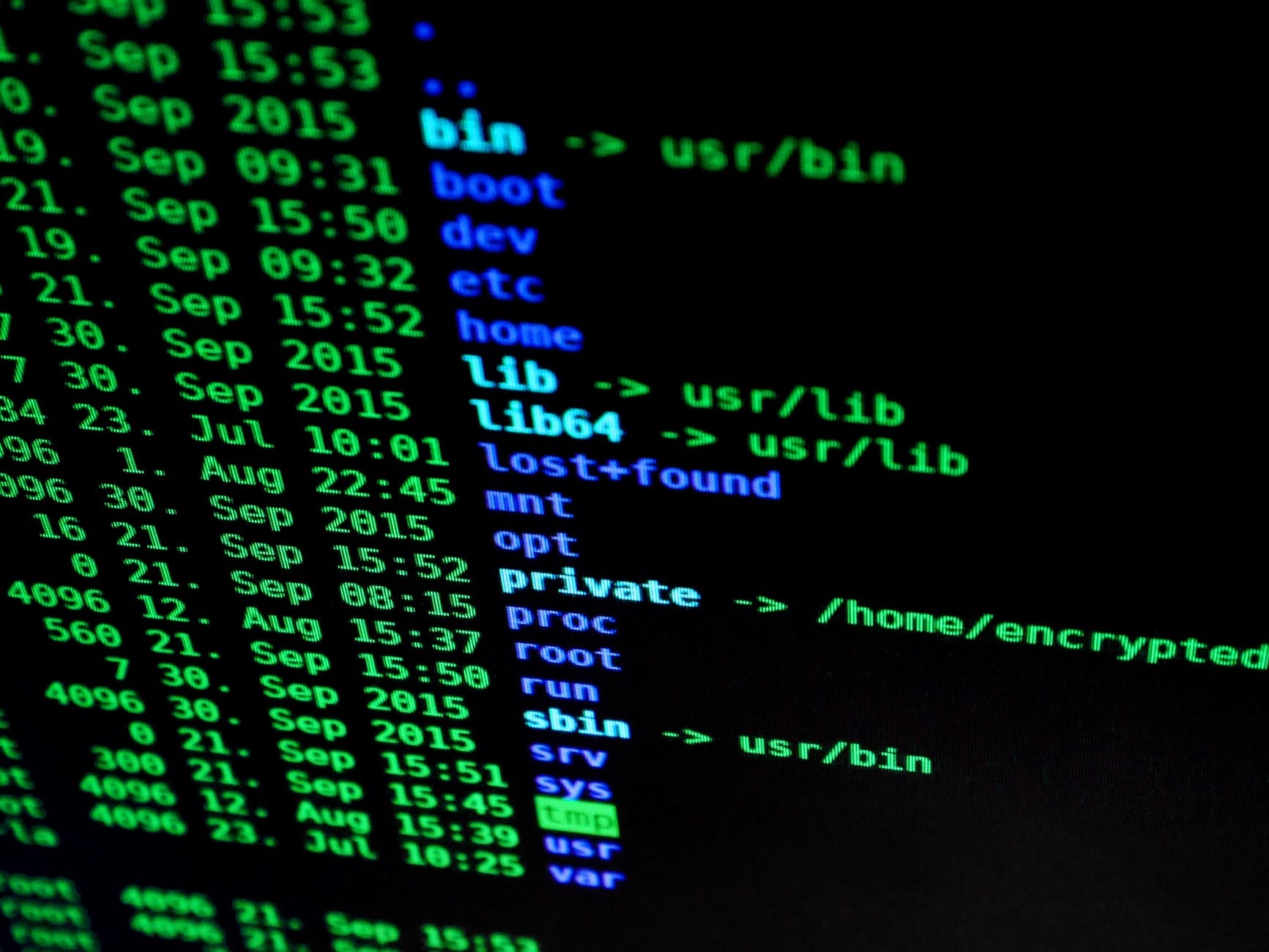

1. Version control (Git) and an understanding of CI/CD pipelines

SQL code is code, and as such, software engineering principles should be applied to it:

- It is important to know who changed the code, when and why

- Code should come with tests that can be run automatically

- Code should be easily deployable to different environments

I am a big fan of DBT, an open-source tool that brings software engineering best practices to the SQL world and simplifies all of these steps. It does much more than that, so I strongly advise checking it out.
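Even without a dedicated tool, SQL transformation logic can be covered by automated tests like any other code. The following is a minimal sketch using Python’s built-in `unittest` and an in-memory SQLite database; the `DEDUPLICATE_CUSTOMERS` query, the `customers` table and its columns are hypothetical. Saved as, say, `test_transformations.py`, it could be run with `python -m unittest test_transformations` on a developer’s machine or as a step in a CI pipeline on every commit.

```python
import sqlite3
import unittest

# Hypothetical transformation under test: keep only the latest record per customer.
DEDUPLICATE_CUSTOMERS = """
SELECT c.customer_id, c.email, c.updated_at
FROM customers AS c
JOIN (
    SELECT customer_id, MAX(updated_at) AS latest
    FROM customers
    GROUP BY customer_id
) AS l
  ON c.customer_id = l.customer_id AND c.updated_at = l.latest
ORDER BY c.customer_id
"""


class DeduplicateCustomersTest(unittest.TestCase):
    def setUp(self):
        # Fresh in-memory database with a small, known fixture for every test.
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute(
            "CREATE TABLE customers (customer_id INTEGER, email TEXT, updated_at TEXT)"
        )
        self.conn.executemany(
            "INSERT INTO customers VALUES (?, ?, ?)",
            [
                (1, "old@example.com", "2020-01-01"),
                (1, "new@example.com", "2020-03-01"),
                (2, "only@example.com", "2020-02-01"),
            ],
        )

    def tearDown(self):
        self.conn.close()

    def test_keeps_latest_record_per_customer(self):
        rows = self.conn.execute(DEDUPLICATE_CUSTOMERS).fetchall()
        self.assertEqual(
            rows,
            [
                (1, "new@example.com", "2020-03-01"),
                (2, "only@example.com", "2020-02-01"),
            ],
        )

    def test_one_row_per_customer(self):
        rows = self.conn.execute(DEDUPLICATE_CUSTOMERS).fetchall()
        ids = [row[0] for row in rows]
        self.assertEqual(len(ids), len(set(ids)))
```

The key design choice is that each test builds its own small, known data set, so a failure points at the query rather than at whatever happens to be in a shared database.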

2. Good understanding of the modern cloud data analytics stack

We tend to stick with the tools we know because they often make us more productive. However, many of the challenges we face are not unique, and today they can often be solved more efficiently.

Navigating the cloud ecosystem can be intimidating at first. One workaround is to learn from other companies’ experiences.

Many successful startups are very open about their data stack and the lessons they learnt on their journey. These days, it is common to adopt a cloud data warehouse along with several other components for data ingestion (such as Fivetran or Segment) and data visualisation. Studying a few such architectures is usually enough to get a 10,000-foot view and to know what to research further when needed; for example, dealing with events or streaming data might be an entirely new concept.

3. Knowing a programming language in addition to SQL

As much as I love Scala, Python seems to be a safe bet to start with today. It is reasonably easy to pick up, loved by data scientists and supported by pretty much all components of cloud ecosystems. SQL is great for many data transformations, but sometimes it is easier to parse a complex data structure with Python before ingesting it into a table, or to use Python to automate specific steps in the data pipeline.
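For example, a nested JSON payload that would be awkward to unpick in SQL can be flattened in a few lines of Python before it is loaded into a table. This is a minimal sketch assuming a hypothetical order event format, with an in-memory SQLite database standing in for the target table; `flatten_order` and `ingest` are illustrative names, not part of any library.

```python
import json
import sqlite3

# Hypothetical raw event: one order containing a nested list of line items.
RAW_EVENT = """
{
  "order_id": "A-100",
  "customer": {"id": 42, "country": "UK"},
  "items": [
    {"sku": "book", "qty": 2, "price": 7.5},
    {"sku": "pen", "qty": 1, "price": 1.2}
  ]
}
"""


def flatten_order(event):
    """Turn one nested order into one flat row per line item."""
    return [
        {
            "order_id": event["order_id"],
            "customer_id": event["customer"]["id"],
            "country": event["customer"].get("country"),
            "sku": item["sku"],
            "qty": item["qty"],
            "price": item["price"],
        }
        for item in event.get("items", [])
    ]


def ingest(conn, rows):
    """Load the flattened rows into a table that SQL users can query directly."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS order_items ("
        "order_id TEXT, customer_id INTEGER, country TEXT, "
        "sku TEXT, qty INTEGER, price REAL)"
    )
    conn.executemany(
        "INSERT INTO order_items VALUES "
        "(:order_id, :customer_id, :country, :sku, :qty, :price)",
        rows,
    )
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    ingest(conn, flatten_order(json.loads(RAW_EVENT)))
    print(conn.execute("SELECT sku, qty * price FROM order_items ORDER BY sku").fetchall())
```

Once the data is flat, the rest of the pipeline (aggregations, joins, data quality checks) can stay in SQL.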

This is not an exhaustive list, and different companies might require different skills, which brings me to my last point …

Data processing tools and technologies have evolved massively over the last few years. Many of them can now scale easily as data volumes grow while still working well with “small data”. That can significantly simplify both the data analytics stack and the skills required to use it.

Does this mean that the role of a data engineer is changing? I think so. That doesn’t mean it is getting easier: business demands grow as technology advances. However, the role may become more specialised or split into a few different disciplines.

New tools allow data engineers to focus on core data infrastructure, performance optimisation, custom data ingestion pipelines and overall pipeline orchestration. At the same time, the data transformation code in those pipelines can be owned by anyone who is comfortable with SQL. Analytics engineering, for example, is starting to emerge as a role: it sits at the intersection of data engineering and data analytics and focuses on data transformation and data quality. Cloud data warehouse engineering is another emerging specialisation.

Regardless of whether these distinct job titles become widely adopted, I believe that “traditional” data developers already possess many of the fundamental skills needed to succeed in data engineering activities today, strong SQL and data modelling among them. By understanding the modern cloud analytics data stack and how its components fit together, learning a programming language and getting used to version control, they can make this transition reasonably seamless.