This post is written for those readers with little or no biology, but having data science skills and who are interested in working on biological domain problems.

My goal is for you to understand how you can tackle this problem without reading a textbook or spending 20 hours studying on Khan Academy.

Keep in mind that while many problems are neatly pre-digested into training and testing data sets, this is not always the case. This article is very much about the ideas that help you before you get to that point.

The Checklist:

- Pick your task. Understand it

- Understanding the data that solves your problem (via the Data Types of Modern Biology)

- Contextualize your project to understand your goals.

- Lastly, how and why you should collaborate

Pick your Task. Understand it.

Biology is the study of living organisms. It’s huge. Live with it. Lucky for us though, your problem is much more specific.

It’s an interesting question whether this is a function of the human preference for categorisation but if you go to the Wikipedia list of unsolved problems in biology you will see every problem neatly laid out into different categories, like “biochemistry”, “neurophysiology” and “ecology”.

First things first. Don’t think you have to study everything up front, this is often quite unnecessary. Learn more as you need it. Your time is valuable.

The rest of this article is about how you break down that literature into useful insights that will help with your solution.

If you don’t already have a problem in mind, you could go here and pick a problem.

Learning general skills is best done in the context of an immediate and real problem for which specific answers can be found.

The Data Types of Modern Biology

It is often tempting to spend most of our time training and evaluating models. On any data science project however, there’s likely to be payoffs to thinking about your data sources and data generating processes.

In Biology, there are huge returns to understanding your data.

Comparing Raw, Annotated and Research Data

Raw data is new. It hasn’t been processed.

Take DNA sequences for example. These represent information containing molecules which provide the blue-print for all life. We represent them with ordered combinations of four letters “A”,”T”,”C” and “G”.

These letters are not raw data though. In reality, we deduce them via experimental techniques that involve bouncing off DNA molecules in solution and that process has some error. Thus the true raw data may in fact be the wave-like spectra created in that experiment.

So in reality, the “rawness” of data is very subjective but it can be valuable to ask about in many contexts.

We can contrast raw data with annotated data. This data has had some level of editing performed on it.

Elaborating on the previous example. We can translate DNA sequences to Protein sequences (made up of 20 different letters instead) using a dictionary-like key.

If you saw a protein sequence derived from a DNA sequence, it would be a form of annotation on the DNA sequence and not the same data as protein sequenced directly (which does result from other analytical processes).

Annotations, as in other areas, can become more detailed over time.

If we define research as the process of using data to evaluate hypotheses that might be supported, then research itself can be thought of as an extreme form of annotation.

This year, a call was made to annotate research papers that might be relevant to covid-19 using Natural Language Processing (NLP), to enable us to mine existing literature for clues to aid in the global coronavirus response.

The raw data for these projects then becomes the text inside journal articles and which itself describes other types of data. An example paper is here.

The use of data types as a framework and language for describing biological data can be incredibly powerful, and these details can make or break the interpretation and validity of your final model.

Observational vs Experimental

Considering where your data comes from will determine the type of analysis and the reach of your insights. In science, we do experiments. But so often, we also don’t.

How would Darwin have proven evolution without observational data (going out and observing similarities between animals)?

Conversely, are we to ignore natural experiments when they occur? (when nature randomly treats otherwise identical entities differently).

Experimental data originates from intentionally changing one or more variables and observing how the world changes. This data is often created with a particular analysis method in mind and you will need to understand the logic behind this if you are to analyze this type of data.

Experimental data is awesome because it can often facilitate causal inference. It often lends itself well to supervised learning tasks.

Observational data is much more common. This includes any data where we haven’t systematically varied any variables of interest.

Causal inference is much harder, if not impossible, with observational data. Darwin may have succeeded because of natural experiments (see hyperlink above).

Supervised learning still works for observational data, but the language surrounding model interpretation becomes treacherous territory. This causes much confusion in the public domain about whether X or Y is good for your health or not.

Global vs Local

This distinction compares data accessible publicly, to data collected by a specific group like a lab or a company. I suspect thinking about this distinction is useful beyond just biological applications.

Global data comprises a vast array of online databases about molecules, species, medical conditions and more that have been collected and shared publicly. These datasets are critical repositories for public research and can be awesome ways to enrich local datasets through linking.

Learning how to leverage global data resources may well be one of the most exciting opportunities for data scientists to work with large non-commercial datasets currently.

Local data, however, is data that only you or your organization has. It may or may not be structured in similar ways to other datasets or be produced using the same methods.

This data can be very valuable if you are trying to compete with others academically or in business but might be less valuable if you are trying to create a general solution that needs to be applicable in many contexts.

The danger with building models on local data is that your model might be very hard for other people to use appropriately unless they can reconstruct your data collection process.

A further note: Local data doesn’t become public data just because it’s available online, although the details probably matter here. Global data repositories usually create relationships between data points that mean that there is a process required to submit additions to these repositories.

Context is Key

The criteria by which a problem is solved changes for every application of data science, so too in Biological Sciences.

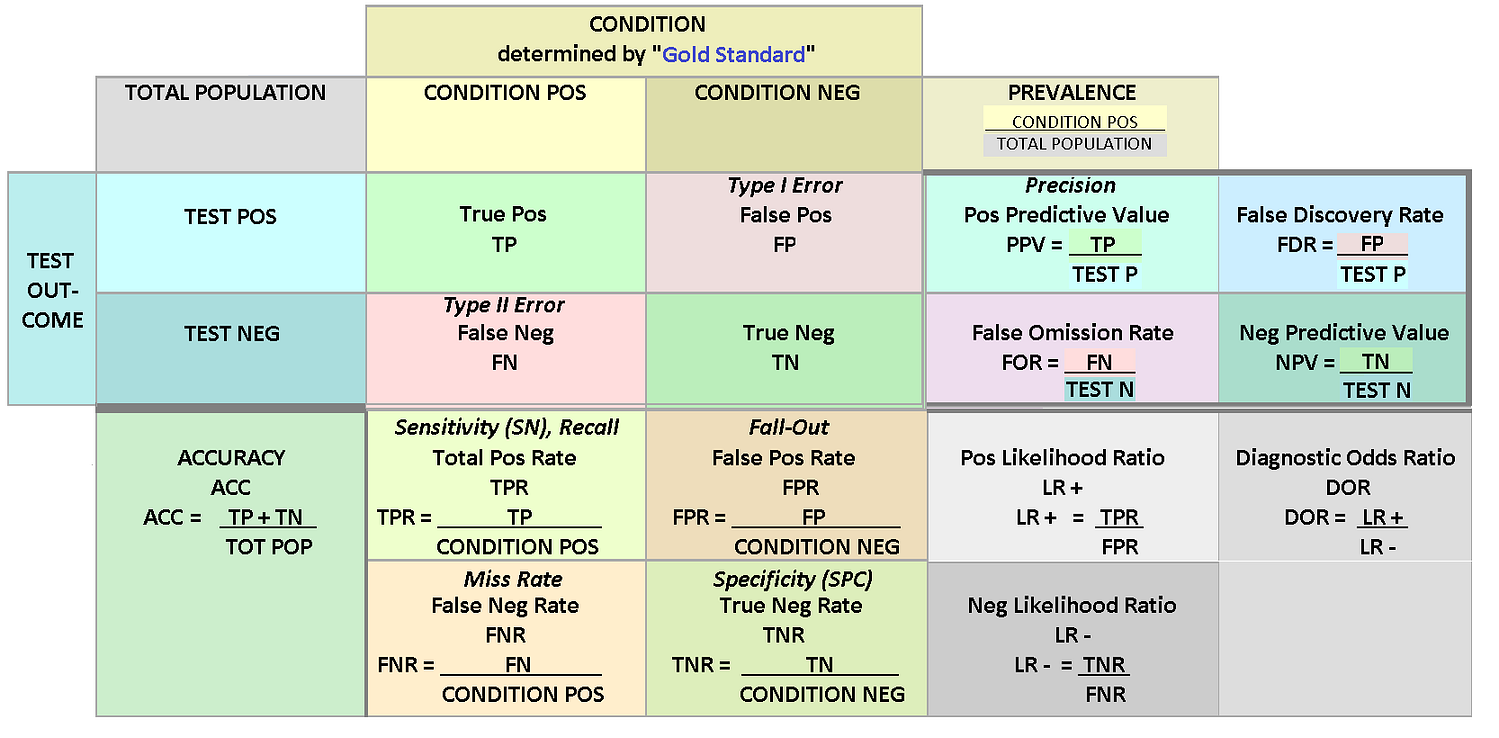

Evaluation Metricsare important considerations for any project, but the important metrics for biological projects will often be domain specific (hence why you should learn about the domain!).

For example, in many biomedical applications of data science, the false discovery rate will compose part of your evaluation criteria. If your algorithm says that a molecule is present that in fact isn’t, and a researcher believes you, they might build their next study around that result!

Model Interpretationis not trivial in biological problems (is it ever?). Depending on how you construct your model, you may or may not be able to explain why it makes the decisions that it makes. Sometimes, this is ok, and sometimes it isn’t.

Model interpretation can be as simple as keeping track of feature importances (in tree based models), coefficients in regression models. Often these values can be directly compared to existing theories or other models, thereby placing your results in context.

In biological domains, understanding and communicating the limitations of a model is very important.

Data science is fundamentally constrained by data, so I’d start there when considering what you can or can’t say about your model’s conclusions.

Was your data local? Or did you analyze a larger global dataset? The latter will allow greater generalizability. Was the data experimental? If so, causal interpretations may be on the table.

How annotated was the data you used to train your model? Could that bias your model?

Understanding the domain and your data is not accessory to your data science, it’s fundamental to knowing what your model can and can’t do.

You must Collaborate

This goes without saying.

If you want to improve and be challenged, the best way to do that is work with lots of different people. Moreover, this becomes especially salient if those people have subject matter expertise in problems that you are interested in.

I don’t think it’s just wishful thinking when I say that lots of people out there are willing to share their subject matter expertise.

Bear in mind, a person who could help you doesn’t need a PhD, only more experience, knowledge or education than you currently have.

Take a risk, ask for help, share a problem. It is hardly an indictment on your character to ask for help so you really have nothing to lose.

Next steps:

Hopefully, when you have put the effort into understanding these aspects of your problem, you will be in a much better place to solve it.

In my personal experience, at Mass Dynamics, thoroughly pinning down both the problem and solution criteria is immensely useful. Whether it’s for your collaborators, customers or the general public, making an effort to understand your problem domain will create real dividends and drive success for all involved.

So do what you’d usually do, crunch the data, feature engineer, feature select, find the latent space, create embeddings, train your models, evaluate and tune. Do all this, knowing what your goal is, the true solution criteria and with a helping hand to guide you through.

**Special thanks to Ben Harper and others for his help editing this article.