Using the right evaluation metrics for your classification system is crucial. Otherwise, you could fall into the trap of thinking that your model performs well when in reality it doesn’t. In this post, you will learn why classifiers are trickier to evaluate, why a high classification accuracy is in most cases not as desirable as it sounds, which evaluation metrics are the right ones, and when you should use them. You will also discover how you can create a classifier with virtually any precision you want.

Table of Contents:

- Why is it important?

- Confusion Matrix

- Precision and Recall

- F-Score

- Precision/Recall Tradeoff

- Precision/Recall Curve

- ROC AUC Curve and ROC AUC Score

- Summary

Why is it important?

Evaluating a classifier is often much more difficult than evaluating a regression algorithm. A good example is the famous MNIST dataset, which contains images of handwritten digits from 0 to 9. If we wanted to build a classifier that detects 6s, the algorithm could classify every input as non-6 and still reach about 90% accuracy, because only about 10% of the images in the dataset are 6s. This is a major issue in machine learning and the reason why you need to look at several evaluation metrics for your classification system.
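To make the accuracy trap concrete, here is a minimal sketch of such an "always non-6" classifier. It assumes the class counts implied by the confusion matrix quoted later in this post (5,918 images of a 6 among 60,000 images); the variable names are only illustrative.

```python
import numpy as np

# Class counts implied by the confusion matrix quoted later in this post
# (assumption: 5,918 images of a 6 among 60,000 images in total).
n_total = 60_000
n_sixes = 5_918

# True labels: True means "this image is a 6".
y_true = np.zeros(n_total, dtype=bool)
y_true[:n_sixes] = True

# A useless "classifier" that answers "not a 6" for every single image.
y_pred = np.zeros(n_total, dtype=bool)

accuracy = (y_true == y_pred).mean()
detected_sixes = int((y_true & y_pred).sum())

print(f"accuracy: {accuracy:.3f}")        # accuracy: 0.901
print(f"6s detected: {detected_sixes}")   # 6s detected: 0
```

The accuracy looks respectable even though the model never finds a single 6, which is why accuracy alone tells you very little on imbalanced data.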

Confusion Matrix

First, you can take a look at the confusion matrix, which is also known as the error matrix. It is a table that describes the performance of a supervised machine learning model on test data for which the true values are known. Each row of the matrix represents the instances in an actual class while each column represents the instances in a predicted class (some sources use the opposite convention). It is called a "confusion matrix" because it makes it easy to spot where your system is confusing two classes.

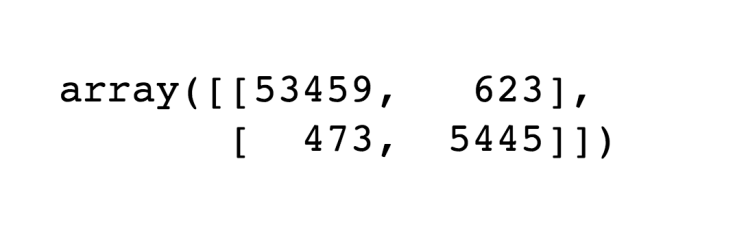

Below you can see the output of the "confusion_matrix()" function of sklearn, used on the MNIST dataset.

Each row represents an actual class and each column represents a predicted class.

The first row is about non-6 images (the negative class), where 53459 images were correctly classified as non-6s (called true negatives). The remaining 623 images were wrongly classified as 6s (false positives).

The second row represents the actual 6 images. 473 were wrongly classified as non-6s (false negatives). 5445 were correctly classified as 6s (true positives). Note that a perfect classifier would be right 100% of the time, which means it would have only true positives and true negatives.
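The matrix described above can be reproduced with a short sketch. It does not retrain the MNIST model; instead it rebuilds label arrays from the four counts quoted in the text (an assumption made purely for illustration) and passes them to sklearn's confusion_matrix().

```python
import numpy as np
from sklearn.metrics import confusion_matrix

# The four counts quoted in the text above.
tn, fp, fn, tp = 53459, 623, 473, 5445

# Rebuild label arrays that reproduce those counts (illustration only;
# in practice y_true comes from your dataset and y_pred from your model).
y_true = np.concatenate([
    np.zeros(tn + fp, dtype=bool),   # actual non-6s
    np.ones(fn + tp, dtype=bool),    # actual 6s
])
y_pred = np.concatenate([
    np.zeros(tn, dtype=bool), np.ones(fp, dtype=bool),   # predictions for non-6s
    np.zeros(fn, dtype=bool), np.ones(tp, dtype=bool),   # predictions for 6s
])

# Rows are actual classes, columns are predicted classes.
cm = confusion_matrix(y_true, y_pred)
print(cm.tolist())   # [[53459, 623], [473, 5445]]
```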

Precision and Recall

A confusion matrix gives you a lot of information about how well your model does, but there’s a way to get even more, such as computing the classifier’s precision. This is basically the accuracy of the positive predictions, and it is typically viewed together with the “recall”, which is the ratio of positive instances that are correctly detected.

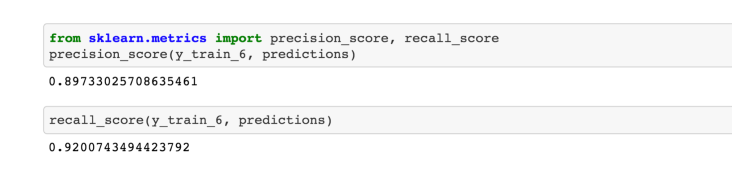

Fortunately, sklearn provides built-in functions to compute both of them:
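As a rough sketch of those calls, the snippet below applies precision_score() and recall_score() to label arrays rebuilt from the confusion matrix counts quoted earlier. The arrays are a stand-in for real model output, so small differences from the percentages quoted in the text can come from rounding.

```python
import numpy as np
from sklearn.metrics import precision_score, recall_score

# Counts from the confusion matrix quoted earlier in the post.
tn, fp, fn, tp = 53459, 623, 473, 5445

# Stand-in label arrays that reproduce those counts (illustration only).
y_true = np.concatenate([
    np.zeros(tn + fp, dtype=bool),
    np.ones(fn + tp, dtype=bool),
])
y_pred = np.concatenate([
    np.zeros(tn, dtype=bool), np.ones(fp, dtype=bool),
    np.zeros(fn, dtype=bool), np.ones(tp, dtype=bool),
])

precision = precision_score(y_true, y_pred)   # TP / (TP + FP)
recall = recall_score(y_true, y_pred)         # TP / (TP + FN)

print(f"precision: {precision:.3f}")   # precision: 0.897
print(f"recall:    {recall:.3f}")      # recall:    0.920
```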

Now we have a much better evaluation of our classifier. When our model claims that an image shows a 6, it is correct 89% of the time (precision). The recall tells us that it detected 92% of the actual 6s. But there is still a better way!

F-Score

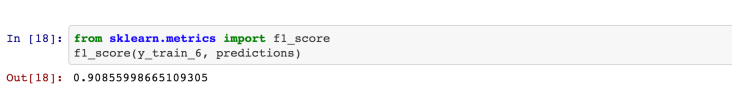

You can combine precision and recall into one single metric, called the F-score (also called the F1-score). The F-score is really useful if you want to compare two classifiers. It is computed as the harmonic mean of precision and recall, which gives much more weight to low values. As a result, a classifier will only get a high F-score if both recall and precision are high. You can easily compute the F-score with sklearn.

Below you can see that our model gets a 90% F-score:
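To see where that number comes from, here is a small sketch that computes the harmonic mean by hand from the rounded precision and recall quoted above. The helper f_score() is a hypothetical name used only for this illustration; with real label arrays you would normally call sklearn's f1_score() instead.

```python
def f_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (the F1-score)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Rounded precision and recall of the model discussed in this post.
model_f = f_score(0.89, 0.92)

# A lopsided classifier: perfect precision but very low recall.
lopsided_f = f_score(1.00, 0.10)
lopsided_mean = (1.00 + 0.10) / 2   # plain arithmetic mean, for comparison

print(f"{model_f:.3f}")         # 0.905
print(f"{lopsided_f:.3f}")      # 0.182
print(f"{lopsided_mean:.3f}")   # 0.550
```

The second case shows why the harmonic mean is used: the arithmetic mean still looks acceptable, while the F-score is dragged down by the low recall.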

But unfortunately, the F-score isn’t the holy grail and has its tradeoffs. It favors classifiers that have similar precision and recall. This is a problem because you sometimes want high precision and sometimes high recall. The catch is that increasing precision reduces recall and vice versa. This is called the precision/recall tradeoff, and we will cover it in the next section.

Precision/Recall Tradeoff

To illustrate this tradeoff a little bit better, I will give you examples of when you want a high precision and when you want a high recall.

High precision:

You probably want high precision if you trained a classifier to detect videos that are suitable for children. You want a classifier that may reject a lot of videos that would actually be suitable for kids, but that never shows a video containing adult content and therefore only shows safe ones (i.e., high precision).

High recall:

An example where you would need high recall is a classifier that detects people who are trying to break into a building. It would be fine if the classifier has only 25% precision (which results in some false alarms), as long as it has 99% recall and alerts you nearly every time someone tries to break in.

To understand this tradeoff even better, we will look at how the SGDClassifier makes its classification decisions on the MNIST dataset. For each image it has to classify, it computes a score based on a decision function. It assigns the image to the positive class when the score is greater than the threshold and to the negative class when the score is smaller than the threshold.

The picture below shows digits that are ordered from the lowest score (left) to the highest score (right). Suppose you have a classifier that should detect 5s and the threshold is positioned in the middle of the picture (at the central arrow). To the right of it you would then find four true positives (actual 5s) and one false positive (actually a 6). This threshold results in 80% precision (4 out of 5), but out of the six actual 5s in the picture, the classifier only identifies four, so the recall is 67% (4 out of 6). If you now moved the threshold to the right arrow, precision would go up and recall would go down; moving it to the left arrow has the opposite effect.
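The threshold example can be replayed in a few lines. The label sequence and the scores below are hypothetical: they are chosen only so that the central threshold reproduces the four true positives, one false positive, and six actual 5s described above. With a real SGDClassifier, the scores would come from its decision_function() method.

```python
import numpy as np

# Hypothetical digits, ordered from lowest to highest score.
# True marks an actual 5; there are six of them, as in the example above.
is_five = np.array([0, 0, 0, 0, 1, 0, 1, 1, 0, 1, 1, 1], dtype=bool)
# Hypothetical scores: simply 0, 1, 2, ... in the same order.
scores = np.arange(len(is_five), dtype=float)


def precision_recall_at(threshold: float) -> tuple[float, float]:
    """Precision and recall when everything scoring above threshold is called a 5."""
    predicted = scores > threshold
    tp = int((predicted & is_five).sum())
    fp = int((predicted & ~is_five).sum())
    fn = int((~predicted & is_five).sum())
    precision = tp / (tp + fp) if (tp + fp) else float("nan")
    recall = tp / (tp + fn)
    return precision, recall


results = {}
for name, threshold in [("left", 3.5), ("center", 6.5), ("right", 8.5)]:
    results[name] = precision_recall_at(threshold)
    p, r = results[name]
    print(f"{name:>6}: precision={p:.2f} recall={r:.2f}")
#   left: precision=0.75 recall=1.00
# center: precision=0.80 recall=0.67
#  right: precision=1.00 recall=0.50
```

Moving the threshold to the right buys precision at the cost of recall, and moving it to the left does the opposite.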

Precision/Recall Curve

The trade-off between precision and recall can be observed using the precision-recall curve, and it lets you spot which threshold is the best.

Another way is to plot the precision and recall against each other:

In the image above you can clearly see that the recall falls off sharply at a precision of around 95%. You therefore probably want to select a precision/recall tradeoff before that point, maybe at around 85%. With the two plots above, you can now choose the threshold that gives you the best precision/recall tradeoff for your current machine learning problem. If you want, for example, a precision of 85%, you can look at the first plot and see that you would need a threshold of around – 50,000.
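Reading the threshold off a plot can also be done programmatically. The sketch below uses sklearn's precision_recall_curve() on synthetic scores (two overlapping Gaussians, an assumption that stands in for the real MNIST decision scores) and picks the lowest threshold that reaches a target precision of 85%.

```python
import numpy as np
from sklearn.metrics import precision_recall_curve, precision_score, recall_score

# Synthetic stand-in for real decision scores (assumption for illustration):
# negatives score around -1, positives around +1, with some overlap.
rng = np.random.default_rng(0)
y_true = np.concatenate([np.zeros(500, dtype=bool), np.ones(500, dtype=bool)])
scores = np.concatenate([rng.normal(-1.0, 1.0, 500), rng.normal(1.0, 1.0, 500)])

precisions, recalls, thresholds = precision_recall_curve(y_true, scores)

target_precision = 0.85
# precisions and recalls have one more entry than thresholds,
# so the last entry is dropped to line them up.
reaches_target = precisions[:-1] >= target_precision
if not reaches_target.any():
    raise ValueError("no threshold reaches the target precision")

# thresholds are sorted in increasing order, so the first hit is the lowest one.
idx = int(np.argmax(reaches_target))
threshold = thresholds[idx]

# Apply the chosen threshold and check the resulting tradeoff.
y_pred = scores >= threshold
chosen_precision = precision_score(y_true, y_pred)
chosen_recall = recall_score(y_true, y_pred)
print(f"threshold={threshold:.3f} "
      f"precision={chosen_precision:.3f} recall={chosen_recall:.3f}")
```

Choosing the lowest qualifying threshold keeps recall as high as possible for the requested precision.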

ROC AUC Curve and ROC AUC Score

The ROC curve is another tool to evaluate and compare binary classifiers. It resembles the precision/recall curve but plots different quantities: instead of precision versus recall, it plots the true positive rate (another name for recall) against the false positive rate (the ratio of negative instances that are incorrectly classified as positive).
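As a quick worked example of these two rates, the snippet below computes them from the confusion matrix counts quoted earlier in this post. The result is a single point on the ROC curve, the one that belongs to the threshold the model used for those predictions.

```python
# Counts from the confusion matrix quoted earlier in the post.
tn, fp, fn, tp = 53459, 623, 473, 5445

# True positive rate (recall): share of actual 6s that were detected.
tpr = tp / (tp + fn)
# False positive rate: share of actual non-6s that were flagged as 6s.
fpr = fp / (fp + tn)

print(f"TPR: {tpr:.3f}")   # TPR: 0.920
print(f"FPR: {fpr:.4f}")   # FPR: 0.0115
```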

Of course, we also have a tradeoff here: the higher the true positive rate, the more false positives the classifier produces. The red line in the middle represents a purely random classifier, so your classifier’s curve should be as far away from it as possible (toward the top-left corner).

The ROC curve also provides a way to compare two classifiers, by measuring the area under the curve (AUC). This is the ROC AUC score. Note that a classifier that is 100% correct would have a ROC AUC of 1, while a completely random classifier would have a score of 0.5. Below you can see the output of the MNIST model:
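Here is a minimal sketch of how such a score can be computed with sklearn's roc_curve() and roc_auc_score(). It uses synthetic scores rather than the MNIST model, and it uses a constant score as a stand-in for an uninformative classifier; both are assumptions made for illustration.

```python
import numpy as np
from sklearn.metrics import roc_auc_score, roc_curve

# Synthetic stand-in for real decision scores (assumption for illustration).
rng = np.random.default_rng(0)
y_true = np.concatenate([np.zeros(500, dtype=bool), np.ones(500, dtype=bool)])
model_scores = np.concatenate([rng.normal(-1.0, 1.0, 500), rng.normal(1.0, 1.0, 500)])

# Two reference points: scores that separate the classes perfectly,
# and a constant score that carries no information at all.
perfect_scores = y_true.astype(float)
constant_scores = np.zeros(len(y_true))

# Points of the ROC curve, ready to be plotted as tpr against fpr.
fpr, tpr, thresholds = roc_curve(y_true, model_scores)

auc_model = roc_auc_score(y_true, model_scores)
auc_perfect = roc_auc_score(y_true, perfect_scores)     # 1.0
auc_constant = roc_auc_score(y_true, constant_scores)   # 0.5

print(f"model: {auc_model:.3f}  perfect: {auc_perfect}  constant: {auc_constant}")
```

A useful model lands somewhere between the two reference values, and the closer to 1 the better.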

Summary

We learned a lot in this post: how to evaluate classifiers and which tools to use, how to select the right precision/recall tradeoff, and how to compare different classifiers with the ROC curve or the ROC AUC score. We also saw how to create a classifier with virtually any precision we want, and why this is not as desirable as it sounds: a high precision is not very useful in combination with a low recall. So the next time you hear someone talking about a precision or accuracy of 99%, you know that you should ask them about the other metrics we discussed in this post.