Ready to learn Data Science? Browse courses like Data Science Training and Certification developed by industry thought leaders and Experfy in Harvard Innovation Lab.

Data is growing at an astounding rate. Humanity is moving to a universe of sensors or “resolution revolution” where more granular data is being produced at a rate than can be truly analyzed and actioned upon. As we move into a world of IoT, Industrial IoT, social media, people analytics ultimately to automation, machine learning and artificial intelligence, the hot topics seem to focus on the end of the value chain of data science rather than the bulk of the process in data management. The hype in the “what could be” or artificial intelligence curing cancer supersedes the effective and tangible roadmap to get there. The industry must consider alleviating the bottlenecks of data management and leverage metadata management as a strategy baked into product management and innovation at the organizational level to unlock the value of data science.

This paper outlines the foundations of big data, new programming skills and changing environments, time required for data preparation and value in managing metadata management as a system or strategy directly correlated to success in business analytics, BI and data science. Metadata management will be defined as the factory in productionizing data science and the defining factor in yielding meaningful insights.

What is Big Data?

The term Big Data means different things to different people. What we call Big Data is the data measured in Terabytes, and not Gigabytes. Well, how much space is one Gigabyte? We need to understand how storage is measured. A bit, the smallest storage space, is equivalent to 1=0, and off =0. A byte, is 8 – 28 = 256 and 16 bits = double-byte = 256 X 256 = 65,536 bits. This goes forward to storage measurements using the international system of units, every 1,000 bytes (103) is given a distinct name, being Kilobyte (103); Megabyte (106); Gigabyte (109); Terabyte (1012); Petabyte (1015), Exabyte (1018), Zettabyte (1021) and Yottabyte (1024). The industry has seen that traditional databases struggle to keep up at the Terabyte level. However, what do these numbers mean? In a post from Business Insider in 2013, the company announced that Facebook has about 1.15 billion users and each user having over 200 pictures. That amounts to about 230,000,000,000 imagines, and if each image is about 3MB, then the picture database size at Facebook is 690,000,000,000,000,000 bytes or 690 Petabytes or PB. That same post also indicated that this size of the image storage increases by 350 million photos each day.1 Over a decade ago, it was published that Google processed 24 Petabytes (compare that to the 50 petabytes of all of the written works of mankind from the beginning of recorded history in all languages) of data per day, all suggesting the volume categorization of Big Data.

In addition, the velocity and processing issue is pushing new highs, and in 2014 ACI information group announced that every minute:

- Facebook users share nearly 2.5 million pieces of content

- Twitter users tweet nearly 300,000 times

- Instagram users post nearly 220,000 photos

- YouTube users upload 72 hours of new video content

- Apple users download nearly 50,000 apps

- Email users send over 200 million messages

- Amazon generates over $800,000 in online sales

We are dealing with data growth, and the 4 “V”s or characteristics of Big Data are data grows very fast (Velocity) such as 24 PB per day (Google); Data is in all kinds of formats, both structured and unstructured (Variety); Data size is humongous (Volume) such as 1.5 PB (Facebook); Data is not clean nor reliable (Veracity). We need to be able to process humongous amount of information from all kinds of sources at a fast pace. Companies are struggling to store, manage and analyze the data they do have, nonetheless take advantage of the emergence of data science. The question that many organizations are faced with is how to store these types of data with traditional computer architectures, disk storage and relational databases? We have come to realize that the answer is indeed no, and came the totally different approach to how we store the information and how to process it. Welcome to the world of Big Data, parallel processing and NoSQL.

With the inability to scale-up to meet the needs of ever-growing data, data growth required a scale-out approach due to coordination overhead, communication delay, remote file access, remote procedure calls, sharing disks, sharing memory, etc – all issues that any distributed system must deal with. The distributed system is multiple computers that work together cohesively with parallel programming support, distributed (or parallel) operating system, distributed file system and distributed fault tolerance. Welcome to the world of Hadoop and NoSQL.

Thus, Hadoop is a reliable, fault-tolerant, high performance distributed parallel programming framework for large scale data written in Java. Cloudera and Hortonworks have Hadoop environments to have a UI framework, SDK, Workflow, Scheduling, Metadata, Data Integration, Scheduling, Metadata, Languages, Compliers, Read/Write Access and Coordination. Hue, Oozie, Pig, Hive, Hbase, Flume, Scoop, Zookeeper are evolving into other preferred environments and languages. It’s interesting to note that Pig was created at Yahoo as an easier programming language to their limited skills within their company… using Hadoop to focus more on analyzing large data sets and spend less time having to write mapper and reducer programs. Like actual pigs, who eat almost anything, the Pig programming language is designed to handle any kind of data. The takeaway is that it is very difficult to automate or keep this orchestration of Big Data moving and flowing in the right direction, namely to the business or organization to answer questions. It must therefore be deduced that managing the Big Data orchestration is critical to gleaning insights. Insights or pattern exploration with unsupervised learning, machine learning, deep learning is the next frontier, but we must flow data constantly to leverage those techniques. This entire orchestration of Big Data is complex, but it assumes the music will continue to play if data is available to it if all those complexities are overcome.

The players that leverage Big Data are well-known, those being Google, Facebook, Apple and Amazon. They have so much data they can provide hyper-customized deals and have a deeper understanding of their customers and business, automatic pattern and trend detection for the metric, data-driven organization providing value. They have the skillsets to harness and deploy productionized data environments for near real-time changes in this ever-changing marketplace. In many cases, they have only scraped the surface with structured data at this rate, but will continue to make advances as they automate missing data and impute with success. However, if this is the case, the rest must look to data preparation not as a major bottleneck, but rather, as a strategic and fundamental imperative in feeding Big Data, Data Science and the business.

Data Preparation as Most Time-Consuming Task

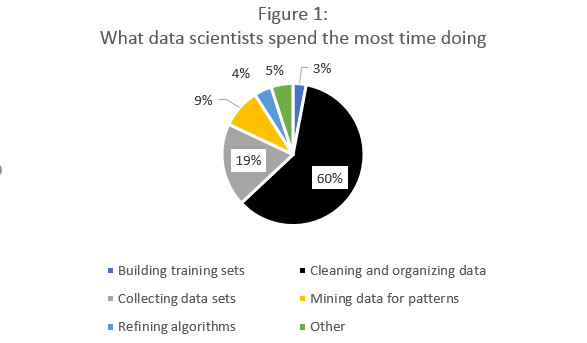

Data preparation accounts anywhere from 80-90% of the work of data scientists. They spend 60% of their time on cleaning and organizing data and 19% of their time on collecting data sets, meaning data scientists spend around a whopping 80% of their time preparing and cleaning their data for analysis.5, what I refer to as the black hole. The number is about the same for their least enjoyable parts of data science.

The black hole is a massive amount of time met with an equal proportion of disdain by those doing that work. And again, most of the love is given to the shiny presentation layer, the end-results of analytics or visualization. However, we must be able to serve the data to the business more quickly under an increasingly stressed data organization with Big Data volume and velocity. The answer lies in metadata. David Lyle, VP of Business Transformative Services at Informatica, wrote that “the difference between success and failure is proportional to the investment an organization makes in its metadata management system”.6 He goes on to state to automate the process to reuse the cleansed data or production through careful preparation, annotation, and semantically define all data to make it useable and trustworthy. These small patterns that are repeating further bogs down the machine towards information production. If we harness the power of metadata, we construct the factory with metadata as the solid foundation that all information tools and data discovery can stand upon. It is vital for organizations to meet the increasing need for analytics and decision making driven by data needed by marketing professionals.

Metadata Management as Core Competency to Data-Driven Organizations

With Big Data increasing and the time-consuming task of data preparation, the industry is looking for better ways to improve efficiency and speed up the change of data into meaningful information. With that said, when analyzing the data journey and the constituents data serves, metadata is the common recurring theme that enables an organization that replaces “data wrangling” with data discovery. One has to look at the opportunity costs of a limited time and value data scientists or data analysts spending his or her time on data preparation. Data Scientists or computer programmers are considered some of the highest paid jobs, yet we have their time allocated to tasks that truly do not affect the bottom line. The bulk of their time, as we have seen, are spent on potentially getting to those bottom line questions. BI groups put in lots of valuable time and effort to manually discover, find and understand metadata while the business loses precious time to market.

Metadata management must be a core competency, a place of innovation and of strategic importance. Organizations must regularly audit their metadata and data preparation processes in order to compete. In addition, data governance and stewardship are now considered vital. Those who see governance and security as places of innovation will lead the next-generation of organizations. Data regulations and sensitive data will need to be managed, and it all starts at a metadata level in order leverage, yet protect those it intends to serve.

Enabling visibility and control of metadata that is scattered across the enterprise data landscape is key to success. The key is tools and a management process that turns weeks of these manual processes into minutes and enables the business to move faster, allowing self-service capabilities for exploration. The process should allow to share and reuse the data and knowledge about the data. Metadata scanning can automatically gather from a wide variety of sources, including ETL, databases and reporting tools. Secondly, metadata should be stored and managed in a central repository to enable share and reuse data sets, data definitions, metadata and master data. Thirdly, metadata understanding, with smart algorithms, should model and index all types to enable the user to quickly locate and understand cross connections across connections. Fourthly, a central tool, namely a smart search engine using hundreds of crawlers to enable searches of all metadata to present results in seconds. Lastly, a visual mapping tool to create full lineage of the data journey as it flows through multi-vendor systems to assist in the data context to continually improve the management process.

David Stodder’s recommendations in Improving Data Preparation for Business Analytics in TDWI’s Q3 2016 Best Practices Report serves as a constructive guide on the overarching themes to create an environment for more productive users. Make shortening time to achieving business insight a data preparation improvement priority by focusing on reducing time how long data preparation takes to deliver valuable data by using innovative technologies and methods to higher levels of repeatability through shared data catalogs, glossaries, and metadata repositories as part of the data preparation process.7

While all the above-mentioned reasons are strong reasons to productionize metadata strategies with strong tools and thought leadership, the General Data Protection Regulation (GDPR) will come into effect in May of 2018. This means that all businesses and organizations that handle EU customer, citizen or employee data must comply with the guidelines imposed by GDPR.8 The regulation will require consent management, data breach notifications, the potential appointment of a data protection officer, privacy by default processes, privacy impact assessments as well as understanding the rights of data owners.9

Let’s look at those regulatory guidelines and what they mean in reality. Consent management will require the process data under the GDPR will be governed by the consent of the owner, and metadata enables to register and administer the consent. Data breach notifications require metadata to provide information on the creation date of the information, the location and name of the database comprised and when the data breach took place. If a data protection officer comes into being as a role or a consideration, the metadata repository will be the main source of information for processes and measures needed to be taken for protection of personal information. Privacy by default will require that only information that meets specific criteria for the overall purpose of the business, and metadata can assist in enforcing specific data processing are performed according to specific guidelines.10 Organizations will be required to perform Privacy Impact Assessments (PIA). Documentation will be greatly enhanced and accessible for PIAs with metadata and its governance. Lastly, the rights of data owners will increasingly become an issue and the time to start for 2018 is now to mitigate any business risk. Rising to the level of metadata management and process internally will allow one to deal with the complexity of compliance. As anything, if you harness change and that complexity, it can become of source of innovation and market leadership.

Over the years of discussions with various companies across various verticals, listed below is illustrative of the feedback from thought leaders as well as power users of metadata:

Claudia Imhoff, Ph.D., President Intelligent Solutions, Inc. states “A centralized metadata solution is needed in order to be a successful hunter-gatherer in the new complex world of data analytics”.

Bala Venkatraman, BI Expert states a metadata strategy and tool “transformed metadata from a nice-to-have to a must have” and continues, “democratization of the data landscape which in turn yields an overall increased efficiency for any BI team…”.

Revital Mor, Head of BI for Harel Insurance states “easy way for mapping data flow from end to end. It’s an important and necessary for any organization that values data accuracy”.

Consider some typical scenarios and tangible outcomes below as a summation and case for action:

Business Challenge #1: A business user discovered inaccurate information on revenue reports and loses precious time to business waiting for the BI group to respond.

BI Challenge #1: To reduce the amount of time and resources required to locate the source of inaccurate reports and correct the problem, while maintaining accuracy.

How BI worked prior to Metadata tools and strategic imperative #1: In order to identify the source of the problem, BI groups had to manually trace the data to discover and understand all the hops that the data went through to land in a specific report. They had to analyze which database tables and ETL processes were involved in and affected by the particular inaccuracy and correlate the metadata to the other reports that were affected – a very expensive, error-prone and time-consuming process.

Metadata tools and strategic solution #1: Enabling visibility and control of metadata, it can assist the information team to discover all related ETL processes and tables use to produce a report in a matter of seconds. It can compare multiple reports to show specific metadata items, fix the problem with all the metadata centralized in one place with a search that is simple, accurate and effective.

Value to the organization #1: Cut the metadata search and tracing costs by more than 50% with a faster time-to-market than initially estimated with little to no business disruptions or production issues when fixing the problem.

Business Challenge #2: New regulation calling for companies for change HR headcount reporting from monthly to daily must be implemented accurately and quickly, without business disruptions.

BI Challenge #2: The BI group must quickly and accurately locate the formula calculating the headcount in reports, database tables, ETL processes, views and stored procedures – without additional manpower. The BI group must also understand all possible impacts of making the change.

How BI worked prior to Metadata tools and strategic imperative #2: In order to locate the specific calculation, BI had to manually map out the entire BI landscape. With metadata scattered all over the place, this is a very time consuming and therefore costly process, which can require multiple development and design cycles before completing the change.

Metadata tools and strategic solution #1: Enable BI to make the change easily in a fraction of the time with cross-platform metadata search engine. Easily and quickly map out the entire BI landscape with a detailed visual map showing where the calculation is being made in every system; discover the exact ETL processes and tables that make up a report; show all objects related to the calculation instances throughout the entire landscape on one screen; and implement the change quickly and accurately.

Value to the organization #2: Cut the project costs by more than 50%; complete project faster than originally estimated; elimination of overtime hours or additional staff; zero business disruptions or production issues.

References

By: Gal Ziton, CTO & Co-Founder, Octopai & Dan Yarmoluk, ATEK Access Technologies, All Things Data Podcast