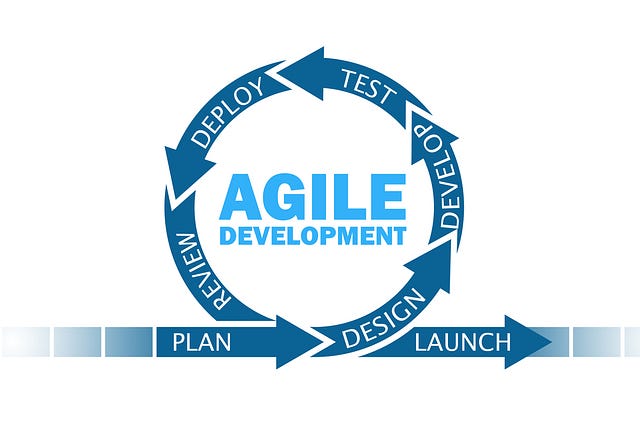

A few days ago I read two great posts on agile data science project management by Shay Palachy and Ori Cohen. They discuss the phases of a data science project and how these differ from regular software projects. The posts inspired me to write my own view on agile data science, focusing on the research methodology rather than on the project phases as a whole.

Agile data science research is hard. How can you give a time estimate when you are not sure that your problem is solvable? How can you plan your sprint before looking at the data? You probably can’t. Agile data science requires many adjustments. In this post, I am going to share the practices that work best for me in agile data science research.

Set the project goals

Every machine learning project should start by defining its goals. We must define what a good result is in order to know when to stop the research and move on to the next problem. This phase is usually done together with the business stakeholders.

The goal is defined by 3 questions:

- What is the KPI that we are optimizing? This is perhaps the most important question in the project. The KPI must be measurable on a test set, but it should also correlate as closely as possible with the business KPI.

- What is the evaluation method? What is the size of the test set? Do we need a time series split or a group split? Do we need an online test?

- What is the minimum valuable KPI? Sometimes the machine learning model will replace a simple heuristic, and even 65% accuracy will be very valuable for the business. We need to define what counts as success.
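To make the three answers concrete, they can be written down as a small object next to the evaluation code. The sketch below is only an illustration: `ProjectGoal`, `time_ordered_split`, and their parameters are hypothetical names, and it assumes a KPI where higher is better and rows that carry a timestamp.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProjectGoal:
    """Hypothetical container for the three answers agreed with stakeholders."""
    kpi_name: str                # the offline metric we optimize
    evaluation: str              # how the metric is measured
    minimum_valuable_kpi: float  # the score at which the model is worth shipping

    def is_success(self, score: float) -> bool:
        # Assumption: a higher score is better (e.g. accuracy).
        return score >= self.minimum_valuable_kpi


def time_ordered_split(rows, timestamp_key, test_fraction=0.2):
    """Hold out the most recent rows as the test set.

    The test rows are always at least as recent as every training row,
    which mimics predicting the future from the past.
    """
    ordered = sorted(rows, key=lambda row: row[timestamp_key])
    n_test = max(1, int(len(ordered) * test_fraction))
    return ordered[:-n_test], ordered[-n_test:]


goal = ProjectGoal("accuracy", "time-ordered holdout", 0.65)
rows = [{"day": day} for day in (7, 2, 9, 4, 1, 8, 3, 10, 6, 5)]
train_rows, test_rows = time_ordered_split(rows, "day", test_fraction=0.2)
```

A group split would follow the same idea, except that whole groups (for example, all rows of one customer) go to one side of the split.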

Always compare to a baseline model

What counts as good performance is a hard question; the answer depends heavily on how hard the problem is and on what the business needs. My advice is to start your modeling by building a simple baseline. It can be a simple machine learning model with basic features, or even a business rule (a heuristic) such as the average label within an important category. This way we can measure our performance relative to the baseline and monitor our improvement on the task.
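As a minimal sketch of the "average label in an important category" baseline, using only the standard library: the function names are hypothetical, and the fallback to the global mean for unseen categories is an assumption of this example, not something the heuristic requires.

```python
from collections import defaultdict
from statistics import mean


def fit_category_mean_baseline(categories, labels):
    """Return a predict(category) function based on per-category label means."""
    global_mean = mean(labels)
    grouped = defaultdict(list)
    for category, label in zip(categories, labels):
        grouped[category].append(label)
    category_means = {c: mean(values) for c, values in grouped.items()}

    def predict(category):
        # Assumption: unseen categories fall back to the global mean.
        return category_means.get(category, global_mean)

    return predict


def mean_absolute_error(y_true, y_pred):
    return mean(abs(t - p) for t, p in zip(y_true, y_pred))


predict = fit_category_mean_baseline(["a", "a", "b"], [1.0, 3.0, 10.0])
baseline_error = mean_absolute_error([2.0, 9.0], [predict("a"), predict("b")])
```

Any later model is then judged against `baseline_error` on the same test set, rather than against an absolute number.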

Start with a simple model

Iteration is one of the core characteristics of agile development. In a data science project we don’t iterate on features the way an engineering team does; we iterate on models. Starting with a simple model with a small number of features and making it more complex step by step has many advantages. You can stop at any point where your model is good enough, saving time and complexity. You know exactly how every change affected model performance, which gives you intuition for your next experiments. And, maybe most importantly, adding complexity iteratively makes it much easier and faster to find bugs and data leakage in your model.
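One lightweight way to keep track of "how every change affected the model" is a running log of changes and scores. This is a sketch under assumptions: `summarize_iterations` is a hypothetical helper, and flagging a jump larger than `suspicious_jump` as possible leakage is an illustrative rule of thumb, not an established threshold.

```python
def summarize_iterations(steps, suspicious_jump=0.10):
    """steps: list of (change description, score) in the order they were run."""
    report = []
    previous_score = None
    for change, score in steps:
        delta = None if previous_score is None else score - previous_score
        report.append({
            "change": change,
            "score": score,
            "delta": delta,
            # A sudden large gain from one small change deserves a leakage check.
            "check_for_leakage": delta is not None and delta > suspicious_jump,
        })
        previous_score = score
    return report


report = summarize_iterations([
    ("baseline", 0.60),
    ("add price features", 0.63),
    ("add account status feature", 0.95),
])
```

Here the last step would be flagged, since a single feature lifting the score from 0.63 to 0.95 is more likely a leak than a breakthrough.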

Plan sub-goals

Planning research projects is hard because they carry a great deal of uncertainty. In my experience, it is best to plan your projects using sub-goals. Data exploration, data cleaning, dataset building, feature engineering, and modeling are small parts of the research that you can plan at least a few weeks ahead. These sub-goals can bring value on their own, even without the final model. For example, after data exploration the data scientist can bring actionable insights to the business people, and a cleaned, well-built dataset can immediately help other data scientists and analysts with their own projects.

Fail fast

Failing fast is maybe my most important point, and probably the hardest to do. At each iteration you must ask yourself: what is the probability that the model performance will reach the minimum valuable KPI? I think that making the model more complex iteratively really helps here. Adding more features and trying more models usually gives incremental improvements. If your model performance is 70% and your minimum valuable KPI is 90%, you are probably not going to get there, so you need to either stop the project and move on to the next problem, or change something drastic, like changing your label or tagging much more data. I am not saying that you shouldn’t try to solve very hard problems; just make sure that you are not wasting time on methods that probably won’t reach your project goals.
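The question above can be turned into a rough sanity check: at the recent pace of improvement, how many more iterations would it take to close the gap? The sketch below is a hypothetical heuristic, not a statistical estimate. It extrapolates linearly from the last few gains, which is optimistic because gains usually shrink over time, and `budget` (the number of iterations you are willing to spend) is an assumed parameter.

```python
import math


def iterations_needed(scores, target, window=3):
    """Estimate remaining iterations at the recent average gain.

    Returns 0 if the target is already met, and None if there is
    too little history or no recent progress to extrapolate from.
    """
    gap = target - scores[-1]
    if gap <= 0:
        return 0
    recent = scores[-(window + 1):]
    gains = [after - before for before, after in zip(recent, recent[1:])]
    if not gains:
        return None
    average_gain = sum(gains) / len(gains)
    if average_gain <= 0:
        return None
    return math.ceil(gap / average_gain)


def should_stop(scores, target, budget, window=3):
    """True when the target looks out of reach within the iteration budget."""
    if len(scores) < 2:
        return False  # not enough evidence yet
    needed = iterations_needed(scores, target, window)
    return needed is None or needed > budget


stuck = should_stop([0.60, 0.66, 0.69, 0.70], target=0.90, budget=3)
close = should_stop([0.80, 0.85, 0.88], target=0.90, budget=3)
```

In the first history the gains are shrinking and the gap is 20 points, so the check says stop; in the second, one more iteration at the recent pace would be enough. The output is a prompt for a decision, such as changing the label or tagging more data, not a verdict.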

Move to production ASAP

My last piece of advice is to deploy your model to production at the earliest possible point, or shortly after the point at which it becomes valuable. I know that your final model may end up with totally different features and that a lot of the work will be thrown away. But first, your model already provides value, so why wait? Second, and more importantly, production often has its own constraints: some features are not available in the production systems, some features arrive in different formats, or maybe your model is too slow or uses too much RAM. Solving these problems early can save a lot of time spent on modeling that would never be viable in production.
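Some of these constraints can be checked long before a real deployment. The following is a minimal sketch, assuming you can list the features available in production and have a latency budget per prediction; `check_production_readiness` and all of its parameters are hypothetical names for illustration.

```python
import time


def check_production_readiness(predict, sample_rows, model_features,
                               production_features, max_latency_ms):
    """Return a list of human-readable problems; an empty list means no issue found."""
    problems = []

    # Features the model uses that the production system cannot provide.
    missing = sorted(set(model_features) - set(production_features))
    if missing:
        problems.append(f"features unavailable in production: {missing}")

    # Average wall-clock time per prediction on a small sample.
    start = time.perf_counter()
    for row in sample_rows:
        predict(row)
    elapsed_ms = (time.perf_counter() - start) * 1000 / max(1, len(sample_rows))
    if elapsed_ms > max_latency_ms:
        problems.append(f"average latency {elapsed_ms:.1f} ms exceeds {max_latency_ms} ms")

    return problems


problems = check_production_readiness(
    predict=lambda row: 0,  # stand-in for a real model
    sample_rows=[{"price": 10.0}] * 100,
    model_features=["price", "clicks_7d"],
    production_features=["price"],
    max_latency_ms=1000,
)
```

Memory usage and feature format mismatches would need their own checks; the point is to surface these problems while changing the model is still cheap.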