
It is easy to forget how much data is stored in the conversations we have every day. As the digital landscape evolves, tapping into text through Natural Language Processing (NLP) has become a growing field in artificial intelligence and machine learning. This article covers the common pre-processing concepts applied to NLP problems.

Text can come in a variety of forms, from a list of individual words, to sentences, to multiple paragraphs with special characters (tweets, for example). As with any data science problem, understanding the questions being asked will inform which steps to use to transform words into numerical features that work with machine learning algorithms.

The History of NLP


When I was a kid, sci-fi almost always had a computer that you could bark orders at and have them understood and sometimes, but not always, executed. At the time the technology seemed far in the future, but today I carry a phone in my pocket that is smaller and more powerful than any of those imagined machines. The history of speech-to-text is long and complicated, but it was the seed for much of NLP.

Early efforts required large amounts of manually coded vocabulary and linguistic rules. The first automatic translations, from Russian to English at Georgetown in 1954, were limited to a few dozen carefully selected sentences.

In the mid-1960s, ELIZA, one of the first chatbots, was created at MIT. Built on pattern matching and substitution, it mimicked the therapy process by asking open-ended questions. While it seemed to replicate awareness, it had no real understanding of the conversation's context. Even with these limited capabilities, many people were surprised by how human the interactions felt.

Much of the growth in the field really started in the 1980s with the introduction of machine learning algorithms. Researchers moved from the more rigid Transformational Grammar models to looser probabilistic relationships, such as those described in Cache Language Models. These scaled more quickly and handled unfamiliar inputs with greater ease.

Through the 1990s the exponential increase in computing power helped drive progress, but it wasn’t until 2011, when IBM’s Watson competed on Jeopardy!, that the progress of computer intelligence became visible to the general public. For me, it was the introduction of Siri on the iPhone later that same year that made me realize the potential.

The current NLP landscape could easily be its own article. Unprecedented investment from private companies and a generally open-source attitude have opened up something that was once largely exclusive to a much larger audience and range of applications. One fascinating example is translation, where Google is working on translating any language on the fly (even if some bugs in the user experience still need to be worked out).

The Importance of Pre-Processing


None of the magic described above happens without a lot of work on the back end. Transforming text into something an algorithm can digest is a complicated process. There are four different parts:

Cleaning consists of getting rid of the less useful parts of text through stopword removal, dealing with capitalization and special characters, and handling other details.

Annotation consists of the application of a scheme to texts. Annotations may include structural markup and part-of-speech tagging.

Normalization consists of translating (mapping) terms in the scheme, or applying linguistic reductions through stemming, lemmatization and other forms of standardization.

Analysis consists of statistically probing, manipulating and generalizing from the dataset for feature analysis.

The Tools


There are a variety of pre-processing methods. The list below is far from exhaustive, but it does give an idea of where to start. It is important to realize that, as with all data problems, converting anything into a format for machine learning reduces it to a generalized state. This means losing some of the fidelity of the data along the way. The true art is understanding the pros and cons of each method and carefully choosing the right ones.

Capitalization


Text often uses a variety of capitalization to mark the beginning of sentences, proper nouns and emphasis. The most common approach is to reduce everything to lower case for simplicity. However, some words, like “US” becoming “us”, can change meaning when lower-cased.
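The following is a minimal sketch of the trade-off, assuming the text is already tokenized. Plain `str.lower()` merges “US” and “us”. One possible workaround keeps a hand-picked set of acronyms intact. The `PROTECTED` set and the `lowercase_tokens` helper are hypothetical illustrations, not part of any library.

```python
# Hypothetical hand-picked all-caps terms whose meaning changes when lower-cased.
PROTECTED = {"US", "IT", "WHO"}

def lowercase_tokens(tokens, protected=PROTECTED):
    """Lower-case every token except the exact protected acronyms."""
    return [t if t in protected else t.lower() for t in tokens]

tokens = ["The", "US", "team", "asked", "us", "to", "Leave"]
print([t.lower() for t in tokens])  # naive: "US" and "us" become indistinguishable
print(lowercase_tokens(tokens))     # "US" survives, everything else is lower-cased
```

The catch is that a protected list also preserves words typed in all caps for emphasis (“IT IS DONE”), so the list needs to fit the corpus.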

Stopword


A majority of the words in a given text are connecting parts of a sentence rather than words that convey subjects, objects or intent. Words like “the” or “and” can be removed by comparing the text to a list of stopwords.

IN:

[‘He’, ‘did’, ‘not’, ‘try’, ‘to’, ‘navigate’, ‘after’, ‘the’, ‘first’, ‘bold’, ‘flight’, ‘,’, ‘for’, ‘the’, ‘reaction’, ‘had’, ‘taken’, ‘something’, ‘out’, ‘of’, ‘his’, ‘soul’, ‘.’]

OUT:

[‘try’, ‘navigate’, ‘first’, ‘bold’, ‘flight’, ‘,’, ‘reaction’, ‘taken’, ‘something’, ‘soul’, ‘.’]

In the example above, stopword removal reduced the list from 23 tokens to 11. Note, however, that the word “not” was dropped. Depending on the task, this could be a large problem, since “not” flips the meaning of the sentence. One might create a stopword dictionary manually or use a prebuilt library, depending on the sensitivity required.
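The comparison itself is a simple set lookup. The sketch below uses a small, hand-picked stopword set. This set is a hypothetical subset chosen for this example; prebuilt lists such as NLTK’s English list are much longer. The sketch lower-cases each token before checking it, so “He” matches “he”. It reproduces the 23-to-11 reduction shown above and shows how to keep “not” by removing it from the list.

```python
# Hypothetical, hand-picked stopword subset for this example only.
STOPWORDS = {"a", "an", "and", "the", "he", "his", "did", "not", "to", "after",
             "for", "had", "out", "of", "it", "is", "me", "that", "be"}

def remove_stopwords(tokens, stopwords=STOPWORDS):
    """Drop tokens whose lower-cased form is in the stopword set."""
    return [t for t in tokens if t.lower() not in stopwords]

tokens = ["He", "did", "not", "try", "to", "navigate", "after", "the", "first",
          "bold", "flight", ",", "for", "the", "reaction", "had", "taken",
          "something", "out", "of", "his", "soul", "."]
print(remove_stopwords(tokens))                    # 11 tokens remain
print(remove_stopwords(tokens, STOPWORDS - {"not"}))  # keeps the negation
```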

Tokenization


Tokenization describes splitting paragraphs into sentences, or sentences into individual words. For the former, Sentence Boundary Disambiguation (SBD) can be applied to create a list of individual sentences. This relies on pre-trained, language-specific algorithms such as NLTK’s Punkt models.

Sentences can be split into individual words and punctuation through a similar process. Most commonly the split happens on whitespace, with punctuation separated out as its own tokens. For example:

IN:

“He did not try to navigate after the first bold flight, for the reaction had taken something out of his soul.”

OUT:

[‘He’, ‘did’, ‘not’, ‘try’, ‘to’, ‘navigate’, ‘after’, ‘the’, ‘first’, ‘bold’, ‘flight’, ‘,’, ‘for’, ‘the’, ‘reaction’, ‘had’, ‘taken’, ‘something’, ‘out’, ‘of’, ‘his’, ‘soul’, ‘.’]

This can cause problems when a word is abbreviated, truncated (as in contractions like “didn’t”) or possessive. Proper nouns may also suffer when names contain punctuation (like O’Neil).
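Both kinds of splitting can be approximated with regular expressions. The sketch below is a naive stand-in, not NLTK’s Punkt model. `split_sentences` breaks after “.”, “!” or “?” when followed by whitespace. `tokenize` keeps runs of word characters together and gives every other non-space character its own token. It reproduces the word list above and also shows the failure cases just described.

```python
import re

def split_sentences(paragraph):
    """Naive boundary rule: split after ., ! or ? when followed by whitespace."""
    return re.split(r"(?<=[.!?])\s+", paragraph.strip())

def tokenize(sentence):
    """Runs of word characters become tokens; any other non-space char stands alone."""
    return re.findall(r"\w+|[^\w\s]", sentence)

print(tokenize("He did not try to navigate after the first bold flight, "
               "for the reaction had taken something out of his soul."))
print(split_sentences("Dr. Smith arrived. He sat down."))  # wrongly splits after "Dr."
print(tokenize("O'Neil didn't"))                            # names and contractions break apart
```

A trained model such as Punkt learns that “Dr.” usually does not end a sentence, which is why it is preferred over a single rule like this one.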

Parts of Speech Tagging


Understanding parts of speech can make a difference in determining the meaning of a sentence. Part-of-speech (POS) tagging often requires looking at the preceding and following words, combined with either a rule-based or a stochastic method. The tags can then be combined with other processes for further feature engineering.

IN:

[‘And’, ‘from’, ‘their’, ‘high’, ‘summits’, ‘,’, ‘one’, ‘by’, ‘one’, ‘,’, ‘drop’, ‘everlasting’, ‘dews’, ‘.’]

OUT:

[(‘And’, ‘CC’), (‘from’, ‘IN’), (‘their’, ‘PRP$’), (‘high’, ‘JJ’), (‘summits’, ‘NNS’), (‘,’, ‘,’), (‘one’, ‘CD’), (‘by’, ‘IN’), (‘one’, ‘CD’), (‘,’, ‘,’), (‘drop’, ‘NN’), (‘everlasting’, ‘VBG’), (‘dews’, ‘NNS’), (‘.’, ‘.’)]

Definitions of Parts of Speech

The tags above come from the Penn Treebank tag set. For example, in (‘their’, ‘PRP$’):

PRP$: pronoun, possessive (her, his, mine, my, our, ours, their, thy, your)
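Libraries such as NLTK ship trained (stochastic) taggers, for example `nltk.pos_tag`, which needs a downloaded model. The toy tagger below makes the rule-based idea concrete without any dependencies. It combines three pieces:

- a hypothetical lexicon of closed-class words
- suffix rules for unknown words
- two context rules that look at the neighboring tags

The lexicon, rules and function names are illustrative assumptions and cover only a tiny fraction of the tag set.

```python
import re

# Hypothetical closed-class lexicon with Penn Treebank tags (tiny, illustrative subset).
LEXICON = {
    "and": "CC", "or": "CC", "but": "CC",
    "from": "IN", "by": "IN", "of": "IN", "in": "IN",
    "the": "DT", "a": "DT", "an": "DT",
    "their": "PRP$", "his": "PRP$", "her": "PRP$", "my": "PRP$",
    "to": "TO", "will": "MD", "can": "MD",
}
NUMBER_WORDS = {"one", "two", "three"}

def initial_tag(word):
    """Lexicon lookup first, then guesses based on the word's shape and suffix."""
    lower = word.lower()
    if lower in LEXICON:
        return LEXICON[lower]
    if word in {".", "!", "?"}:
        return "."                 # sentence-final punctuation
    if re.fullmatch(r"[^\w\s]+", word):
        return word                # other punctuation is its own tag, e.g. ','
    if lower in NUMBER_WORDS or lower.isdigit():
        return "CD"
    if lower.endswith("ing"):
        return "VBG"
    if lower.endswith("ly"):
        return "RB"
    if lower.endswith("s") and len(lower) > 3:
        return "NNS"
    return "NN"                    # default guess: singular noun

def rule_based_tag(tokens):
    tags = [initial_tag(t) for t in tokens]
    # Context rules that look at the preceding and following tags.
    for i in range(len(tags)):
        prev_tag = tags[i - 1] if i > 0 else None
        next_tag = tags[i + 1] if i + 1 < len(tags) else None
        if tags[i] == "NN" and prev_tag in ("TO", "MD"):
            tags[i] = "VB"         # "to drop", "will drop"
        elif tags[i] == "NN" and prev_tag in ("DT", "PRP$") and next_tag in ("NN", "NNS"):
            tags[i] = "JJ"         # "their high summits"
    return list(zip(tokens, tags))

line = ["And", "from", "their", "high", "summits", ",", "one", "by", "one", ",",
        "drop", "everlasting", "dews", "."]
print(rule_based_tag(line))
```

On the example line it returns the same tags as the OUT list. That includes tagging “drop” as a noun, even though it is the verb in that line. Neither rule sets nor trained taggers are immune to unusual word order.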

Stemming


Much of natural language machine learning is concerned with the sentiment of a text. Stemming is a process in which words are reduced to a root by removing inflection, dropping unnecessary characters (usually a suffix). There are several stemming models, including Porter and Snowball. The results can be used to identify relationships and commonalities across large datasets.

IN:

[“It never once occurred to me that the fumbling might be a mere mistake.”]

OUT:

[‘it’, ‘never’, ‘onc’, ‘occur’, ‘to’, ‘me’, ‘that’, ‘the’, ‘fumbl’, ‘might’, ‘be’, ‘a’, ‘mere’, ‘mistake.’]

It is easy to see how these reductions may produce a “root” that isn’t an actual word. This doesn’t necessarily hurt its usefulness, but there is a danger of “overstemming”, where words like “universe” and “university” are reduced to the same root, “univers”. Note also that ‘mistake.’ kept its period because the sentence was split on whitespace only.
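In practice NLTK’s `PorterStemmer` or `SnowballStemmer` would be used. To show the mechanics, the sketch below is a deliberately simplified suffix stripper, using a hypothetical rule set rather than the Porter or Snowball algorithm. It applies three rules:

- it removes the first matching suffix
- it refuses to leave a stem shorter than three letters
- it undoes consonant doubling after “-ed” and “-ing”

Even this crude version produces non-word roots such as “onc” and “fumbl”, as well as the “universe”/“university” collision described above.

```python
import re

# Hypothetical, heavily simplified suffix list (NOT the Porter or Snowball rules).
# Suffixes are tried in this order and only the first match is stripped.
SUFFIXES = ("ing", "ity", "ed", "ly", "es", "s", "e")
MIN_STEM = 3  # never cut a word down to fewer than 3 characters

def toy_stem(word):
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= MIN_STEM:
            stem = word[: -len(suffix)]
            # Undo consonant doubling after -ed/-ing ("occurred" -> "occurr" -> "occur"),
            # but leave vowels and l, s, z alone ("falling" -> "fall", "seeing" -> "see").
            if suffix in ("ing", "ed") and stem[-1] == stem[-2] and stem[-1] not in "aeioulsz":
                stem = stem[:-1]
            return stem
    return word

sentence = "It never once occurred to me that the fumbling might be a mere mistake."
print([toy_stem(w) for w in re.findall(r"\w+", sentence)])
print(toy_stem("universe"), toy_stem("university"))  # both collapse to "univers"
```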

Lemmatization


Lemmatization is an alternative to stemming for removing inflection. It determines the part of speech and uses WordNet’s lexical database of English. As a result, lemmatization reduces each word to an actual dictionary form (its lemma) and can get better results.

The stemmed form of leafs is: leaf

The stemmed form of leaves is: leav

The lemmatized form of leafs is: leaf

The lemmatized form of leaves is: leaf

Lemmatization is a more intensive, and therefore slower, process, but it is more accurate. Stemming may be more useful in database queries, whereas lemmatization may work much better when trying to determine text sentiment.
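NLTK exposes WordNet lemmatization through `WordNetLemmatizer().lemmatize(word, pos)`, which requires the WordNet corpus to be downloaded. The dependency-free sketch below is loosely modeled on how WordNet-based lemmatizers work. It first checks an exception list of irregular forms. It then tries suffix substitutions and accepts a candidate only if it is a known dictionary word. The tiny `VOCAB` and `EXCEPTIONS` tables are hypothetical stand-ins for WordNet. Because every answer must be a real word, “leaves” becomes “leaf” rather than “leav”, and the part of speech changes the result.

```python
# Hypothetical mini-lexicon standing in for WordNet (illustration only).
VOCAB = {
    "n": {"leaf", "mouse", "box", "dew", "summit"},
    "v": {"leave", "fumble", "be", "drop"},
}
# Irregular forms that suffix rules cannot recover.
EXCEPTIONS = {
    "n": {"leaves": "leaf", "mice": "mouse"},
    "v": {"was": "be", "were": "be"},
}
# (suffix, replacement) pairs tried in order; a candidate only counts if it is a known word.
RULES = {
    "n": [("ses", "s"), ("xes", "x"), ("ies", "y"), ("s", "")],
    "v": [("ies", "y"), ("es", "e"), ("es", ""), ("ed", "e"), ("ed", ""),
          ("ing", "e"), ("ing", ""), ("s", "")],
}

def lemmatize(word, pos="n"):
    word = word.lower()
    if word in EXCEPTIONS[pos]:
        return EXCEPTIONS[pos][word]
    if word in VOCAB[pos]:
        return word
    for suffix, replacement in RULES[pos]:
        if word.endswith(suffix):
            candidate = word[: -len(suffix)] + replacement
            if candidate in VOCAB[pos]:
                return candidate
    return word  # unknown words come back unchanged, never as a non-word like "leav"

print(lemmatize("leafs"), lemmatize("leaves"))  # both nouns -> "leaf"
print(lemmatize("leaves", pos="v"))             # as a verb -> "leave"
```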

Count / Density


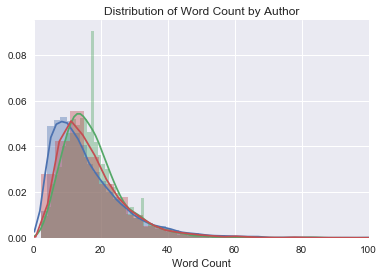

Perhaps one of the more basic tools for feature engineering is adding counts: word counts, sentence counts, punctuation counts and industry-specific word counts. These can greatly help in prediction or classification. There are multiple statistical approaches, and their relevance depends heavily on context.
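Here is a minimal sketch of a few of these features using only the standard library. The regular expressions are simple assumptions: a “word” is a run of letters, digits or apostrophes, and a sentence ends at “.”, “!” or “?”. `DOMAIN_TERMS` is a hypothetical industry vocabulary. Dividing a count by the word count turns it into a density, which makes documents of different lengths comparable.

```python
import re
import string

# Hypothetical industry-specific vocabulary; replace with terms from your own domain.
DOMAIN_TERMS = {"flight", "navigate", "gate"}

def count_features(text, domain_terms=DOMAIN_TERMS):
    """Simple count and density features for one document."""
    words = re.findall(r"[A-Za-z0-9']+", text)
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    domain_hits = sum(w.lower() in domain_terms for w in words)
    return {
        "word_count": len(words),
        "sentence_count": len(sentences),
        "punctuation_count": sum(ch in string.punctuation for ch in text),
        "domain_term_count": domain_hits,
        "domain_term_density": domain_hits / len(words) if words else 0.0,
    }

print(count_features("The flight was late. Navigate to gate 4, please!"))
```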

Word Embedding/Text Vectors


Word embedding is a modern way of representing words as vectors. Its aim is to map high-dimensional word features (for example, one dimension per vocabulary word) into low-dimensional, dense feature vectors. In other words, it places each word at a coordinate in a vector space where words that appear in similar contexts in the training corpus end up closer together. These spaces typically have tens to hundreds of dimensions; two-dimensional X and Y plots of embeddings are projections made for visualization. Word2Vec and GloVe are two of the most common models for converting text to vectors.
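Training Word2Vec or GloVe is beyond a short example. Still, the intuition that words used in similar contexts get similar vectors can be shown with raw co-occurrence counts and cosine similarity. This is a count-based stand-in, not Word2Vec or GloVe: its vectors are sparse and have one dimension per vocabulary word, whereas those models learn dense, low-dimensional vectors. The corpus and the `window=1` setting are made-up assumptions for illustration.

```python
import math
from collections import Counter, defaultdict

def cooccurrence_vectors(sentences, window=1):
    """Represent each word by counts of the words within `window` positions of it."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
                if j != i:
                    counts[word][tokens[j]] += 1
    vocab = sorted(counts)
    # One dimension per vocabulary word: sparse and high-dimensional, unlike Word2Vec/GloVe.
    return {word: [counts[word][context] for context in vocab] for word in vocab}

def cosine(u, v):
    """Cosine similarity: 1.0 for vectors pointing the same way, 0.0 for no overlap."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

# Hypothetical toy corpus.
corpus = ["the cat drinks milk", "the dog drinks water",
          "the cat chases the dog", "a car needs fuel"]
vectors = cooccurrence_vectors(corpus)
print(round(cosine(vectors["cat"], vectors["dog"]), 3))  # 0.913: similar contexts
print(cosine(vectors["cat"], vectors["car"]))            # 0.0: no shared contexts
```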

Conclusion


While this is far from a comprehensive list, preparing text is a complicated art that requires choosing the optimal tools given both the data and the question you are asking. Many pre-built libraries and services can help, but some tasks may still require manually mapping terms and words.

Once a dataset is ready, supervised and unsupervised machine learning techniques can be applied. My initial experiments, which will be their own article, show a sharp difference between applying preprocessing techniques to a single string and applying them to large dataframes. Tuning the steps for optimal efficiency will be key to staying flexible as the data scales.



Originally appeared in Towards Data Science