Ready to learn Data Science? Browse Data Science Training and Certification courses developed by industry thought leaders and Experfy in Harvard Innovation Lab.

Beyond Numerical and Categorical

In this article, I propose a more useful taxonomy of data groupings for machine learning practitioners: the 7 Data Types.

Online courses, tutorials, and articles on encoding, imputing, and feature engineering for machine learning generally treat data as either categorical or numeric. Binary and time series data sometimes get called out and, once in a while, the term ordinal sneaks into the conversation. However, a more refined framework is needed to provide a richer common lexicon for thinking and communicating about data in machine learning.

A framework along the lines of the one I propose in this article should lead practitioners, especially newer practitioners, to develop better models faster. With 7 Data Types to reference we should all be able to more quickly evaluate and discuss the encoding options and imputation strategies available.

TL;DR;

Think and talk about each of your features as one of the following seven data types to save time and transfer knowledge:

- Useless

- Nominal

- Binary

- Ordinal

- Count

- Time

- Interval

The current state

In the machine learning world, data is nearly always split into two groups: numerical and categorical.

Numerical data is used to mean anything represented by numbers (floating point or integer). Categorical data generally means everything else and in particular discrete labeled groups are often called out. These two primary groupings — numerical and categorical — are used inconsistently and don’t provide much direction as to how the data should be manipulated.

Data generally needs to be put into numeric form for machine learning algorithms to use the data to make predictions. In machine learning guides categorical string data is usually one-hot-encoded (aka dummy encoded). Dan Becker refers to it as “The Standard Approach for Categorical Data” in Kaggle’s Machine Learning tutorial series.

Oftentimes in tutorials it is assumed that all data that arrived in numeric form is ready to be used as is and that all string data needs one-hot-encoded. While many tutorials do dig a bit deeper into the types of data (such as treating time, binary, or text data specially), these deeper dives often are not done in a systematic way. In fact, I have found no clear guidelines for transforming the data based on a taxonomy like the one proposed in this article. If you know of such data science resources, please share in the comments 🙂

Coming to machine learning after having been trained in social science methods and statistics in graduate school, I found it surprising that there wasn’t much talk about ordinal data. For example, I kept finding myself trying to figure out the best way to encode and impute ordinal scale data in string form and nominal (truly categorical) data in numeric form. Without clear and consistent categories for types of data, this took more time than necessary.

The 7 Data Types was inspired by Steven’s typology of measurement scales and my own observations about the types of data that need special consideration for machine learning models. Let’s first look at the measurement scales in use and where they came from before introducing the 7 Data Types.

Stevens’ typology of measurement scales

Stanley Smith Stevens

In the 1960s Harvard Psychologist Stanley Smith Stevens created four measurement scales for data: ratio, interval, ordinal, and nominal.

- Ratio (equal spaces between values and a meaningful zero value — mean makes sense)

- Interval (equal spaces between values, but no meaningful zero value — mean makes sense)

- Ordinal (first, second, third values, but not equal space between first and second and second and third — median makes sense)

- Nominal (no numerical relationship between the different categories — mean and median are meaningless)

Steven’s typology became extremely popular, especially in the social sciences. Since then, other researchers have further expanded the number of scales (Mosteller & Tukey) including as many as ten categories (Chrisman). Nonetheless, Steven’s typology has reigned in the social sciences and is occasionally referenced in data science, (e.g. here), despite not providing clear guidance in many cases.

Other machine learning and data science practitioners have adopted parts of Steven’s typology in various ways, leading to a variety of nomenclatures. For example, Hastie, Tibshirani, and Friedman in The Elements of Statistical Learning 2nd Ed. combine ratio and interval into quantitative and break out ordinal and categorical in one example (p. 504). Elsewhere Hastie et. al. refer to ordinal as ordered categorical variables and categorical variables as qualitative, discrete, or factors (p. 10). Statistics for Dummies breaks the types of data into numerical, ordinal, and categorical — lumping ratio with intervalunder numerical. DataCamp refers to continuous, ordinal, and nominal data types in this tutorial.

A classification that occasionally comes up in statistics is between discrete and continuous variables. Discrete data has distinct values while continuous data has an infinite number of potential values in a range.

But generally in machine learning numerical and categorical is the divide that you’ll see (e.g. here). The popular Pandas library’s lumps together ordinal and nominal data in it’s optional Category dtype. Overall, the current lexicon for machine learning data types is inconsistent and confusing. And what is unclear is slow to learn. What can be done to improve things?

7 Primary Data Types for machine learning

Although it may seem like a bold goal to improve the lexicon of data types in machine learning, I hope that this article will provide a useful taxonomy of groups that for more actionable steps for data scientists. By providing clear categories, I hope to help my colleagues, especially newcomers, more quickly build models and discover new options for improving model performance.

I propose the following taxonomy of 7 Data Types most useful for machine learning practitioners:

- Useless

- Nominal

- Binary

- Ordinal

- Count

- Time

- Interval

1. Useless

Useless data is unique, discrete data with no potential relationship with the outcome variable. A useless feature has high cardinality. An example would be bank account numbers that were generated randomly.

That’s useless for machine learning, but kind of cool

If a feature consists of unique values with no order and no meaning, that feature is useless and need not be included when fitting a model.

2. Nominal

Nominal data is made of discrete values with no numerical relationship between the different categories — mean and median are meaningless. Animal species is one example. For example, pig is not higher than bird and lower than fish.

Nominal data: animal groups

Nationality is another example of nominal data. There is group membership with no numeric order — being French, Mexican, or Japanese does not in itself imply an ordered relationship.

You can one-hot-encode or hash nominal features. Do not ordinal encode them because the relationship between the groups cannot be reduced to a monotonic function. The assigning of values would be random.

3. Ordinal

Ordinal data are discrete integers that can be ranked or sorted. A defining characteristic is that the distance between any two numbers is not known.

For example, the distance between first and second may not be the same as the distance between second and third. Think of a 10k race. The winner might have run 30:00 minutes, second place might have run 30:01 minutes and third place might have run 400:00 minutes. Without the time data, we don’t know the relative distance between the ranks.

Broadly speaking, ordinal data can be encoded one of three ways. It can be assumed to be close enough to interval data, with relatively equal magnitudes between the values, to treat it as such. Social scientists make this assumption all the time with Likert scales. For instance, on a scale from 1 to 7, 1 being extremely unlikely, 4 being neither likely nor unlikely and 7 being extremely likely, how likely are you to recommend this movie to a friend? Here the difference between 3 and 4 and the difference between 6 and 7 can be reasonably assumed to be similar.

A second option is to treat ordinal data as nominal data, where each category has no relationship to any other. One-hot encoding it or a similar scheme can be used in that case.

A third option that will be explored in more detail in a future article is something like reverse Helmert coding, which can be used to encode various potential magnitudes between the values.

There’s merit in categorizing ordinal data as it’s own type of data.

4. Binary

Binary data is discrete data that can be in only one of two categories — either yes or no, 1 or 0, off or on, etc. Binary can be thought of as a special case of ordinal, nominal, count, or interval data.

Binary data is a very common outcome variable in machine learning classification problems. For example, we may want to create a supervised learning model to predict whether a tumor is malignant or benign.

Binary data is common and merits its own category when thinking about your data.

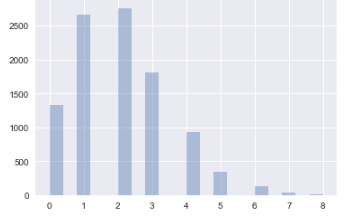

5. Count

Count data is discrete whole number data — no negative numbers here. Count data often has many small values, such as zero and one.

Count data often follows a Poisson distribution.

Poisson distribution drawn from random numbers

Count data is usually treated similarly to interval data, but it is unique enough and widespread enough to merit its own category.

6. Time

Time data is a cyclical, repeating continuous form of data. The relevant time features can be any period— daily, weekly, monthly, annual, etc.

Time series data often takes some wrangling and manipulation to create features with the periods that might be meaningful for your model. The Pandas python library was designed with time data in mind. Financial and marketing data often has a time component that is very important to capture in a model

Missing time data is often filled using unique methods appropriate to seasonal or daily data (e.g. SARIMAX). Time series data is definitely worth thinking about in its own separate mental bucket.

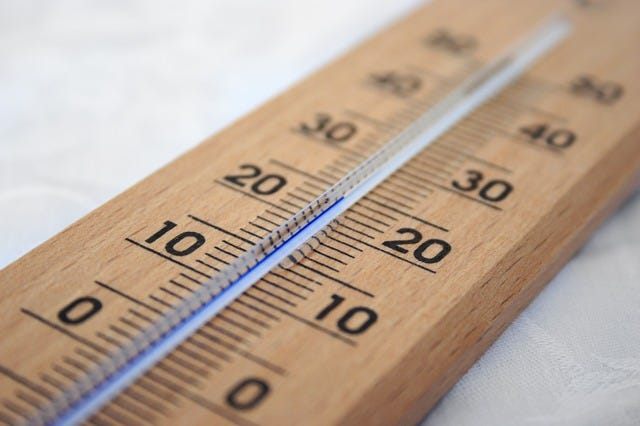

7. Interval

Interval data has equal spaces between the numbers and does not represent a temporal pattern. Examples include percentages, temperatures, and income.

Interval data is the most precise measurement scale data and very common. Although each value is a discrete number, e.g. 3.1 miles, it doesn’t generally matter for machine learning purposes whether it is a continuous scale (e.g. infinitely smaller measurement sizes are possible) nor does it matter whether there is an absolute zero.

Interval data is generally easy to work with but you may want to create bins to cut down on the number of ranges.

And there you have the 7 Data Types.

- Useless

- Nominal

- Binary

- Ordinal

- Count

- Time

- Interval

Although many experienced machine learning practitioners certainly do think of some of the types of data described with these labels differently in practice, a clear taxonomy for the field is lacking. I posit that using the taxonomy above will help folks more quickly evaluate options for encoding, imputing, and analyzing their data.

Note that most of these seven categories could show up in your raw data in most any form. We aren’t talking about float64 vs bool: the Python type or (Numpy or Pandas dtype) is not the same as the type of data discussed here.

Are these the right seven categories?

Typologies were invented to be debated 🙂

While constructing the 7 Data Types I asked myself if it was really useful to separate count data from interval data. In the end, I believe it is because text data is such a common form of count data and because count data does have some common different statistical methods.

Similarly, binary data could be seen as a subtype of all the higher scale types. Nonetheless binary data is quite common in machine learning and binary outcome variables have some potential machine learning algorithms that other multi-classification tasks do not. You also don’t need to take extra steps with encoding binary data.

If you would advocate for a different taxonomy, please do so in the comments.

How will I remember these 7 Data Types?

- Useless

- Nominal

- Binary

- Ordinal

- Count

- Time

- Interval

Remembering is the key to learning. RNBOCTI doesn’t exactly roll of the tongue.

One of the eight or nine planets

Taking a cue from the 9 planets mnemonic, let’s make a mnemonic to help remember the 7 Data Types.

Ugly Noisy Bonobos and Old Cats Take Ice?

or

Uppity Noisy Boys Often Can’t Take Instruction

or

Under New Beds Old Cows Turn In

You can probably do better:) Come up with your own mnemonic and share it in the comments.

Should I spread the word about the 7 Data Types?

Definitely.

In this article I made the case for classifying types of data as one of seven categories to create a more coherent lexicon for thinking about and communicating about data in data science. These categories have practical application for thinking about encoding options and imputation strategies that I’ll explore in future articles.

When we all use the same terms to mean the same thing we save time learning and transferring knowledge. Doesn’t that sound wonderful?