
I wanted to figure out where I should train my deep learning models online for the lowest cost and least hassle. I wasn’t able to find a good comparison of GPU cloud service providers, so I decided to make my own.

Feel free to skip to the pretty charts if you know all about GPUs and TPUs and just want the results.

I’m not looking at serving models in this article, but I might in the future. Follow me to make sure you don’t miss out.

Deep Learning Chip Options

Let’s briefly look at the types of chips available for deep learning. I’ll simplify the major offerings by comparing them to Ford cars.

CPUs alone are really slow for deep learning. You do not want to use them. They are fine for many machine learning tasks, just not deep learning. The CPU is the horse and buggy of deep learning.

Horse and Buggy

GPUs are much faster than CPUs for most deep learning computations. NVIDIA makes most of the GPUs on the market. The next few chips we’ll discuss are NVIDIA GPUs.

One NVIDIA K80 is about the minimum you need to get started with deep learning and not have excruciatingly slow training times. The K80 is like the Ford Model A — a whole new way to get around.

Ford Model A

NVIDIA P4s are faster than K80s. They are like the Ford Fiesta. Definitely an improvement over a Model A. They aren’t super common.

Ford Fiesta. Credit: By Rudolf Stricker CC-BY-SA-3.0 (http://creativecommons.org/licenses/by-sa/3.0/), from Wikimedia Commons

The P100 is a step up from the Fiesta. It’s a pretty fast chip. Totally fine for most deep learning applications.

Ford Taurus. Credit: ford.com

NVIDIA also makes a number of consumer-grade GPUs often used for gaming or cryptocurrency mining. Those GPUs generally work okay, but cloud service providers rarely offer them.

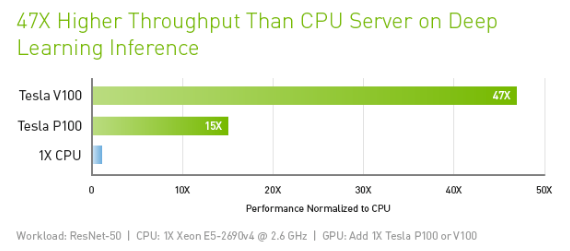

The fastest NVIDIA GPU on the market today is the Tesla V100 (no relation to the Tesla car company). The V100 is about 3x faster than the P100.

https://www.nvidia.com/en-us/data-center/tesla-v100/

The V100 is like the Ford Mustang: fast. It’s your best option if you are using PyTorch right now.

Ford Mustang. Credit: Ford.com

If you are on Google Cloud and using TensorFlow/Keras, you can also use Tensor Processing Units (TPUs). Google Cloud, Google Colab, and Paperspace (using Google Cloud’s machines) have TPU v2s available. They are like the Ford GT race car for matrix computations.

Ford GT. Credit: Ford.com

TPU v3s are available to the public only on Google Cloud. TPU v3s are the fastest chips you can find for deep learning today. They are great for training Keras/TensorFlow deep learning models. They are like a jet car.

Jet car. Credit: By Gt diesel — Own work, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=30853801

If you want to speed up training, you can add multiple GPUs or TPUs. However, you’ll pay more as you increase the quantity.

Frameworks

There are several options for deep learning frameworks. I wrote an article about which are most popular and most in demand.

TensorFlow has the most market share. Keras is the established high-level API, also developed by Google, that runs on TensorFlow and several other frameworks. PyTorch is the pythonic, Facebook-backed cool kid, and FastAI is the higher-level API for PyTorch that makes it easy to train world-class models in a few lines of code.

The models were trained with PyTorch v1.0 Preview and FastAI v1.0. Both are bleeding edge, but they allow you to do really cool things very quickly.

The Contestants

I tested cloud providers with GPUs who have a strong value proposition for a large subset of deep learning practitioners. Requirements for inclusion:

- Pay as you go in hourly or smaller increments.

- Installation and configuration can’t be awful. Nonetheless, I included AWS because they have such a large market share.

Without further ado, if you are starting out in deep learning here are the cloud providers you need to know.

Google Colab

Google Colab is free. It’s a Jupyter notebook system with nice UX. It integrates with GitHub and Google Drive. Colab also allows collaborators to leave comments in the notebook.

Colab is super fast to get started with for Keras or TensorFlow, which isn’t surprising given those open source libraries were homegrown by Google. PyTorch v1.0 Preview takes some configuration and is a bit buggy. I had to turn off parallelism for training with FastAI v1.0 to save memory when using ResNet50 with decent-size resolution images.

Colab has both GPUs and TPUs available. You can’t use TPUs with PyTorch as of October 25, 2018. However, TPU support for PyTorch (and thus FastAI) is in the works: Google engineers are prototyping it now.

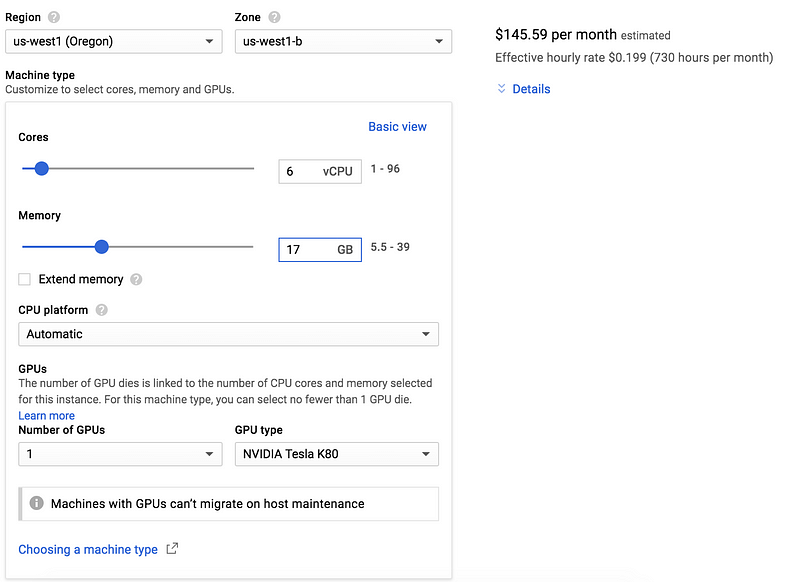

Google Cloud

Google Cloud Compute Engine is a more powerful and customizable cloud option than Colab. Like AWS, it requires some configuration and a frustrating number of quota increases, and the total bill is a bit tougher to estimate. Compared with AWS, the Google Cloud interface is easier to use and configure, and TPUs are available for TensorFlow/Keras projects.

Pricing changes as you change Google Cloud instance settings

Preemptible instances save a lot of money, but risk being interrupted — they are fine for most learning tasks.

Google Cloud is a great place to do your deep learning if you are eligible for the $300 initial credit and don’t mind some configuration. If you scale, their pricing, security, server options, and ease of use make them a frontrunner.

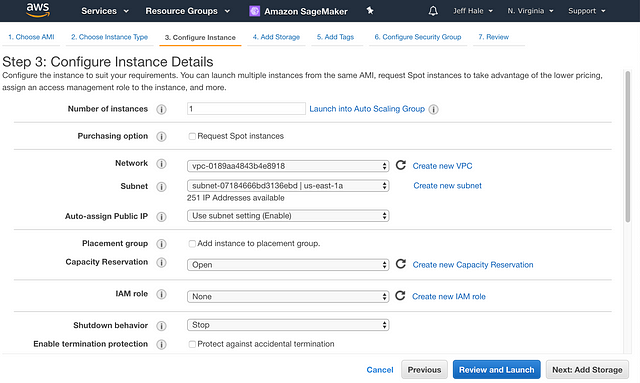

AWS

AWS EC2 is not the easiest thing to configure, but it is so popular that every deep learning practitioner should go through the configuration pain at some point. In my article looking at the most popular tools in job listings for data scientists, AWS finished in the top 10.

AWS’s entry-level GPU offering is p2.xlarge, AWS speak for an NVIDIA Tesla K80 with 4 CPUs and 61 GB of RAM. You can save money with spot pricing, which was $0.28 per hour as of late October 2018, although there is a risk that no instances are available. Nearly every time I’ve tried for a spot instance at just above a recent price, I received one.

AWS instance configuration fun

AWS is scalable, secure, and reliable. It runs much of the internet. Unfortunately, it’s also a pain to set up. I suggest you not start your deep learning journey with AWS unless you are already familiar with the service or are an experienced programmer.

Paperspace

It’s super quick to get a Jupyter Notebook running on a Paperspace Gradient. It’s also easy to set up PyTorch v1.0 Preview with FastAI v1.0.

Paperspace has Google’s TPU v2s available. It is the only company other than Google where I’ve seen the ability to rent them. There’s a good post on that functionality here. The TPUs are a bit pricey at $6.50 compared to Google Cloud, where you can get preemptible TPU v3s for less than half that.

Paperspace’s website indicates that they take security seriously. They have an SLA. The NYC-based Y Combinator alum recently raised a $13M round. Paperspace has its own servers and uses other folks’ servers too. They are legit.

vast.ai

vast.ai is a GPU sharing economy option. It is a marketplace to match folks who need GPUs with third party individuals who list their machines on vast.ai. It has a really nice dashboard and really nice prices.

The third-party whose machine you are using theoretically could get to your data, although in practice that’s not easy. See the discussion here.

Also the uptime likely will be lower than with a cloud infrastructure company. For example, the power or internet could go out at the source — which could be some person’s house. It’s fine for dog and cat classification, but not fine for patient medical records.

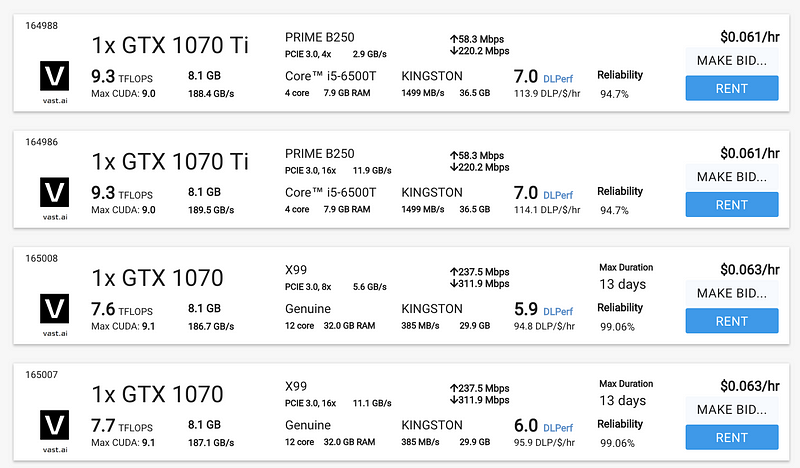

vast.ai has more info on the tech specs of the machines on their dashboard than I have seen from any other cloud provider. For $0.065 an hour I used a GTX 1070 Ti with about 99.5% expected uptime. That’s 6.5 cents per hour! It was quick to set up the latest PyTorch version. I don’t recommend making a bid for a lower price; just rent at the current price or you’re likely to lose your machine.

vast.ai Options

Other than the limited free options of Kaggle and Google Colab, vast.ai is the lowest cost option for decent performance.

Other Options

I looked at many other GPU options. I didn’t test every service on my list of known GPU cloud providers, because many of them were either not easy to set up quickly or not cost competitive.

If you’re studying machine learning, then you should spend some time on Kaggle. It has a great learning community with tutorials, contests, and lots of datasets. Kaggle provides free K80 GPUs with 12 GB of VRAM, 2 CPU cores, and 6 GB of RAM for 12 hours max. I find the site to be laggy, and their notebooks don’t have many of the shortcuts that Jupyter Notebooks usually have.

Additionally, uploading custom packages and working with files on Kaggle is tough. I suggest you not use Kaggle for deep learning training, but do engage with it for lots of other machine learning goodness.

I tried to use both IBM Watson and Microsoft Azure. They both failed the easy-to-set-up test miserably. I spent 30 minutes with each of them before deciding that was enough. Life is too short for unnecessary complexity when there are good alternatives.

Many of the services with quick deploys have good customer service and good UX, but don’t even mention data security or data privacy. VectorDash and Salamander are both quick deploys and fairly cost effective. Crestle is quick to deploy but pricier. FloydHub was much more expensive with a K80 cost over $1.00 per hour. snark.ai prices are inexpensive at under $.10 for basic machines, but I kept getting errors at startup and chat help hadn’t responded after two days (although they responded to an earlier question quickly).

Amazon SageMaker is more user friendly than AWS EC2, but not cost competitive with the other options in this list. It costs $1.26 for a basic K80 GPU setup like the p2.xlarge! It also requires quota increases to use, which can take days, I found.

Every cloud company should talk about security, SLAs, and data privacy prominently. Google, AWS, and Paperspace do this, but many of the smaller, newer companies do not.

Experiments

I trained a deep learning model on the popular cats vs. dogs image classification task using FastAI v1.0 in a Jupyter Notebook. This test provided meaningful time differentiation between the cloud provider instances. I used FastAI because it is able to train models on this task to extremely good validation set accuracy (about 99.5% generally) quickly, with few lines of code.

I measured time using the %%time magic command. Wall time was compared because that was the time Google Colab would output. Wall time was summed for the three operations: training with fit_one_cycle, unfreezing and fitting again with fit_one_cycle, and predicting with test time augmentation. ResNet50 was used for transfer learning. Note that the time for the initial training was taken on the second run, so that the time of downloading ResNet50 wouldn’t be included in the time calculation. The code I used to train the model was adapted from FastAI’s docs and is available here.
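For readers working outside a notebook, here is a minimal sketch of the same idea: record wall time for each of the three stages and sum them. It is not the code used for the benchmark. The three stage functions are hypothetical stand-ins (they only sleep) for the frozen `fit_one_cycle` run, the unfrozen `fit_one_cycle` run, and prediction with test time augmentation, and `wall_timer` is an illustrative helper name.

```python
import time
from contextlib import contextmanager


@contextmanager
def wall_timer(label, results):
    """Store the wall-clock seconds spent inside the `with` block under `label`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        results[label] = time.perf_counter() - start


# Hypothetical stand-ins for the three timed operations.
# Replace the bodies with the real training and prediction calls.
def train_frozen():
    time.sleep(0.01)


def train_unfrozen():
    time.sleep(0.01)


def predict_with_tta():
    time.sleep(0.01)


def run_benchmark():
    """Time each stage separately and add a summed total."""
    timings = {}
    with wall_timer("fit_frozen", timings):
        train_frozen()
    with wall_timer("fit_unfrozen", timings):
        train_unfrozen()
    with wall_timer("tta_predict", timings):
        predict_with_tta()
    timings["total"] = sum(timings.values())
    return timings


if __name__ == "__main__":
    for stage, seconds in run_benchmark().items():
        print(f"{stage}: {seconds:.2f} s")
```

To mirror the second-run rule above, call the first stage once untimed before running the benchmark, so that any one-time download is not counted.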

Results

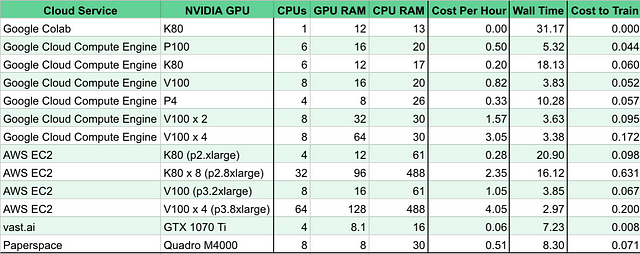

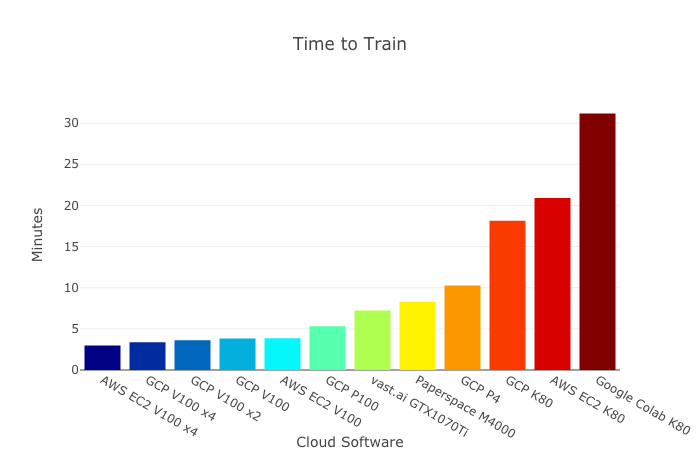

Here’s a chart showing the results by instance with the specs of the machines.

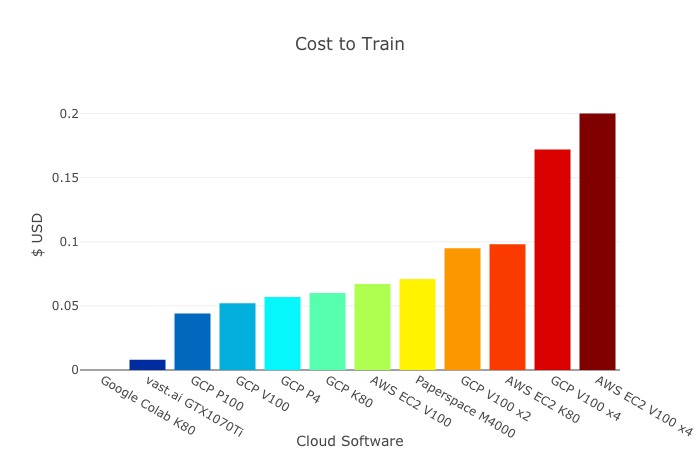

The data and charts are available in this Jupyter Notebook at Kaggle. Here are the results of the Cost to Train displayed graphically. GCP is short for Google Cloud Platform.

Google Colab was free, but it’s slow. Of those that cost something to train, vast.ai was by far the most cost effective.

I checked vast.ai pricing over several days and a machine with similar specs was generally available. One day I tested a similar machine that resulted in $.01 cost to train vs. $.008 for the first machine, so the results were very similar. If vast.ai saw a large jump in user demand without a similarly large increase in user hosts, prices would be expected to increase.

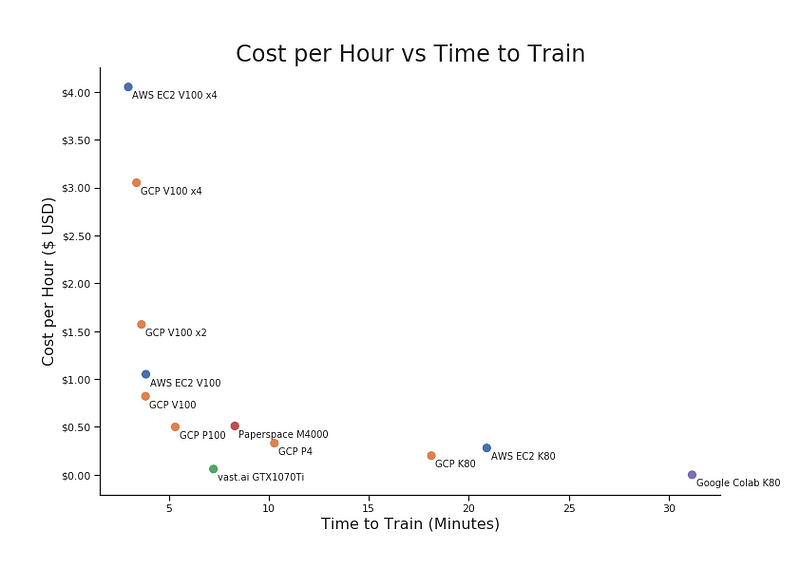

Let’s plot the Cost per Hour against the Time to Train.

The bottom left corner is where you want to be — optimizing cost and training time. vast.ai, Google Cloud’s single P100 and Google Cloud’s single V100 were strong options.
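One way to make "bottom left is best" concrete is to keep only the options that no other option beats on both axes. The sketch below does that. The `Option` type, the `efficient_frontier` function, and every number in `PLACEHOLDER_OPTIONS` are made up for illustration; they are not the measured results from the charts.

```python
from typing import List, NamedTuple


class Option(NamedTuple):
    name: str
    cost_per_hour: float     # dollars per hour
    minutes_to_train: float  # wall time in minutes


def efficient_frontier(options: List[Option]) -> List[Option]:
    """Return the options that are not dominated on cost per hour and training time.

    An option is dominated when another option is at least as good on both
    axes and strictly better on at least one of them.
    """
    frontier = []
    for cand in options:
        dominated = any(
            other.cost_per_hour <= cand.cost_per_hour
            and other.minutes_to_train <= cand.minutes_to_train
            and (
                other.cost_per_hour < cand.cost_per_hour
                or other.minutes_to_train < cand.minutes_to_train
            )
            for other in options
        )
        if not dominated:
            frontier.append(cand)
    return sorted(frontier, key=lambda o: o.cost_per_hour)


# Placeholder numbers only, NOT the benchmark results.
PLACEHOLDER_OPTIONS = [
    Option("cheap-slow", 0.10, 30.0),
    Option("mid", 1.00, 10.0),
    Option("fast-pricey", 5.00, 4.0),
    Option("bad-value", 4.00, 16.0),
]

if __name__ == "__main__":
    for opt in efficient_frontier(PLACEHOLDER_OPTIONS):
        print(opt.name, opt.cost_per_hour, opt.minutes_to_train)
```

With these placeholder values, "bad-value" drops out because "mid" is both cheaper and faster. The other three remain because each one trades cost for speed.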

If you really want speed, AWS’s V100 x4 is fastest of the options compared. Its cost to train was also the highest.

If you really don’t want to spend money, Google Colab’s K80 does the job, but slowly. Again, parallelization wasn’t possible for Colab. Presumably that will get resolved, and its training time would then be near 20 minutes, where Google Cloud’s K80 and AWS’s K80 came in. Colab would then be a better option, but still slow. Once TPUs work with PyTorch, Colab should be even better for PyTorch users.

Paperspace is slightly pricier than other options on the efficient frontier, but it reduces a lot of hassles with setup. Paperspace also has a very, very basic Standard GPU starting at $0.07 per hour, but the specs are so minimal (i.e. 512 MB GPU RAM) that it’s not really suitable for deep learning. Google Colab has a far better option for free.

Options far from the efficient frontier were excluded from the final charts. For example, AWS’s p2.8xlarge would be in the upper middle of the Cost Per Hour vs. Time to Train chart at over 16 minutes to train and $4.05 per hour for a spot instance. That is not a good value.
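The cost to train follows from the hourly price and the training time. Here is a minimal sketch of that arithmetic, assuming billing is prorated linearly with no minimum charge, which may not match every provider. `cost_to_train` is an illustrative helper name, and 16 minutes is used as a lower bound because the p2.8xlarge run took "over 16 minutes".

```python
def cost_to_train(price_per_hour: float, minutes_to_train: float) -> float:
    """Dollars spent on one training run, assuming linear proration of the hourly price."""
    return price_per_hour * minutes_to_train / 60.0


if __name__ == "__main__":
    # p2.8xlarge spot figures quoted above: $4.05 per hour, at least 16 minutes.
    lower_bound = cost_to_train(4.05, 16)
    print(f"p2.8xlarge: at least ${lower_bound:.2f} per run")
```

That works out to at least about $1.08 per run, compared with roughly a cent for the vast.ai machine.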

Time reported in this experiment doesn’t reflect time to SSH in and install the latest package versions if they aren’t already on the image. AWS took a while, at least 10 minutes, to get a running Jupyter Notebook, even after I had done it many times for this project.

Google Cloud was much faster to get running, partly because an image with PyTorch v1.0 Preview and FastAI v1.0 is available.

vast.ai takes a few minutes to spin up, but then with a click you’re in a vast.ai online Jupyter Notebook, which is super easy to use.

One limitation of this study is that an image classification task was the only one used. However, other deep learning training tasks would likely show similar results.

Recommendations

If you are new to this whole area and want to learn deep learning, I suggest you first learn Python, Numpy, Pandas, and Scikit-learn. My recommendations for learning tools are in this article.

If you are already a proficient programmer and know how to handle bugs in new code, I suggest you start deep learning with FastAI v1.0. The runnable docs are available now.

Google Colab is a great place to start if you are learning with Keras/TensorFlow. It’s free. If Colab starts offering better PyTorch v1.0 support, it could also be a good place to start if you’re learning FastAI or PyTorch.

If you’re building with FastAI or PyTorch v1.0 and not in need of data privacy, and you aren’t a pro with Google Cloud or AWS, I suggest you start with vast.ai. Its performance is good, it is extremely inexpensive, and it is relatively easy to set up. I wrote a guide to using vast.ai with FastAI here.

If you’re scaling, use Google Cloud. It is a complete package that is affordable and secure. Its UX is better than AWS’s and its configuration is less complex. The pricing for GPUs, CPUs, and TPUs can be estimated easily and doesn’t vary with demand. However, the storage and ancillary fees can be difficult to decipher. Additionally, you’ll need to request quota increases if you plan on running more powerful GPUs or TPUs.

Legal disclaimer: I’m not responsible if you forget to turn off your instance, don’t save your progress, or get hacked 😦.