The demand for data engineers is growing rapidly. According to hired.com the demand has increased by 45% in 2019. The median salary for Data Engineers in SF Bay Area is around $160k. So the question is: how to become a data engineer?

What Data Engineering is

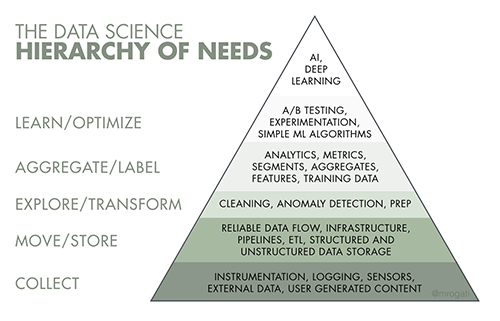

Data engineering is closely related to data as you can see from its name. But if data analytics usually means extracting insights from existing data, data engineering means the process of building infrastructure to deliver, store and process the data. According to The AI Hierarchy of Needs, the data engineering proccess is located at the very bottom: Collect, Move & Store, Data Preparation. So if your organization wants to be data/AI-driven then they should hire/train data engineers.

But what data engineers actually do? The amount of data is growing rapidly every single day. We are contemplating the new era where everybody can do a content from their mobile phone and other gadgets. Even small devices are connected to the Internet. Data engineers from the past were responsible for writing complex SQL queries, building ETL (extract, transform & load) processes using big enterprise tools like Informatica ETL, Pentaho ETL, Talend etc. But now the market demands more broader skillset. If you want to work as a data engineer you need to have:

- Intermediate knowledge of SQL and Python

- Experience working with cloud providers like AWS, Azure or GCP

- The knowledge of Java/Scala is a big plus

- Understading SQL/NoSQL databases (data modeling, data warehousing, performance optimization)

The skillset is very similar to what Backend engineers usually know. In reality if an organization is growing in terms of data the ideal candidate to transform into data engineer is a backend engineer.

The particular technologies and tools could differ due to company size, data volumes and data velocity. If we look at the FAANG for example, they usually require:

- Knowledge of Python, Java or Scala

- Experience working with Big data tools like Apache Hadoop, Kafka and Spark

- Solid knowledge of algorithms and data structures

- Undestanding of distributed systems

- Experience with Business Intelligence tools like Tableau, QlikView, Looker or Superset

Data Engineer's Skillset

Data engineering is an engineering area that is why the knowledge of computer science fundamentals is required, especially the understanding of most popular algorithms and data structures (Hello Mr. Cormen!).

Because data engineers deal with the data on a daily basis understading how databases work is a huge plus. For example, the most popular SQL databases like SQLite, PostgreSQL, MySQL use B-Tree data structure under the hood.

Algorithms & Data Structures

If you prefer video courses I would recommend to look at the Data Structures and Algorithms Specialization. I took these courses and think they are quite good as a starting point.

Speaking about talks I would highly recommend take a look at Alex Petrov’s presentation called What Every Programmer has to know about Database Storage:

Alex has a great series of posts related to databases:

Courses, video presentations are good but what about books? I would recommend the only book by Thomas Cormen and friends called Introduction to Algorithms. The most comprehensive reference on algorithms and data structures. To practice and strengthen your knowlegde go to leetcode.com and start solving problems. Practice makes perfect.

Databases are great, Carnegie Mellon University uploads their lessons to Youtube:

SQL — the lingua franca for databases

SQL was developed back in 70s and still is the most popular language to work with data. Periodically some experts claim that SQL is going to die very soon, but it is still alive despite many rumors. I think we will stick to SQL for another decade or two (or even more). If you look at the modern and popular databases you will see that almost all of them support SQL:

- PostgreSQL, MySQL, MS SQL Server, Oracle DB

- Amazon Redshift, Apache Druid, Yandex ClickHouse

- HP Vertica, Greenplum

In a big data ecosystem there are many different SQL engines: Presto (Trino), Hive, Impala etc. I would highly recommend to invest some time to master SQL.

If you are new to SQL, start with the Mode’s SQL guide: Introduction to SQL. If you feel comfortable you can continue with the DataCamp’s interactive courses. I would recommend these:

The best resources on SQL are Modern SQL and Use The Index, Luke! Practice makes perfect that is why go to Leetcode Databases problemset and start practicing By the way, do not forget to read my article on SQL window functions as well.

Programming: Python, Java and Scala

Python is a very popular programming language to build web apps as well as for data analytics & science. It has a very rich ecosystem and huge community. According to TIOBE Index Python is in the Top 3 widely used programming languages after C & Java.

Speaking about other 2 languages, many big data systems are written in Java or Scala:

- Apache Kafka (Scala)

- Hadoop HDFS (Java)

- Apache Spark (Scala)

- Apache Cassandra (Java)

- HBase (Java)

- Apache Hive, Presto in Java

In order to undestand how these systems work I would recommend to know the language in which they are written. The biggest concern with Python is its poor performance hence the knowledge of a more efficient language will be a big plus to your skillset.

If you are interested in Scala, I would recommend to take a look at Twitter’s Scala School. The book Programming in Scala by its creator is also a good starting point.

The Big Data Tools

There are lots of different technologies in a Big Data landscape. The most popular are:

- Apache Kafka is the leading message queue/event bus/event streaming

- Apache Spark is the unified analytics engine for large-scale data processing

- Apache Hadoop, the big data framework which consists of different tools, libraries and frameworks including distributed file system (HDFS), Apache Hive, HBase etc.

- Apache Druid is a real-time analytics database

It is really difficult to learn everything that is why focus on the most popular and learn fundamental concepts behind them. For example, back in 2013 Jay Kreps (co-founder of Apache Kafka) wrote the paper called The Log: What every software engineer should know about real-time data’s unifying abstraction.

Cloud Platforms

Everything goes to the clouds. You should have experience working with at least one cloud provider. I would recommend go with the Amazon Web Services which is the leading cloud provider in the world. The second place goes to the Microsoft Azure, the third place takes Google Cloud Platform.

All providers have certifications. For example, the most suitable certification for a data engineer in AWS is AWS Certified Data Analytics – Specialty. If you decide to proceed with GCP the right choice is Professional Data Engineer, for MSA is Azure Data Engineer Associate.

Fundamentals of Distributed Systems

The amount of data generated nowadays is tremendous. You cannot fit it into one computer. The data should be distributed across different nodes. If you want to be a good data engineer you have to understand the fundamentals of distributed systems. There are lots of resources where you can start your journey into this field:

- Distributed Systems lectures from MIT by Robert Morris

- Distributed Systems lectures by Martin Kleppmann

- Distributed Systems by Lindsey Kuper

I would also highly recommend the book Designing Data-Intensive Applications by Martin Kleppmann. He has a blog. Also if you prefer blogs I would recommend take a look at the series of posts about distributed systems by Vaidehi Joshi.

Data Pipelines

Building a data pipeline is one of the main responsibilities of a data engineer. Data pipeline is a process of data consolidation. Data engineer should be able to reliably deliver, load and transform data from multiple sources into a specific destination, usually it is central data warehouse or data lake. There are many tools which can help you build this process. Take a look at the Apache Airflow, Luigi from Spotify, Prefect or Dagster. If you prefer nocode solution Apache NiFi is a way to go.

Summary

Data engineer is a team player who is working with data analysts, scientists, infrastructure engineers and other stakeholders. So do not forget about soft skills like empathy, understanding a business domain, open-mindedness etc.