Ready to learn Machine Learning? Browse courses like Machine Learning for Predictive Analytics developed by industry thought leaders and Experfy in Harvard Innovation Lab.

Machine learning is the new hotness, we all agree. Probably every story you’ve read with a click-baity title like “7 Technology Trends That Will Dominate 2018” will mention machine learning or AI in one way or another.

The truth is, machine learning is great, but its really easy to quickly become disenfranchised by it if you’re not going about it the right way. I know this from personal experience.

Photo by NeONBRAND on Unsplash

Lead with a problem

First, and perhaps foremost, machine learning is still just scratching the surface of what is possible. What can be frustrating is that you definitely will never hear that from someone trying to sell you machine learning capabilities or services. Even the hot shot data scientists and ML engineers you hire (understandably) may not immediately parrot the limitations of the current state-of-the-art.

I can’t tell you how many use cases and requests I’ve heard from business large and small for things that just simply aren’t possible. I’ve probably heard an equal amount of requests for things that don’t actually need machine learning to accomplish.

Throwing machine learning at just any old problem will not work. You’ll end up wasting a lot of time and money.

Instead, think carefully about the problem you are trying to solve. The simpler you can make the problem, the more likely machine learning will work. Don’t imagine problems that you think only computers would be good at. Look at how much effort would go into solving the problem manually first, then evaluate whether a computer system could perform that task unaided.

Training is everything

Machine learning from scratch is still very very hard. I’d argue that the hardest part is the training. I learned this the hard way when I attempted to train a machine learning model to detect smoking in films and video. I originally thought that I simply needed to show it hundreds of pictures of cigarettes and it would then just learn what a cigarette looked like.

That failed.

Then I figured out I needed to give it two labels; smoking and not smoking. So I found a bunch of random images of things that were not cigarettes and loaded that in.

That also failed.

I then tried a different approach. I decided to take freeze frames from movies where people were smoking. Since that was the context the model was meant to run in, I figured I’d have a better chance of making it work. For my not smoking label, I used freeze frames from dialogue scenes from different movies.

That almost worked. The problem, it turns out, is that what I had done was train the model on detecting the movies I used to train. So it was essentially a is it one of these 5 movies or not model.

One of my favorite scenes from Silicon Valley

The time and effort I spent on this was in vain. I’ve heard similar anecdotes from other customers as well. They hired ML engineers and specialists to build custom models, which took a year, only to end up with something that didn’t work very well.

The solution to this problem is partly the same as the one above. Think about the problem first, and make sure that a good training set is something you can come by.

I ended up solving the problem using Tagbox from Machine Box, but I still had to think about my training data, and decide on how likely edge cases were going to occur.

Don’t spend more money than you save

This seems obvious but its worth repeating. There are some really nifty machine learning APIs out there from the likes of Google, Microsoft, IBM and others. But in practice, the cost of these tools add up really quickly. I’ve seen people get bills for $45,000 just for a month’s worth of development effort against these APIs. One major broadcaster I spoke with told me that it would’ve been more expensive to run all his content through these cloud APIs than it would be to pay high school students to tag everything manually.

That is a huge ROI problem. (It is also why I joined Machine Box, as we solved that problem by making everything a flat subscription fee regardless of use).The cost of training machine learning models on GPUs and elsewhere has come down, but it still costs something. And that something, in many cases, can be more than its worth.

The value you extract from a particular machine learning model will depend on your use case. Make sure its well thought out and validated.

Don’t expect miracles

Has Alexa ever not understood what you’ve said? Isn’t it really frustrating? I still see a lot of accuracy issues with these voice assistants. Voice recognition is very very hard to accomplish. In machine learning, we often cite a percentage of accuracy when we talk about a specific model. If that accuracy is above 80%, we feel like we’ve got something useful. Driving north of 90% is usually just a matter of some tweaking. But what we’re really doing is talking about how well our model predicts something from a subset of the training data we set aside and refer to as a validation set. Problems can arise when your model is run against things that aren’t in the training set. And in a lot of cases, it is impossible to account for every possible edge case. Alexa is in millions of different auditory situations when it is activated. Background noise, accents, echos and a lot of other things confound the voice recognition system.

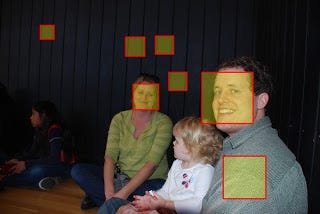

A lot of false positives from an implementation of OpenCV face recognition: https://goo.gl/m9jpwR

When a machine learning model misses something, its really easy to just think its a bug, or perhaps a defect in the model. It is vitally important you understand that that is not the case. False positives and false negatives are part and parcel of what machine learning is. It makes mistakes sometimes, just like we do. Every business has to be prepared for occasional false positives and negatives in machine learning. It is just a fact of life. Humans, performing tasks as businesses, make mistakes all the time.

One of the ways we help mitigate these issues at Machine Box is by making our boxes teachable. Meaning, you can simply correct something or add something to a model, on the fly, without having to undergo any type of retraining. Just like a human, machine learning models will need to be continuously improved over time to account for all the possible edge cases.

But your business and understanding of machine learning needs to take occasional false positives and negatives into account. Just because your system missed a keyword in some text, or a face in a picture, doesn’t mean the whole system should break down. If you’ve ever watched closed captioning on TV, you know that humans make mistakes all the time. Does it mean closed captioning is useless? Of course not. It still provides a vital service. Your implementation of machine learning should as well.

Should I not use it?

In spite of all this, I want to reiterate that machine learning is a wonderful, powerful type of computing that can bring a lot of value to businesses large and small. I have seen a lot of really good use cases for machine learning, being solved cheaply and effectively with Machine Box and sometimes other tools. Everything from improving security and compliance, to improving search and discovery. Used properly and it can save people an astonishing amount of time. It can also help generate a new source of revenue, identify threats, find lost data, and a whole lot more.

All of these successful implementations are such because they were all well-defined problems that can be solved by the current or previous state-of-the-art of machine learning. The amount of effort put in did not exceed the value created.

If you’re unsure if your use cases can be solved by machine learning, the best thing you can do is experiment. But obviously, in a way that doesn’t break the bank. You can easily do this with Machine Box or some of the cloud tools.

Before you jump into a time-consuming data science project, try your best to evaluate the problem, and make sure it’s the simplest iteration of the use case possible.