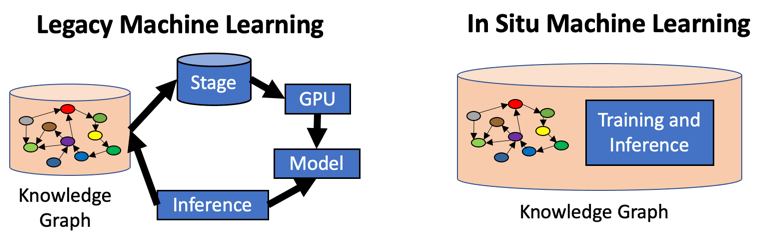

It is complex and expensive to extract data from a database, send it to a GPU, train a model, and then use this model to enrich a database. What if we could leave our data “in place” and continuously run algorithms that would automatically enrich our database with new insights? This is the vision behind a new generation of systems called “In Situ” machine learning systems. They reflect a new trend to integrate machine learning directly into our enterprise knowledge graphs.

The term In Situ means “in the original place”. In this context, it implies that we will keep data in place in our enterprise graph, and we are going to design our systems to minimize the need to move data around. If we look at the problem from the Systems Thinking perspective, we realize that the reason that we started moving data around is that older databases were incredibly inefficient at traversing relationships and binding specific datatypes to computational resources. This is because older relational databases were designed to run on a single server. Before the arrival of Massively Parallel Processing (MPP) enterprise knowledge graphs, the design of the older relational databases made it difficult to run analysis across a cluster of hundreds of nodes. With the arrival of Graphcore and the new Intel PIUMA architectures, we need to start thinking of our databases as integrated data-compute resources distributed over many servers and sometimes even across different data centers. It is now our task to rethink every process in our enterprise knowledge graph that requires unnecessary data movement.

The Benefits of In Situ Machine Learning

I was introduced to the concept of In Situ Machine Learning by Dr. Changran Liu of TigerGraph. I had seen Dr. Liu do in-database machine learning as a “trick” in the past using GSQL functions within TigerGraph. But at the time, I was more concerned with protecting our production systems from slow responses for our 25,000 concurrent users. I didn’t think carefully about it as a realistic long-term goal for a time when we will have 1,000x the compute that we have now. I didn’t appreciate the deep architectural tradeoffs of doing In Situ Machine Learning. Dr. Liu was the first person to carefully articulate some of the benefits of In Situ Machine Learning. Here are a few of the benefits of In Situ Machine Learning from Dr. Liu and some that I have added myself:

- Avoid slow and costly processes of moving data in and out of your database

- Avoid the security and audit problems of possible data leakage by excessive data movement

- Better support for the new generation of “Eyes Off” machine learning where our developers don’t have to see sensitive information such as Personal Healthcare Information

- Better support for continuous model evolution over rapidly changing data sets

- Fewer limitations in model size

- Better utilization of the existing compute resources that are closer to the actual data
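To make the “leave the data in place” idea concrete, here is a minimal sketch in plain Python. It is not Dr. Liu’s GSQL approach and it does not use any TigerGraph API. The `graph` dictionary is a hypothetical stand-in for a graph store, and the function names, features, and labels are invented for illustration. The sketch trains a small online logistic regression by visiting each vertex where it lives, then writes the predicted score back onto the same vertex, so nothing is exported or re-imported.

```python
import math

# Hypothetical in-memory stand-in for a graph store: vertex id -> attributes.
# In a real system these attributes would live inside the database itself.
graph = {
    "c1": {"features": [1.0, 0.0], "label": 1},
    "c2": {"features": [0.0, 1.0], "label": 0},
    "c3": {"features": [1.0, 1.0], "label": 1},
    "c4": {"features": [0.0, 0.0], "label": 0},
}


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, features):
    """Logistic regression prediction for one vertex."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def train_in_place(graph, epochs=200, lr=0.5):
    """Online (one vertex at a time) gradient descent over the stored vertices.

    Only the small weight vector moves; the vertex data is read where it is.
    """
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for attrs in graph.values():
            error = predict(weights, bias, attrs["features"]) - attrs["label"]
            weights = [w - lr * error * x
                       for w, x in zip(weights, attrs["features"])]
            bias -= lr * error
    return weights, bias


def enrich_in_place(graph, weights, bias):
    """Write the model's score back onto each vertex as a new attribute."""
    for attrs in graph.values():
        attrs["score"] = predict(weights, bias, attrs["features"])


weights, bias = train_in_place(graph)
enrich_in_place(graph, weights, bias)
```

Because the update processes one vertex at a time, the same loop could keep running as vertices are added or changed, which is the spirit of the continuous model evolution benefit listed above.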

Why In Situ Machine Learning is More Natural

And if we think carefully about In Situ Machine Learning, we realize that it is a much more natural process. From research on neurogenesis, we have learned that our brains learn continuously. Throughout our lives, new neurons and synapses are continuously being generated and removed in our brains. And we do this without having to dump the data in our brains to external systems! So why can’t our enterprise knowledge graphs do the same thing?

Understanding the Legacy of Video Games and GPUs

The answer is that building deep learning neural networks is fundamentally a massively parallel processing task. The only hardware we had sitting around when that research took off was some cool hardware originally designed to speed up rendering in video games: Graphics Processing Units (GPUs). But this historical hack should not be confused with good architecture, sound design, and Systems Thinking. Our design principle of “don’t move data if you don’t have to” should always be weighed against adherence to old ways of doing things “because that is the way we have always done things.”

You can see a video of Dr. Liu demonstrating In Situ Learning in the Graph + AI World conference session “Hands-on Workshop: Accelerating Machine Learning with Graph Algorithms”. Dr. Liu’s In Situ Machine Learning demo starts around 1:15 in the workshop.

I close with a statement of gratitude for the people who put on the Graph + AI World conference. It was really eye-opening for me to get exposed to the diverse set of speakers from a vast number of companies and industries. A big shout out to my former colleague Jonathan Herke and the rest of the team from TigerGraph. I know you worked hard to make this conference happen, and it was totally worth it for my coworkers and me.