Ready to learn Artificial Intelligence? Browse courses like Uncertain Knowledge and Reasoning in Artificial Intelligence developed by industry thought leaders and Experfy in Harvard Innovation Lab.

Around 2012, researchers at University of Toronto used deep learning for the first time to win ImageNet, a popular computer image recognition competition, beating the best technique by a large margin. For those involved in the AI industry, this was a big deal, because computer vision, the discipline of enabling computers to understand the context of images, is one of the most challenging areas of artificial intelligence.

And naturally, like any other technology that creates a huge impact, deep learning became the focus of a hype cycle. Subsequently, deep learning pushed itself into the spotlight as the latest revolution in the artificial intelligence industry, and different companies and organizations started applying it to solve different problems (or pretend to apply it). Many companies started rebranding their products and services as using deep learning and advanced artificial intelligence. Others tried to use deep learning to solve problems that were beyond its scope.

Meanwhile, media outlets often wrote stories about AI and deep learning that were misinformed and were written by people who did not have proper understanding of how the technology works. Other, less reputable outlets used sensational headlines about AI to gather views and maximize ad profit. These too contributed to the hype surrounding deep learning.

And like every other hyped concept, deep learning faced a backlash. Six years later, Many experts believe that deep learning is overhyped, and it will eventually subside and possibly lead to another AI winter, a period where interest and funding in artificial intelligence will see a considerable decline.

Other prominent experts admit that deep learning has hit a wall, and this includes some of the researchers who were among the pioneers of deep learning and were involved in some of the most important achievements of the field.

But according to famous data scientist and deep learning researcher Jeremy Howard, the “deep learning is overhyped” argument is a bit— well—overhyped. Howard is founder of fast.ai, a non-profit online deep learning course, and he has lots of experience teaching AI to people who do not have a heavy background in computer science.

Howard debunked many of the arguments that are being raised against deep learning in a speech he delivered at the USENIX Enigma Conference earlier this year. The entire video clarifies very well what exactly deep learning does and doesn’t do and helps you get a clear picture of what to expect from the field.

Here are a few key myths that Howard debunks.

Deep learning is just a fad—next year it’ll be something else

Many people think that deep learning has popped out of nowhere, and just as fast as it has appeared, it will go away.

“What you’re actually seeing in deep learning today is the result of decades of research, and those decades of research are finally getting to the point of actually giving state of the art results,” Howard explains.

The concept of artificial neural networks, the main component of deep learning algorithms, have existed for decades. The first neural network dates to the 1950s.

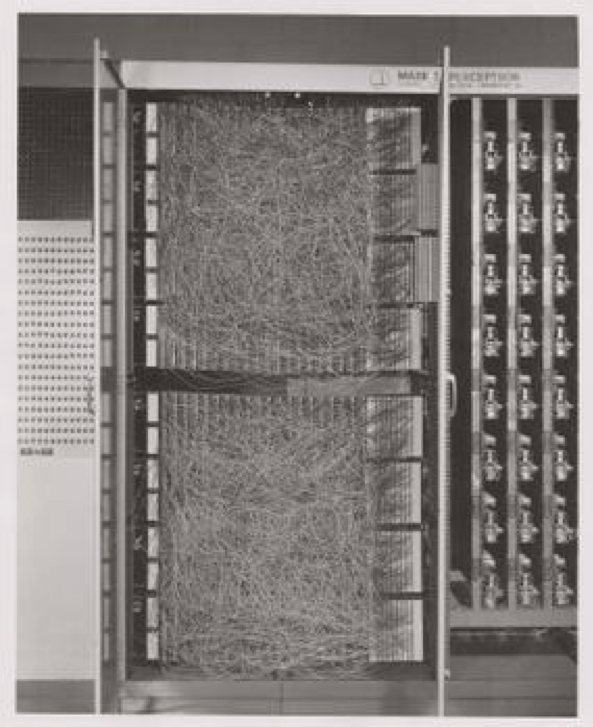

The Mark I Perceptron was the first implementation of neural networks in 1957 (Source: Wikipedia)

But it has only been thanks to decades of research and the availability of data and compute resources in recent years that the concept of deep learning has stepped out of research labs and found its way into practical domains.

“It’s after all this work that people have finally got to the point where it’s working really well,” Howard says. “You should expect to see [deep learning] continue to improve rather than disappear.”

Deep learning and machine learning are the same thing

To be fair, some people—advertently or inadvertently—sometimes use the plethora of terms that define different AI techniques interchangeably. And the misuse of AI vocabulary has led to confusion and skepticism toward the industry. Some people say that deep learning is just another name for machine learning, while others place it at the same level as other AI tools such as support vector machines (SVM), random forests and logistic regression.

But deep learning and machine learning are not the same. Deep learning is a subset of machine learning. In general, machine learning applies to all the techniques that mathematical models and behavior rules based on training data. ML techniques have been in use for a long time. But deep learning is far superior than its peers.

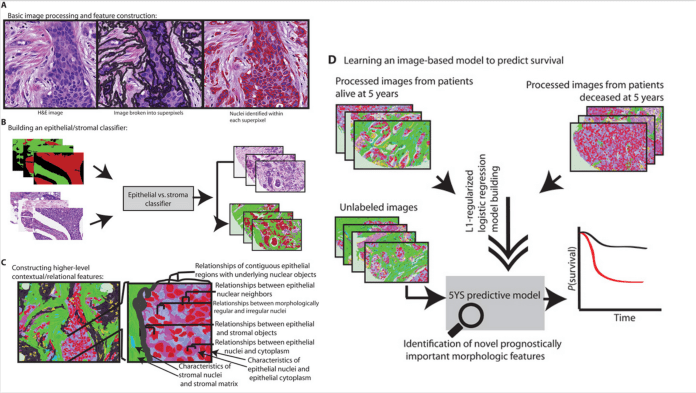

Before deep learning, scientists had to do put a lot of hard work into programming “features” or modules that could perform smaller parts of the task that your model wanted to perform. For instance, if you wanted to create an AI model that could detect images of cats, you would have to write smaller programs that could detect cat features such as the ears, the tail, the nose, the fur. And you would have to make those programs strong enough to detect those features from different angles and under different lighting conditions, as well as tell the difference between different cat species. And then you had to do machine learning on top of those features.

If you wanted to tackle a more complex problem, such as detecting breast cancer from MRI scans, then creating features would become even more challenging. “You would have dozens of domain experts working with dozens of computer programmers and mathematicians to come up with these ideas of features and program them,” Howard says. “And then you would put them through a classic machine learning model such as logistic regression.” The effort would effectively take years and years of work.

Classic machine learning approaches involved lots of complicated steps and required the collaboration of dozens of domain experts, mathematicians and programmers

Deep learning, on the other hand, replaces the arduous classic machine learning process with neural networks. Howard describes neural networks as an “infinitely flexible function.” This means that neural networks can be applied to most problems that you solved with machine learning without going through all the domain-specific feature engineering that you previously had to do.

To adapt a neural network to solve a specific problem you need to adjust its parameters. To do this, deep learning uses “gradient descent,” an all-purpose optimization algorithm that fits the parameters of a neural network to the problem it wants to solve.

Finally, deep learning leverages the power of GPUs and specialized hardware that have become available in recent years to perform these tasks in a reasonably fast and scalable way.

“It is only in the past few years that these three things have come together to allow us to actually use neural networks to get state-of-the-art results,” Howard says.

So rather than going through the expertise-intensive and error-prone process that previous machine learning methods involved, deep learning enables you to provide samples data (e.g. labeled cat pictures, MRI scans labeled as cancerous or non-cancerous…) and train the neural network using gradient descent. The neural network compares and finds common patters in those data samples and learns to apply the same knowledge to classify new samples of data it hasn’t seen before.

This approach has made deep learning the most popular artificial intelligence technique in the past few years and has caused an explosion of applications that use deep learning.

Deep learning is only good for image recognition

While acknowledging that deep learning is a very useful AI technique, a lot of its critics complain that its use is limited to solving problems that involve image classification.

“Image recognition is actually really important,” Howard says. A few years ago, Howard and a team of researchers trained a deep neural network on CT scans of lungs and created an algorithm that could detect malignant cancer tumors with lower false positive and negative rates in comparison to a panel of four human radiologists.

Howard also points out that many problems can be recast as image recognition problems. For instance, AlphaGo, the deep learning algorithm that beat the world champion at the ancient Chinese game of Go, was actually an image recognition convolutional neural network (CNN).

“Specifically, what AlphaGo did was it looked at lots of examples of Go boards that had been played in actual human tournaments,” Howard explains. “Basically, they ended up doing an image recognition neural network where the thing they were trying to learn wasn’t ‘Is this photo a cat or a dog’ but ‘Is this a picture of a Go board where white wins or black wins.’ They ended up with something where they could actually predict the winner of a Go game by looking at the Go board.”

This approach has been the key piece to the success of AlphaGo and many other AI algorithms that have mastered different board and video games.

The point is, many problems can be transformed into image recognition problems and solved with deep learning. For instance, one of the students of Howard’s deep learning course created a neural network that was trained on the images that represented mouse movements and clicks. “In this case, he created a convolutional neural network, an image recognition program, to try and predict fraud based on these pictures,” Howard says.

Deep learning can turn visual representations of mouse movements and clicks into fraud detection applications

That said, deep learning has also proven its worth beyond the domain of computer vision and image recognition.

Howard points out that deep learning now also works for most natural language processing (NLP) problems. This includes areas like machine translation and text summarization. NLP is also a key component that enables AI assistants such as Siri, Alexa and Cortana to understand your commands. (To be clear, there are distinct limits to deep learning’s grasp of human language.)

Deep learning can also solve problems involving structured data, such as rows and columns in a spreadsheet. For instance, you can provide a neural network with a set of rows representing financial transactions and their outcome (fraudulent or normal) and train it to predict fraudulent transactions.

Howard points out that deep learning can also be applied to time series and signals problems, such as an order of events of different IP addresses connecting to a network or sensor data collected over time.

The pain points of deep learning

But more importantly, Howard also points out to some of the areas where deep learning has had limited success. These areas include reinforcement learning, adversarial models and anomaly detection.

Some experts believe reinforcement learning is the holy grail of current artificial intelligence. Reinforcement learning involves developing AI models without providing them with a huge amount of labeled data. In reinforcement learning, you provide your model with the constraints of the problem domain and let it develop its own behavioral rules. AlphaGo Zero, the advanced version of AlphaGo, used reinforcement learning to train itself from scratch and best its predecessor. While deep reinforcement learning is one of the more interesting areas of AI research, it has still had limited success in solving real-world problems. Google Brain AI researcher Alex Irpan has a fascinating post on the limits of deep reinforcement learning.

Adversarial models, the second area where Howard mentions in video, is another pain point of deep learning. Adversarial examples are instances where manipulating the inputs can cause a neural network to behave in irrational ways. There are many papers in which researchers show how adversarial examples can turn into attacks on AI models.

There have been several efforts to harden deep learning models against adversarial attacks, but so far, there has been limited success. Part of the challenge stems from the fact that neural networks are very complex and hard to interpret.

Anomaly detection, the third deep learning pain point that Howard speaks about is also very challenging. The general concept is to train a neural network on a baseline data and let it determine behavior that deviates from the baseline. This is one of the main approaches to using AI in cybersecurity and several companies are exploring the concept. However, it still hasn’t been able to establish itself as a very reliable method to fight security threats.

Deep learning is unusable because it’s a black box

This is a real concern, especially in areas where critical decisions are being conferred to artificial intelligence models, such as health care, self-driving cars and criminal justice. The people who are going to let deep learning make decisions on their behalf need to know what drives those decisions. Unfortunately, the performance advantage you get when you train a neural network at performing a task comes at a cost to the visibility you get at its decision-making process. This is why deep learning is often called a “black box.”

But there are also lots of interesting efforts to explain AI decisions and help both engineers and end users understand the elements that influence the output of neural networks.

“The way you understand a deep learning model is you can look inside the black box using all of these interpretable ML techniques,” Howard says.

(I recently wrote an in-depth feature for PCMag on different explainable AI techniques. It contains interviews with AI researchers and experts. You might find it an interesting read if you want to dig in deeper into the efforts relating to interpreting AI decisions.)

Deep learning needs lots of data

The general perception is that to create a new deep learning model, you need access to millions and billions of labeled examples, and this is why it’s only accessible to large tech companies.

“The claim that you need lots of data is generally not true because most people in practice use transfer learning,” Howard says.

In general, transfer learning is a discipline in machine learning where knowledge gained from training one model is transferred to another model that performs a similar task. Compared to how humans transfer knowledge from one domain to another, it’s very primitive. But transfer learning is a very useful tool in the domain of deep learning, because it enables developers to create new models with much less data.

“You start with a pre-trained [neural] network, which maybe somebody else’s and then you fine-tune the weights for your particular task,” Howard explains. Howard further says that in general, if you have about 1,000 examples, you should be able to develop a good neural network.

You need a PhD to do deep learning

Deep learning is a very complicated domain of computer science and it involves a lot of advanced mathematical concepts. But in the past years, plenty of tools and libraries have been created that abstract away the underlying complexities and enable you to develop deep learning models without getting too involved in the mathematical concepts.

Fast.ai and Keras are two off-the-shelf libraries that you can use to quickly develop deep learning applications. There are also plenty of online courses, including Howard’s fast.ai, Coursera and others, that enable you to get started in deep learning programming with little programming knowledge and without having a computer science degree. Many people with backgrounds other than computer science have been able to apply these courses to real-world problems.

To be clear, deep learning research remains a very advanced and complicated domain. Talent is both scarce and expensive. The people who develop new deep learning techniques are some of the most coveted and well paid researchers. But that doesn’t mean others need to have the same level of knowledge use the results of those research projects in their applications.

Deep learning needs lots of computing power and GPU

“You might worry that you need a huge room full of GPUs [for deep learning]. That’s really not true on the whole,” Howard says. “The vast majority of the successful results I see now are done with a single GPU.”

Big racks of GPUs are needed for big research projects that you see at large companies and organizations, such as this robotic hand that was trained with 6144 CPUs and 8 GPUs.

Another example is OpenAI Five, an AI model trained to play the famous Dota 2 online battle arena video game. OpenAI Five was trained with 128,000 CPU cores and 256 GPUs.

However, most practical problems can be solved with a single GPU. For instance, you can go through Howard’s Fast.ai course with a single GPU.

Some closing thoughts

I would surely recommend that you watch the entire video (below). In it, Howard delves into some more specialized topics such as whether you can apply deep learning to infosec (I think the topic deserves an entire post in itself).

What’s important is that we understand the extents and limits as well as the opportunities and advantages that lie in deep learning, because it is one of the most influential technologies of our time. Deep learning is not overhyped. Perhaps it’s just not well understood.