If you read the first article in this series, you’re already on your way to upping your math game. Maybe some of those funny little symbols are starting to make sense.

But here’s another dirty little secret nobody tells you about AI:

You don’t actually need that much math to get started.

If you’re a developer or sys-admin you probably already use a lot of libraries and frameworks that you know little about. You don’t have to understand the inner workings of web-scraping to use curl. The same is true with AI. There are a number of frameworks and projects that make it easy to get going fast without needing a data science Ph.D.

Don’t get me wrong. The math helps you feel confident about what’s going on behind the scenes. It allows you to read research papers and advanced books like Ian Goodfellow’s Deep Learning without your eyes glazing over. So keep studying the books I gave you in the last article. But if you want to start using AI, you can do that today.

Let’s get started with some practical projects.

My approach to learning is very similar to the excellent approach outlined in The First Twenty Hours. We all know the 10,000 hour rule. To truly master a skill you need to put in a lot of time. But we’re not there yet. We’re just getting started. Right now we’re trying to get from “this sucks” to “this is so much fun!”

The basics of the approach are simple:

- Pick a project.

- Get past self-crippling beliefs.

- Try lots of stuff and fail fast.

- Practice.

Easy, right? So let’s go!

Pick a Project

First off, you need a project that will really motivate you to get out of your comfort zone.

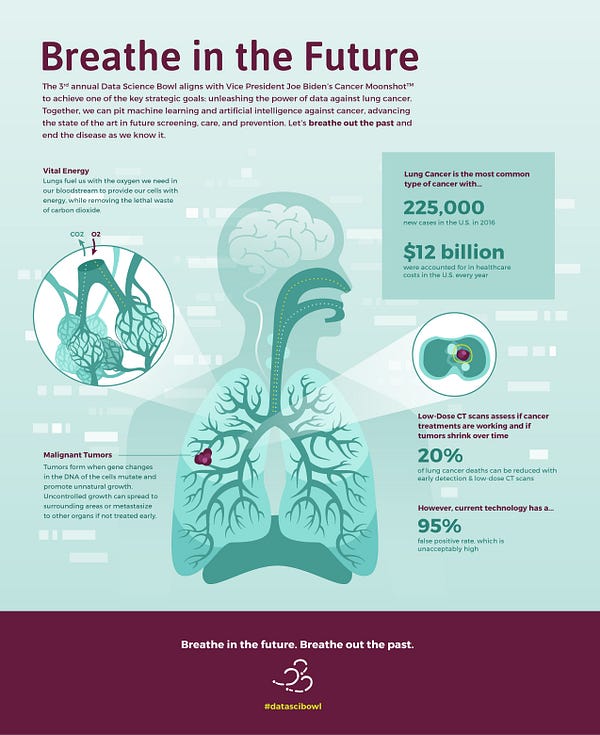

Kaggle is the place for machine learning. Right now they’re hosting a contest with a $1 million purse to improve classification of lung cancer lesions. Anyone can enter, including you.

Now I know what you’re thinking. There’s no chance that I win this. This is a contest for heavy hitters. Glad you brought that up, because that brings us to step two:

Get Over Self-Crippling Beliefs

The most important step in learning anything new is to shut down that little voice of self-doubt in your head as fast as possible. The First Twenty Hours advocates removing distractions, practicing on the clock and a number of other techniques. Throw in meditation, affirmations or heavy drinking. Whatever works. Just do whatever it takes to get that voice to go away so you can focus. If you need a self-help book to get over the hump, try You Are a Badass, a fun, funny, sarcastic masterpiece!

Here’s the deal: You do suck right now. But that’s OK! You won’t for long.

Feeling confused and frustrated is always the first stage of learning. So rather than beating yourself up, see it as a sign that you’re on the right track. You’re learning something awesome!

You probably won’t win the competition, but so what? Focus on getting a competent entry submitted before the deadline. Not everyone can win a marathon, but finishing one is a hell of an accomplishment in and of itself, right?

And you know what? You just might win. Seriously.

As an amateur, you’re not burdened by years of theory and ideas that weigh down the professionals. Just remember the story about the student who solved two unsolvable math problems after finding them on the blackboard and mistaking them for homework assignments. The truth is data science is more art than science. It’s a field that attracts polymaths with all kinds of eclectic backgrounds. So get in there and try stuff.

Who knows what will happen?

Maybe you’ll see something the experts missed, make a real impact on cancer detection and take home some serious money to boot!

Try Lots of Stuff and Fail Fast

If you’re a dev-ops guy, you know this mantra. It applies to learning too. What I do is grab a bunch of samples of books and start skimming them quickly to see which ones make the most sense to me. Each person has a different style, so some books will work for one person and not another. Pick the one that works best for you.

There are a few books on machine learning out there, like Real World Machine Learning. Unfortunately, because the field is so new, most of the books are just starting to come out this year. You can pre-order Deep Learning: A Practitioner’s Approach or Hands-On Machine Learning with Scikit-Learn and TensorFlow.

But you don’t have to wait. Let me introduce you to my friend Safari Books Online. For forty bucks a month you can read as many books as you want, and you get access to books in progress before they’re released, including the two listed above.

I’m going to save you some time though. Right now it’s totally unnecessary to learn how to code deep learning systems from scratch in Python, R, or Java. You need tools to get you rolling with Deep Learning fast so you can start working on your contest entry.

You want Keras with either TensorFlow or Theano.

You don’t even need to set it up yourself. Grab this sweet all-in-one deep learning Docker image.

Frankly, it doesn’t matter whether you use TensorFlow or Theano. They’re basically engines for running machine learning. At this point in your education, both are equal, so pick one.

Keras is a high-level neural network library that runs on top of engines like TensorFlow and Theano, created by a top-notch Google AI researcher. I had the good fortune of meeting the creator of Keras, François Chollet, this weekend. He described Keras as the key to “democratizing AI.” He said that “deep learning is mature but it’s not yet widely disseminated… You don’t have to be an AI researcher to use Keras.” Instead, you can just start playing around with all kinds of state-of-the-art algorithms right away.

If you’ve already got a Mac or Linux rig with a good Nvidia graphics card you’re good to go. If you don’t, consider picking up an Alienware. I recommend the mid-range Aurora series. You don’t need a kick-ass processor. You need an SSD, a secondary spinning disk to dump data to, 16–64GB of memory and the best Nvidia card(s) you can afford. Focus all your cash on the cards, as they really accelerate deep learning. You’ll need to reformat it with Linux and get the latest binary drivers. Unfortunately, the open source ones won’t cut it for the latest chipsets. They’ll likely boot to a black screen. Fix that like this.

There are also some tutorials out there for building your own rig if you’re a do-it-yourselfer. Also, I just added my own tutorial in part three!

Lastly, you can use the AWS, Google or Azure cloud, but GPU compute in the cloud can get expensive quickly. Better to buy than lease until you know what you’re doing.

Practice

Now you’re ready to get started. Here’s a super-simple example for getting started with Keras.

You are going to need an approach to the competition. Once again, I’m going to save you some time.

The most effective method of tagging and studying images at the moment is known as a convolutional neural net (CNN). Google, Facebook, Pinterest, and Amazon all use them for image processing and tagging. You might as well start with the best practice, right?
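If you’re wondering what the “convolutional” part actually does, here’s a minimal sketch in plain Python. It slides a tiny filter over a toy image and records how strongly each patch matches. The image and the `vertical_edge` filter are made up for illustration. A real CNN learns its filter values from data instead of having them typed in by hand, and libraries like Keras do this same arithmetic far faster.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the patch under the filter element by element, then sum.
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# A toy 4x4 "image": dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# A hand-made filter that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
for row in feature_map:
    print(row)
# prints:
# [0, 2, 0]
# [0, 2, 0]
# [0, 2, 0]
```

The middle column lights up because that’s exactly where dark meets bright. Stack lots of learned filters like this in layers and you’ve got the core of a CNN.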

In fact, if you head over to the competition itself, get the data set, and check out the tutorial, you’ll see that it walks you through slicing and dicing the images and using a CNN with Keras and a TensorFlow backend. Voila!
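To give you a feel for how little code a CNN takes in Keras, here’s a rough sketch. It is not the competition tutorial’s model. The 64x64 single-channel input size, the layer sizes and the yes/no output are all assumptions picked for illustration, and it uses the copy of Keras that ships inside TensorFlow.

```python
from tensorflow import keras
from tensorflow.keras import layers

# Assumed input: 64x64 grayscale image patches, one yes/no label per patch.
model = keras.Sequential([
    keras.Input(shape=(64, 64, 1)),
    # Convolution layers learn small filters; pooling shrinks the image.
    layers.Conv2D(16, kernel_size=3, activation="relu"),
    layers.MaxPooling2D(pool_size=2),
    layers.Conv2D(32, kernel_size=3, activation="relu"),
    layers.MaxPooling2D(pool_size=2),
    # Flatten the feature maps and make a single probability prediction.
    layers.Flatten(),
    layers.Dense(64, activation="relu"),
    layers.Dense(1, activation="sigmoid"),
])

model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
model.summary()
```

Once you have arrays of images and labels (call them `x_train` and `y_train`, both hypothetical names here), training is a single call along the lines of `model.fit(x_train, y_train, epochs=5, batch_size=32)`.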

Frankly, you could do a lot worse than just implementing the tutorial and messing around with the parameters for a few weeks to see what you get.

After that, get crazy. Throw different parameters and algorithms at it. Experiment and have fun. Maybe you’ll stumble across something the experts missed!

If you’re ready to try something more advanced after that, there are some great posts on the Kaggle Data Science Bowl 2017 board. Turns out data scientists are not above sharing some of their secret sauce. Check out this one, which helps you start exploring the data: a series of anonymized CT scans.

This one is more advanced and currently the most popular post on the board for good reason. It helps you do “pre-processing,” which is basically scrubbing and massaging the data to make it easier for neural nets to deal with. It actually turns the 2D images into 3D images! Super cool!
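Here’s a stripped-down sketch of that idea using NumPy. It is not the code from the post. It skips loading real scan files entirely and uses three fake 4x4 slices as stand-in data, and the clipping range of -1000 to 400 is an assumption chosen for illustration. The point is just to show the two basic moves: stack the 2D slices into one 3D block, then squash the values into a tidy 0-to-1 range.

```python
import numpy as np


def build_volume(slices, min_bound=-1000.0, max_bound=400.0):
    """Stack 2D slices into one 3D array and scale values to the 0-1 range."""
    # Shape goes from a list of (height, width) to (num_slices, height, width).
    volume = np.stack(slices, axis=0).astype(np.float32)
    # Clip extreme values so outliers don't dominate (bounds are an assumption).
    volume = np.clip(volume, min_bound, max_bound)
    # Rescale so min_bound maps to 0.0 and max_bound maps to 1.0.
    return (volume - min_bound) / (max_bound - min_bound)


# Stand-in data: three fake 4x4 slices instead of real CT scans.
fake_slices = [np.full((4, 4), value) for value in (-2000, -300, 1000)]

volume = build_volume(fake_slices)
print(volume.shape)  # prints (3, 4, 4)
```

Neural nets tend to train more smoothly on small, consistent input values, which is a big part of why this kind of scrubbing is worth the effort.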

Frankly, if you type out all this code yourself and get it running, you’re already kicking serious ass. This approach to programming is called “the hard way,” i.e., just type in the code without thinking about it until you understand it. There is even a series of books on Python and other languages that takes this approach to learning, and it may work for you.

One warning: Someone posted a perfect score in the competition already. He did it in a clever way, by studying the leaderboards and effectively doubling his training set size. It’s perfectly legal, but it won’t really help your goal, which is to learn about how to run neural nets against a training set for a good cause. I’d skip this approach for now, and focus on running Keras against the CT scans.

That’s it! With any luck, you’ll help redefine cancer research and take home some cash too. Not a bad day’s work.

But even if you don’t win, you’ll be well on your way to learning how to use AI in the real world.

Whatever happens, remember to have fun!