The concepts of Linear Algebra are crucial for understanding the theory behind Machine Learning, especially Deep Learning. They give you a better intuition for how algorithms really work under the hood, which enables you to make better decisions. So if you really want to be a professional in this field, you cannot avoid mastering some of these concepts. This post introduces the most important concepts of Linear Algebra that are used in Machine Learning.

Table of Contents:

- Introduction

- Mathematical Objects

- Scalar

- Vector

- Matrix

- Tensor

- Computational Rules

- 1. Matrix-Scalar Operations

- 2. Matrix-Vector Multiplication

- 3. Matrix-Matrix Addition and Subtraction

- 4. Matrix-Matrix Multiplication

- Matrix Multiplication Properties

- 1. Not Commutative

- 2. Associative

- 3. Distributive

- 4. Identity Matrix

- Inverse and Transpose

- 1. Inverse

- 2. Transpose

- Summary

- Resources

Introduction

Linear Algebra is a continuous form of mathematics that is applied throughout science and engineering because it allows you to model natural phenomena and to compute them efficiently. Because it is continuous rather than discrete mathematics, many computer scientists don’t have much experience with it. Linear Algebra is also central to almost all areas of mathematics, such as geometry and functional analysis.

Its concepts are a crucial prerequisite for understanding the theory behind Machine Learning, especially if you are working with Deep Learning algorithms. You don’t need to understand Linear Algebra before you get started with Machine Learning, but at some point you will want a better intuition for how the different Machine Learning algorithms really work under the hood. This will help you make better decisions during the development of a Machine Learning system. So if you really want to be a professional in this field, you cannot avoid mastering the parts of Linear Algebra that are important for Machine Learning.

In Linear Algebra, data is represented by linear equations, which are presented in the form of matrices and vectors. Because of that, you mostly deal with matrices and vectors rather than with scalars (we will cover these terms in the following section). When you have the right libraries at your disposal, like NumPy, you can compute complex matrix multiplications with just a few lines of code. Note that this blog post ignores concepts of Linear Algebra that are not important for Machine Learning.

Mathematical Objects

Scalar

A scalar is simply a single number, for example 24.

Vector

A Vector is an ordered array of numbers and can be written as a row or a column. A Vector has just a single index, which points to a specific value within the Vector. For example, V2 refers to the second value within the Vector, which is “-8” in the yellow picture above.

Matrix

A Matrix is an ordered 2D array of numbers and it has two indices. The first one points to the row and the second one to the column. For example, M23 refers to the value in the second row and the third column, which is “8” in the yellow picture above. A Matrix can have any number of rows and columns. Note that a Vector is also a Matrix, but with only one row or one column.

The Matrix in the yellow picture is a 2-by-3 Matrix (rows × columns). Below you can see another example of a Matrix along with its notation:
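The index notation for Vectors and Matrices can be tried out in NumPy. This is only a sketch: the arrays below are hypothetical, and just the entries -8 and 8 are taken from the examples in the text. Keep in mind that NumPy counts from 0, whereas the mathematical notation counts from 1.

```python
import numpy as np

# Hypothetical arrays; only the entries -8 and 8 come from the text.
v = np.array([3, -8, 5])
M = np.array([[1, 4, 7],
              [2, 5, 8]])

# NumPy indices start at 0, so the mathematical V2 is v[1] ...
second_value = v[1]
# ... and M23 (second row, third column) is M[1, 2].
m_23 = M[1, 2]

print(second_value)  # -8
print(m_23)          # 8
print(M.shape)       # (2, 3): 2 rows, 3 columns
```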

Tensor

A Tensor is an array of numbers, arranged on a regular grid, with a variable number of axes. A third-order Tensor has three indices, where the first one points to the row, the second to the column and the third one to the axis. For example, V232 points to the second row, the third column, and the second axis. This refers to the value 0 in the right Tensor in the picture below:

Tensor is the most general term for all of the concepts above: a Tensor is a multidimensional array, and depending on the number of indices it has, it can be a Vector or a Matrix. For example, a first-order Tensor is a Vector (1 index) and a second-order Tensor is a Matrix (2 indices). Tensors of third order (3 indices) and above are called higher-order Tensors.
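As a rough illustration, NumPy reports the number of indices of an array as `ndim`. The arrays here are made up for the example, and the shape of the third-order Tensor is an arbitrary assumption.

```python
import numpy as np

scalar = np.array(24)                # no index needed
vector = np.array([1, 2, 3])         # one index
matrix = np.array([[1, 2], [3, 4]])  # two indices
tensor = np.zeros((3, 4, 2))         # three indices; shape chosen arbitrarily

orders = [x.ndim for x in (scalar, vector, matrix, tensor)]
print(orders)  # [0, 1, 2, 3]

# Second row, third column, second axis (0-based indices 1, 2, 1)
entry = tensor[1, 2, 1]
print(entry)  # 0.0
```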

Computational Rules

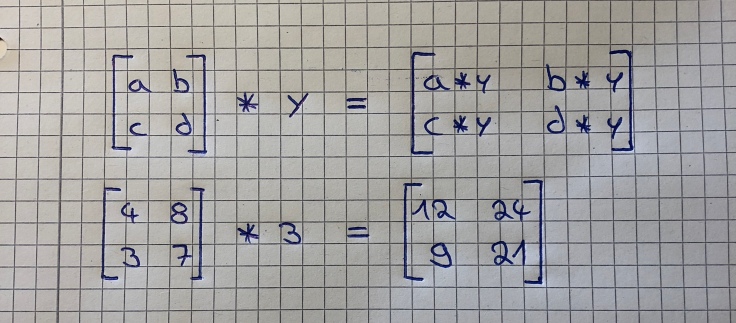

1. Matrix-Scalar Operations

When you multiply, divide, add or subtract a matrix and a scalar, you apply that operation to every element of the matrix. The image below shows this for multiplication:

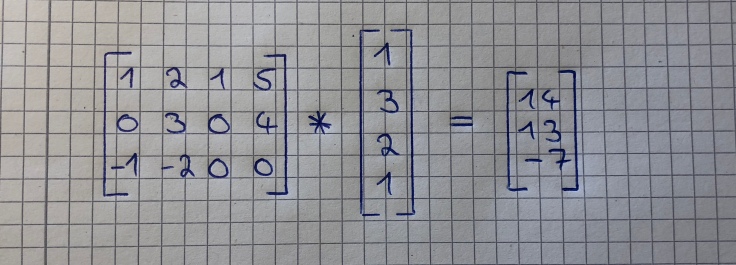
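In NumPy, the usual arithmetic operators behave this way when one operand is a scalar. A minimal sketch with an example matrix of my own choosing:

```python
import numpy as np

A = np.array([[1, 2],
              [3, 4]])

doubled = A * 2   # every element multiplied by 2
halved = A / 2    # every element divided by 2
shifted = A + 10  # 10 added to every element
reduced = A - 1   # 1 subtracted from every element

print(doubled.tolist())  # [[2, 4], [6, 8]]
print(halved.tolist())   # [[0.5, 1.0], [1.5, 2.0]]
print(shifted.tolist())  # [[11, 12], [13, 14]]
print(reduced.tolist())  # [[0, 1], [2, 3]]
```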

2. Matrix-Vector Multiplication

Multiplying a matrix by a vector can be thought of as multiplying each row of the matrix by the column of the vector. This requires the vector to have as many entries as the matrix has columns. The output is a vector that has the same number of rows as the matrix. The images below show how this works:

To better understand the concept, we will go through the calculation in the second image. To get the first value of the resulting vector (16), we take the numbers of the vector (1 and 5), multiply them by the corresponding numbers in the first row of the matrix (1 and 3), and add up the products:

1*1 + 3*5 = 16

We do the same for the values within the second row of the matrix:

4*1 + 0*5 = 4

And again for the third row of the matrix:

2*1 + 1*5 = 7
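The same calculation can be checked in NumPy. The matrix and vector below are reconstructed from the three calculations above; the `@` operator performs the matrix-vector product.

```python
import numpy as np

# Rows taken from the worked calculation: (1, 3), (4, 0), (2, 1)
A = np.array([[1, 3],
              [4, 0],
              [2, 1]])
x = np.array([1, 5])

result = A @ x
print(result.tolist())  # [16, 4, 7]

# The same result, computed row by row as in the text
by_rows = [int(np.dot(row, x)) for row in A]
print(by_rows)  # [16, 4, 7]
```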

Here is another example:

And here is some kind of cheat-sheet:

3. Matrix-Matrix Addition and Subtraction

Matrix-Matrix Addition and Subtraction is fairly straightforward. The requirement is that the matrices have the same dimensions, and the result is a matrix with those same dimensions. You just add or subtract each value of the first matrix and its corresponding value in the second matrix. The picture below shows what I mean:

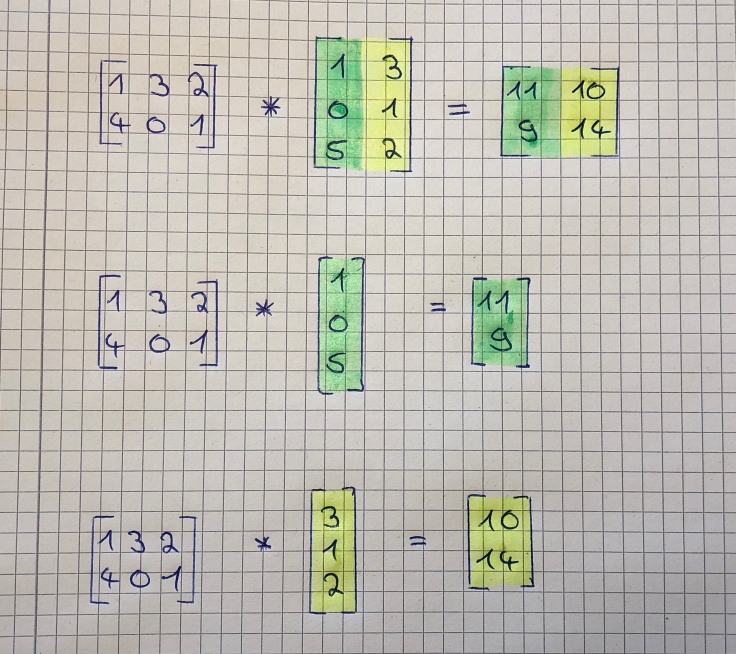
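Here is a small NumPy sketch of element-wise addition and subtraction, using example matrices of my own. The last part shows that NumPy rejects a 2-by-2 and a 2-by-3 matrix; note that NumPy's broadcasting rules do accept some other shape combinations that the strict mathematical definition would not.

```python
import numpy as np

A = np.array([[1, 2],
              [3, 4]])
B = np.array([[5, 6],
              [7, 8]])

total = A + B       # element-wise sum
difference = A - B  # element-wise difference

print(total.tolist())       # [[6, 8], [10, 12]]
print(difference.tolist())  # [[-4, -4], [-4, -4]]

# A 2x2 and a 2x3 matrix cannot be added
C = np.ones((2, 3))
try:
    A + C
    shapes_compatible = True
except ValueError:
    shapes_compatible = False

print(shapes_compatible)  # False
```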

4. Matrix-Matrix Multiplication

Multiplying two matrices together isn’t hard either if you know how to multiply a matrix by a vector. Note that you can only multiply matrices together if the number of columns of the first matrix matches the number of rows of the second matrix. The result is a matrix that has the same number of rows as the first matrix and the same number of columns as the second matrix. It works as follows:

You simply split the second matrix into column vectors and multiply the first matrix separately by each of these vectors. Then you put the results side by side as the columns of a new matrix (without adding them up!). The image below explains this step by step:
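In code, the column-by-column procedure could look like the sketch below. The matrices are illustrative choices of mine, and the result is compared against NumPy's built-in `@` operator.

```python
import numpy as np

A = np.array([[1, 3],
              [4, 0],
              [2, 1]])  # 3 rows, 2 columns
B = np.array([[1, 2],
              [5, 0]])  # 2 rows, 2 columns

# Multiply A by each column vector of B separately ...
columns = [A @ B[:, j] for j in range(B.shape[1])]
# ... and place the resulting vectors side by side.
by_columns = np.column_stack(columns)

direct = A @ B

print(by_columns.tolist())                 # [[16, 2], [4, 8], [7, 4]]
print(np.array_equal(by_columns, direct))  # True
print(direct.shape)                        # (3, 2)
```

The result has as many rows as `A` and as many columns as `B`.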

And here again is a kind of cheat sheet:

Matrix Multiplication Properties

Matrix Multiplication has several properties that allow us to bundle a lot of computation into one Matrix multiplication. We will discuss them one by one below. We will start by explaining these concepts with Scalars and then with Matrices because this gives you a better understanding.

1. Not Commutative

Scalar multiplication is commutative, but Matrix multiplication is not. This means that when we multiply scalars, 7*3 is the same as 3*7. But when we multiply matrices with each other, A*B is in general not the same as B*A.
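A quick NumPy check with two example matrices (chosen here just for illustration) shows the two products differing:

```python
import numpy as np

A = np.array([[1, 2],
              [3, 4]])
B = np.array([[0, 1],
              [1, 0]])

AB = A @ B
BA = B @ A

print(AB.tolist())             # [[2, 1], [4, 3]]
print(BA.tolist())             # [[3, 4], [1, 2]]
print(np.array_equal(AB, BA))  # False
```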

2. Associative

Scalar and Matrix Multiplication are both associative. This means that the Scalar multiplication 3*(5*3) is the same as (3*5)*3 and that the Matrix multiplication A*(B*C) is the same as (A*B)*C.
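Associativity can be verified numerically for a concrete case. The matrices below are arbitrary integer examples, so the comparison is exact; with floating-point entries, `np.allclose` would be the safer comparison.

```python
import numpy as np

A = np.array([[1, 2], [3, 4]])
B = np.array([[0, 1], [1, 0]])
C = np.array([[2, 0], [1, 3]])

left = (A @ B) @ C
right = A @ (B @ C)

print(left.tolist())                # [[5, 3], [11, 9]]
print(np.array_equal(left, right))  # True
```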

3. Distributive

Scalar and Matrix Multiplication are also both distributive. This means that 3*(5 + 3) is the same as 3*5 + 3*3 and that A*(B+C) is the same as A*B + A*C.
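The distributive property can be checked the same way, again with arbitrary integer matrices chosen for this sketch:

```python
import numpy as np

A = np.array([[1, 2], [3, 4]])
B = np.array([[0, 1], [1, 0]])
C = np.array([[2, 0], [1, 3]])

combined = A @ (B + C)
separate = A @ B + A @ C

print(combined.tolist())                   # [[6, 7], [14, 15]]
print(np.array_equal(combined, separate))  # True
```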

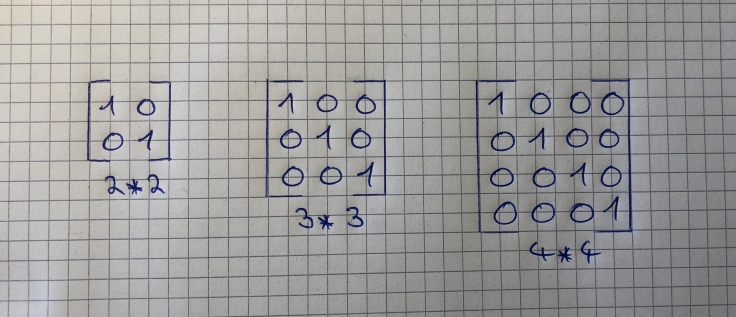

4. Identity Matrix

The Identity Matrix is a special kind of Matrix, but first we need to define what an identity is. The number 1 is an identity because everything you multiply by 1 is equal to itself. In the same way, every Matrix that is multiplied by an Identity Matrix is equal to itself. For example, Matrix A times the Identity Matrix is equal to A.

You can spot an Identity Matrix by the fact that it has ones along its main diagonal and that every other value is zero. It is also a “square matrix”, meaning that its number of rows matches its number of columns.

We previously discussed that Matrix multiplication is not commutative, but there is an exception: multiplying a Matrix by an Identity Matrix. For a square Matrix A and an Identity Matrix I of the same size, the following equation is true: A * I = I * A = A
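NumPy can build an Identity Matrix with `np.eye`. A short sketch with an example matrix of my own confirms that multiplying from either side leaves the matrix unchanged:

```python
import numpy as np

A = np.array([[1, 2],
              [3, 4]])
I = np.eye(2, dtype=int)  # 2x2 Identity Matrix

print(I.tolist())                # [[1, 0], [0, 1]]
print(np.array_equal(A @ I, A))  # True
print(np.array_equal(I @ A, A))  # True
```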

Inverse and Transpose

The Matrix inverse and the Matrix transpose are two special kinds of Matrix properties. Again, we will start by discussing how these properties relate to real numbers and then how they relate to Matrices.

1. Inverse

First of all, what is an inverse? A number that is multiplied by its inverse is equal to 1. Note that every number except 0 has an inverse. Likewise, if you multiply a Matrix by its inverse, the result is the Identity Matrix. The example below shows what the inverse of a scalar looks like:

But not every Matrix has an inverse. You can compute the inverse of a Matrix only if it is a “square matrix” and if it is invertible. Unfortunately, discussing which Matrices have an inverse is beyond the scope of this post.

Why do we need an inverse? Because we can’t divide Matrices. There is no concept of dividing by a Matrix, but we can multiply by its inverse, which achieves the same thing.

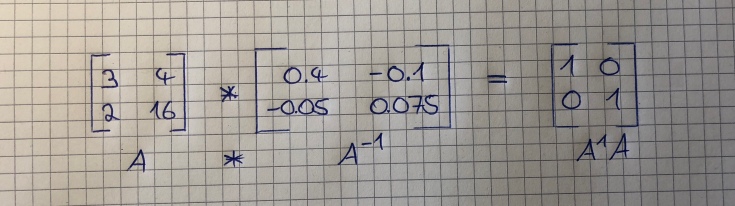

The image below shows a Matrix that gets multiplied by its own inverse, which results in a 2 by 2 identity matrix.

You can easily compute the inverse of a Matrix (if it has one) using NumPy. Here’s the link to the documentation: https://docs.scipy.org/doc/numpy-1.14.0/reference/generated/numpy.linalg.inv.html.
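As a minimal sketch, this is how `numpy.linalg.inv` could be used. Both matrices are examples I picked for illustration: the first is invertible, the second is not, and for the second NumPy raises `numpy.linalg.LinAlgError`. Because the inverse is computed in floating point, the product is compared to the Identity Matrix with `np.allclose` rather than for exact equality.

```python
import numpy as np

A = np.array([[4.0, 7.0],
              [2.0, 6.0]])

A_inv = np.linalg.inv(A)

# A times its inverse gives the 2x2 Identity Matrix (up to rounding)
is_identity = np.allclose(A @ A_inv, np.eye(2))
print(is_identity)  # True

# The second row is twice the first, so this Matrix has no inverse
singular = np.array([[1.0, 2.0],
                     [2.0, 4.0]])
try:
    np.linalg.inv(singular)
    has_inverse = True
except np.linalg.LinAlgError:
    has_inverse = False

print(has_inverse)  # False
```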

2. Transpose

And lastly, we will discuss the Matrix transpose. This is basically the mirror image of a Matrix along its main diagonal. It is fairly simple to get the transpose of a Matrix: its first column becomes the first row of the transpose, its second column becomes the second row, and so on. An m*n Matrix is thus transformed into an n*m Matrix. Also, the element Aij of A is equal to the element Aji of its transpose. The image below illustrates that:
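In NumPy, the transpose is available through the `.T` attribute. The matrix below is an arbitrary 2-by-3 example:

```python
import numpy as np

A = np.array([[1, 2, 3],
              [4, 5, 6]])  # 2 rows, 3 columns

A_t = A.T

print(A_t.tolist())  # [[1, 4], [2, 5], [3, 6]]
print(A_t.shape)     # (3, 2)

# Element (i, j) of A equals element (j, i) of the transpose
same_element = A[0, 2] == A_t[2, 0]
print(same_element)  # True
```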

Summary

In this post, you learned about the mathematical objects of Linear Algebra that are used in Machine Learning. You also learned how to multiply, divide, add and subtract these mathematical objects. Furthermore, you learned about the most important properties of Matrices and why they enable us to make more efficient computations. On top of that, you learned what the inverse and the transpose of a Matrix are and what you can do with them. Although other parts of Linear Algebra are also used in Machine Learning, this post gave you a proper introduction to the most important concepts.