I suppose most Machine Learning (ML) models are conceived on a whiteboard or a napkin, and born on a laptop. As the fledgling creatures start babbling their first predictions, we’re filled with pride and high hopes for their future abilities. Alas, we know deep down in our hearts that not all of them will be successful, far from it.

A small number fail us quickly as we build them. Others look promising, and demonstrate some level of predictive power. We are then faced with the grim challenge of deploying them in a production environment, where they’ll either prove their legendary valour or die an inglorious death.

One day, your models will rule the world… if you read all these posts and pay attention 😉

In this series of opinionated posts, we’ll discuss how to train ML models and deploy them to production, from humble beginnings to world domination. Along the way, we’ll try to take justified and reasonable steps, fighting the evil forces of over-engineering, Hype Driven Development and “why don’t you just use XYZ?”.

Enjoy the safe comfort of your Data Science sandbox while you can, and prepare yourself for the cold, harsh world of production.

Day 0

So you want to build an ML model. Hmmm. Let’s pause for a minute and consider this:

- Could your business problem be addressed by a high-level AWS service, such as Amazon Rekognition, Amazon Polly, etc.?

- Or by the growing list of applied ML features embedded in other AWS services?

Don’t wave this off: no Machine Learning is easier to manage than no Machine Learning. Figuring out a way to use high-level services could save you weeks of work, maybe months.

If the answer is “yes”

Please ask yourself:

- Why would you go through all the trouble of building a redundant custom solution?

- Are you really “missing features”? What’s the real business impact?

- Do you really need “more accuracy”? How do you know you could reach it?

If you’re unsure, why not run a quick PoC with your own data? These services are fully-managed (no… more… servers) and very easy to integrate in any application. It shouldn’t take a lot of time to figure them out, and you would then have solid data to make an educated decision on whether you really need to train your own model or not.

If these services work well enough for you, congratulations, you’re mostly done! If you decide to build, I’d love to hear your feedback. Please get in touch.

If this answer is “no”

Please ask yourself the question again! Most of us have an amazing capability to twist reality and deceive ourselves 🙂 If the honest answer is really “no”, then I’d still recommend thinking about subprocesses where you could use the high-level services, e.g.:

- using Amazon Translate for supported language pairs and using your own solution for the rest,

- using Amazon Rekognition to detect faces before feeding them to your model,

- using Amazon Textract to extract text before feeding it to your NLP model.
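To make the Rekognition idea a little more concrete, here is a minimal sketch of the "detect faces first, then feed them to your model" step. The helper name `face_boxes` is mine, not part of any SDK, and it takes the client as a parameter so that it only assumes an object with a `detect_faces` method shaped like the Rekognition DetectFaces call (bounding boxes come back as ratios of the image size).

```python
from typing import Any, Dict, List, Tuple


def face_boxes(rekognition: Any, image_bytes: bytes,
               width: int, height: int) -> List[Tuple[int, int, int, int]]:
    """Return pixel boxes (left, top, right, bottom) for each detected face.

    `rekognition` is assumed to behave like a boto3 Rekognition client.
    """
    response: Dict[str, Any] = rekognition.detect_faces(
        Image={"Bytes": image_bytes}, Attributes=["DEFAULT"]
    )
    boxes = []
    for face in response.get("FaceDetails", []):
        box = face["BoundingBox"]
        # Boxes are expressed as ratios and may spill over the image edges,
        # so convert to pixels and clamp to the image dimensions.
        left = max(0, int(box["Left"] * width))
        top = max(0, int(box["Top"] * height))
        right = min(width, int((box["Left"] + box["Width"]) * width))
        bottom = min(height, int((box["Top"] + box["Height"]) * height))
        boxes.append((left, top, right, bottom))
    return boxes
```

With real credentials you would pass `boto3.client("rekognition")` as the first argument, crop the image with each box, and hand the crops to your own model. Your model then only has to solve the part of the problem that the managed service doesn't.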

This isn’t about pitching AWS services (do I look like a salesperson?). I’m simply trying to save you from reinventing the wheel (or parts of the wheel): you should really be focusing on the business problem at hand, instead of building a house of cards that you read about in a blog post or saw at a conference. Yes, it may look great on your resume, and the wheel is initially a fun merry-go-round… and then, it turns into the Wheel of Pain, you’re chained to it and someone else is holding the whip.

Why did I blindly trust that meetup talk? Crom! Help me escape and bash that guy’s skull with his laptop.

Anyway, enough negativity 🙂 You do need a model, let’s move on.

Day 1: one user (you)

We’ll start our journey at the stage where you’ve trained a model on your local machine (or a local dev server), using a popular open source library like scikit-learn, TensorFlow or Apache MXNet. Maybe you’ve even implemented your own algorithm (Data scientists, you devils).

You’ve measured the model’s accuracy using your test set, and things look good. Now you’d like to deploy the model to production in order to check its actual behaviour, run A/B tests, etc. Where to start?

Batch prediction or real-time prediction?

First, you should figure out whether your application requires batch prediction (i.e. collect a large number of data points, process them periodically and store results somewhere), or real-time prediction (i.e. send a data point to a web service and receive an immediate prediction). I bring this up early on because it has a large impact on deployment complexity.

At first sight, real-time prediction sounds more appealing (because… real-time, yeah!), but it also comes with stronger requirements, inherent in web services: high availability, the ability to handle traffic bursts, etc. Batch is more relaxed, as it only needs to run every now and then: as long as you don’t lose data, no one will see if it’s broken in between 😉

Scaling is not a concern right now: all you care about is deploying your model, kicking the tires, running some performance tests, etc. From my experience, you’ve probably taken the shortest route and deployed everything to a single Amazon EC2 instance. Everybody knows a bit of Linux CLI, and you read somewhere that using “IaaS will protect you from evil vendor lock-in”. Ha! EC2 it is, then!

I hear screams of horror and disbelief across the AWS time-space continuum, and maybe some snarky comments along the lines of “oh this is totally stupid, no one actually does that!”. Well, I’ll put money on the fact that this is by far how most people get started. Congrats if you didn’t, but please let me show these good people which way is out before they really hurt themselves 😉

And so, staring into my magic mirror, I see…

Batch prediction

You’ve copied your model, your batch script and your application to an EC2 instance. Your batch script runs periodically as a cron job, and saves predicted data to local storage. Your application loads both the model and initial predicted data at startup, and uses it to do whatever it has to do. It also periodically checks for updated predictions, and loads them whenever they’re available.
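If this is your setup, the fragile part is the hand-off between the cron job and the application: the app must never load a half-written file. Here is a minimal sketch of one way to handle it, using only the standard library. The names `write_predictions` and `PredictionCache`, and the choice of a JSON file, are assumptions for illustration, not something the setup above prescribes.

```python
import json
import os
import tempfile
from typing import Any, Dict, Optional


def write_predictions(path: str, predictions: Dict[str, Any]) -> None:
    """Batch job side: write to a temp file, then swap it into place."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as tmp:
            json.dump(predictions, tmp)
        # os.replace is atomic within one filesystem: readers see either
        # the old file or the new one, never a partial write.
        os.replace(tmp_path, path)
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


class PredictionCache:
    """Application side: reload predictions only when the file has changed."""

    def __init__(self, path: str) -> None:
        self.path = path
        self._signature: Optional[tuple] = None
        self.predictions: Dict[str, Any] = {}

    def refresh(self) -> bool:
        """Reload if the file changed; return True when a reload happened."""
        stat = os.stat(self.path)
        signature = (stat.st_mtime_ns, stat.st_size, stat.st_ino)
        if signature == self._signature:
            return False
        with open(self.path) as f:
            self.predictions = json.load(f)
        self._signature = signature
        return True
```

The application would call `refresh()` once at startup and then on a timer. Note that all of this still lives on one instance's local disk, which is exactly the kind of thing that bites you in week 1.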

Real-time prediction

You’ve embedded the model in your application, loading it at startup and serving predictions using all kinds of data (user input, files, APIs, etc.).

One way or the other, you’re now running predictions in the cloud, and life is good. You celebrate with a pint of stout… or maybe a gluten-free, fair-trade, organic soy milk latte, because it’s 2019 after all.

Week 1: one sorry user (you)

The model predicts nicely, and you’d like to invest more time in collecting more data and adding features. Unfortunately, it didn’t take long for things to go wrong and you’re now bogged down in all kinds of issues (non exhaustive list below):

- Training on your laptop and deploying manually to the cloud is painful and error-prone.

- You accidentally terminated your EC2 instance and had to reinstall everything from scratch.

- You ‘pip install’-ed a Python library and now your EC2 instance is all messed up.

- You had to manually install two other instances for your colleagues, and now you can’t really be sure that you’re all using identical environments.

- Your first load test failed, but you’re not sure what to blame: application? model? the ancient wizards of Acheron?

- You’d like to implement the same algorithm in TensorFlow, and maybe Apache MXNet too: more environments, more deployments. No time for that.

- And of course, everyone’s favorite: Sales have heard that “your product now has AI capabilities”. You’re terrified that they could sell it to a customer and ask you to go live at scale next week.

The list goes on. It would be funny if it wasn’t real (feel free to add your own examples in the comments). All of a sudden, this ML adventure doesn’t sound as exciting, does it? You’re spending most of your time on firefighting, not on building the best possible model. This can’t go on!

I’ve revoked your IAM credentials on ‘TerminateInstances’. Yes, even in the dev account. Any questions?

Week 2: fighting back

Someone on the team watched this really cool AWS video, featuring a new ML service called Amazon SageMaker. You make a mental note of it, but right now, there’s no time to rebuild everything: Sales is breathing down your neck, you have a customer demo in a few days, and you need to harden the existing solution.

Chances are, you don’t have a mountain of data yet: training can wait. You need to focus on making prediction reliable. Here are some solid measures that won’t take more than a few days to implement.

Use the Deep Learning AMI

Maintained by AWS, this Amazon Machine Image comes pre-installed with a lot of tools and libraries that you’ll probably need: open source, NVIDIA drivers, etc. Not having to manage them will save you a lot of time, and will also guarantee that your multiple instances run with the same setup.

The AMI also comes with the Conda dependency and environment manager, which lets you quickly and easily create many isolated environments: that’s a great way to test your code with different Python versions or different libraries, without unexpectedly clobbering everything.

Last but not least, this AMI is free of charge, and just like any other AMI, you can customize it if you *really* have to.

Break the monolith

Your application code and your prediction code have different requirements. Unless you have a compelling reason to do so (ultra low latency might be one), they shouldn’t live under the same roof. Let’s look at some reasons why:

- Deployment: do you want to restart or update your app every time you update the model? Or ping your app to reload it or whatever? No no no no. Keep it simple: when it comes to decoupling, nothing beats building separate services.

- Performance: what if your application code runs best on memory-intensive instances and your ML model requires a GPU? How will you handle that trade-off? Why would you favour one or the other? Separating them lets you pick the best instance type for each use case.

- Scalability: what if your application code and your model have different scalability profiles? It would be a shame to scale out on GPU instances because a small piece of your application code is running hot… Again, it’s better to keep things separated: this will help you make the most appropriate scaling decisions and reduce cost.

Now, what about pre-processing / post-processing code, i.e. actions that you need to take on the data just before and just after predicting? Where should it go? It’s hard to come up with a definitive answer: I’d say that model-independent actions (formatting, logging, etc.) should stay in the application, whereas model-dependent actions (feature engineering) should stay close to the model to avoid deployment inconsistencies.
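One way to read "stay close to the model" is to ship the feature engineering and the model as a single unit, so that they can't drift apart between deployments. Here is a minimal sketch of that idea. The `PredictionPipeline` class is hypothetical, and it only assumes that the model exposes a `predict` method taking a list of feature rows, as scikit-learn estimators do.

```python
from typing import Any, Callable, Dict, List, Sequence


class PredictionPipeline:
    """Keep model-dependent feature engineering next to the model itself."""

    def __init__(self,
                 featurize: Callable[[Dict[str, Any]], Sequence[float]],
                 model: Any) -> None:
        self.featurize = featurize  # must match what was used at training time
        self.model = model          # any object exposing predict(rows)

    def predict(self, records: List[Dict[str, Any]]) -> List[Any]:
        # The application sends raw records; feature rows are built here,
        # inside the prediction code, never in the application.
        rows = [list(self.featurize(record)) for record in records]
        return list(self.model.predict(rows))
```

The application keeps its own formatting and logging, and only ever sees raw records going in and predictions coming out. If you change a feature, you redeploy the pipeline, not the app.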

Build a prediction service

Separating the prediction code from the application code doesn’t have to be painful, and you can reuse solid, scalable tools to build a prediction service. Let’s look at some options:

- Scikit-learn: when it comes to building web services in Python, I’m a big fan of Flask. It’s neat, simple and it scales well. No need to look further IMHO. A minimal service is little more than a route that takes the input data and calls the model’s predict method.

- TensorFlow: no coding required! You can use TensorFlow Serving to serve predictions at scale. Once you’ve trained your model and saved it to the proper format, all it takes to serve predictions is:

    docker run -p 8500:8500 \
      --mount type=bind,source=/tmp/myModel,target=/models/myModel \
      -e MODEL_NAME=myModel -t tensorflow/serving &

- Apache MXNet: in a similar way, Apache MXNet provides a model server, able to serve MXNet and ONNX models (the latter is a common format supported by PyTorch, Caffe2 and more). It can either run as a stand-alone application, or inside a Docker container.

Both model servers are pre-installed on the Deep Learning AMI: that’s another reason to use it. To keep things simple, you could leave your pre/post-processing in the application and invoke the model deployed by the model server. A word of warning, however: these model servers implement neither authentication nor throttling, so please make sure not to expose them directly to Internet traffic.

- Anything else: if you’re using another environment (say, custom code) or non-web architectures (say, message passing), the same pattern should apply: build a separate service that can be deployed and scaled independently.
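For the Flask option, here is a minimal sketch of what such a prediction service could look like. The `create_app` factory, the `/predict` and `/ping` routes and the `instances` payload key are my own choices for illustration. The only assumption about the model is that it exposes a scikit-learn-style `predict` method.

```python
from typing import Any

from flask import Flask, jsonify, request


def create_app(model: Any) -> Flask:
    """Build a small prediction service around any object exposing predict()."""
    app = Flask(__name__)

    @app.route("/ping", methods=["GET"])
    def ping():
        # Health check, handy once something sits in front of the service.
        return jsonify(status="ok")

    @app.route("/predict", methods=["POST"])
    def predict():
        payload = request.get_json(silent=True)
        if not isinstance(payload, dict) or "instances" not in payload:
            return jsonify(error="expected a JSON body with an 'instances' list"), 400
        predictions = model.predict(payload["instances"])
        # scikit-learn usually returns NumPy arrays: convert them for JSON.
        if hasattr(predictions, "tolist"):
            predictions = predictions.tolist()
        return jsonify(predictions=list(predictions))

    return app
```

You would typically load the model once at startup (for example with `joblib.load` on a file saved after training) and pass it to `create_app`. Passing the model in, rather than loading it inside the route, also makes the service easy to test with a stub. The same warning applies as for the model servers: there's no authentication or throttling here, so don't expose it directly to the Internet.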

(Optional) Containerize your application

Since you’ve decided to split your code, I would definitely recommend that you use the opportunity to package the different pieces in Docker containers: one for training, one for prediction, one (or more) for the application. It’s not strictly necessary at this stage, but if you can spare the time, I believe the upfront investment is worth it.

If you’ve been living under a rock or never really paid attention to containers, now’s probably the time to catch up :) I highly recommend running the Docker tutorial, which will teach you everything you need to know for our purpose.

Containers make it easy to move code across different environments (dev, test, prod, etc.) and instances. They solve all kinds of dependency issues, which tend to pop up even if you’re only managing a small number of instances. Later on, containers will also be a prerequisite for larger-scale solutions such as Docker clusters or Amazon SageMaker.

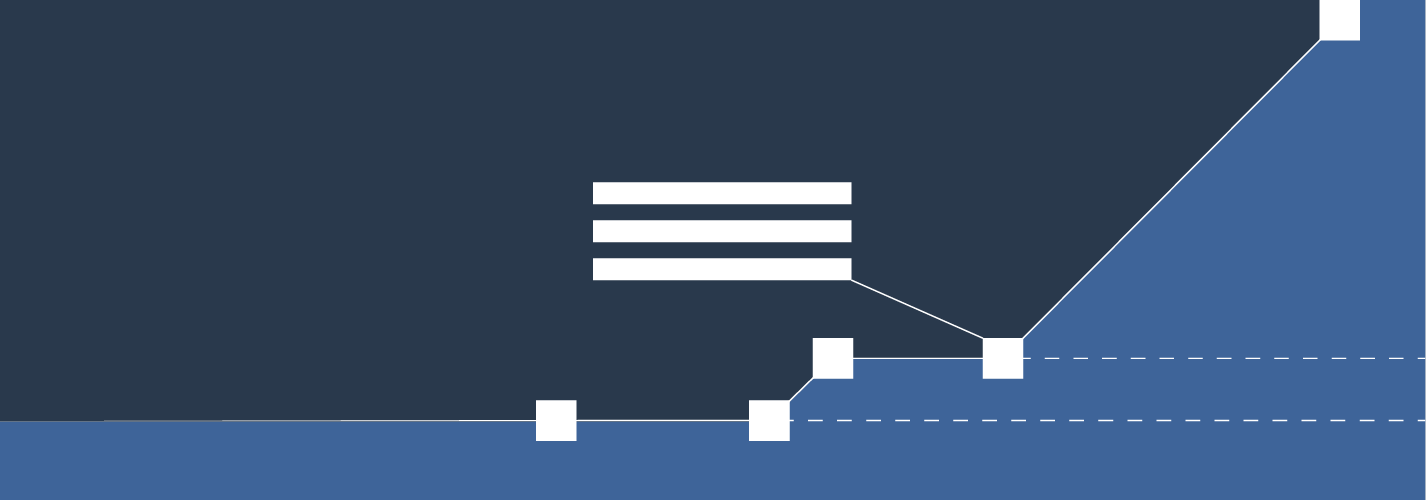

End of week 2

After a rough start, things are looking up!

- The Deep Learning AMI provides a stable, well-maintained foundation to build on.

- Containers help you move and deploy your application with much less infrastructure drama than before.

- Prediction now lives outside of your application, making testing, deployment and scaling simpler.

- If you can use them, model servers save you most of the trouble of writing a prediction service.

Still, don’t get too excited. Yes, we’re back on track and ready for bigger things, but there’s still a ton of work to do. What about scaling prediction to multiple instances, high availability, managing cost, etc.? And what should we do when mountains of training data start piling up? Face it, we’ve barely scratched the surface.

“Old fool! Load balancers! Auto Scaling! Automation!”, I hear you cry. Oh, you mean you’re in a hurry to manage infrastructure again? I thought you guys wanted to do Machine Learning 😉

On this bombshell, it’s time to call it a day. In the next post, we’ll start comparing and challenging options for larger-scale ML training: EC2 vs. ECS/EKS vs SageMaker. An epic battle, no doubt.