Photo by Hans-Peter Gauster on Unsplash

What’s not apparent to many practitioners and researchers in Deep Learning is that the rich variety of methods developed over the past several years are relevant to systems that have different kinds of goals. Deep Learning arose from the Machine Learning community, so it is natural to think of DL networks as systems suitable for performing predictions. Predictions are unfortunately too broad a goal and thus leads to a lack of specificity as to the appropriate methods to fine tune a solution. What I mean here is that you can cast almost any intelligent goals as that of making a prediction. However, to be successful, one has to a minimum understand what kind of prediction is being made and this leads towards a more pragmatic understanding of whether the right tools for the job are used.

DL networks in its most basic essence are perturbative systems. They work by incrementally and gradually adjusting their initial state to eventually arrive at a targeted goal. In the most common usage of DL, that is of supervised learning, the objective is to fit the pre-labeled training data towards minimizing a predictive loss function. DL differs from other machine learning methods such as SVM and Decision Trees in its constituent components. As a consequence of this difference, the gradual adjustments (or more generally, the curriculum) are performed differently. As an example, SVM requires the entire training set to be in memory. Decision Trees apply either bagging, boosting or stacking ensemble methods. DL methods employ Stochastic Gradient Descent (SGD) methods, however a practitioner employs all kinds of other methods to accelerate convergence as well as to improve generalization.

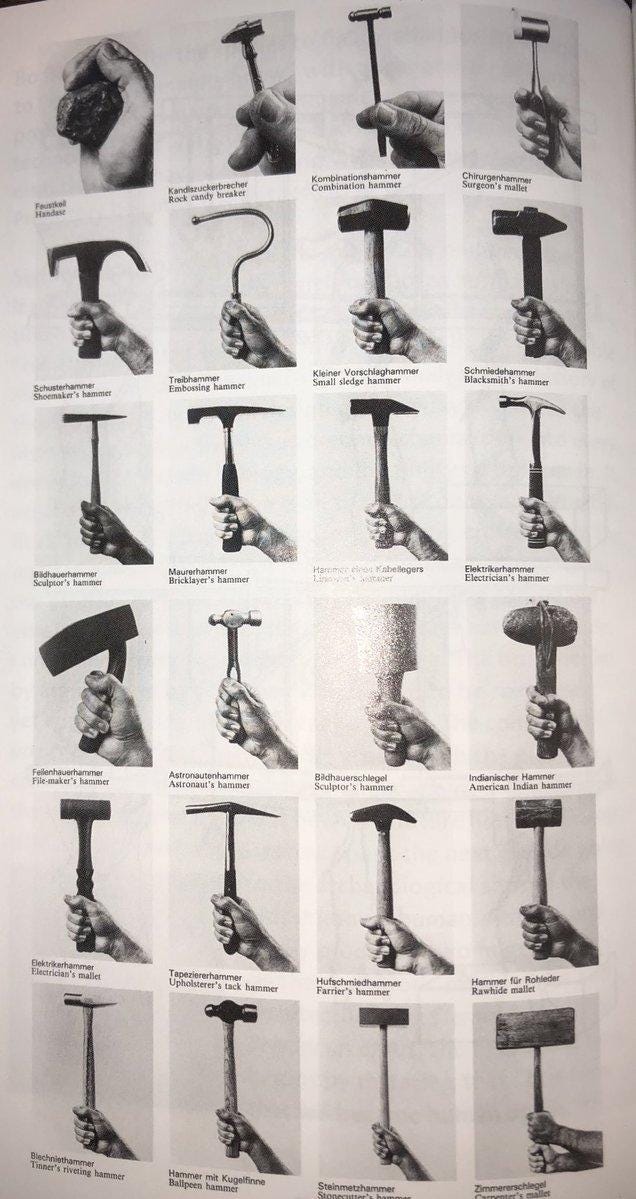

The point that we often miss is that convergence isn’t the end goal, rather the goal is to train a mass of computational elements to achieve a specific task. Mores specifically, a specific kind of prediction. Unfortunately, not all predictions are equal and there are many kinds of predictions. To make this clearer, classifying images into a fixed set of categories is a different kind of prediction that translating English to German. Both are predictive system, but this categorization glosses over the specific architectural nuisances of each kind of system. If every problem is a nail, then a hammer is your tool. In reality, there are plenty of kinds of hammers for different contexts:

Hans Hollein, Hammer’s (Man TransFORMS, 1976)

We can all appreciate this notion that you want to use the right tool for the job. However, the attractiveness of Deep Learning is that it appears to be capable enough to be used for about any kind of cognitive job. This is a major misconception in that there are many kinds of cognition and each kind works very differently.

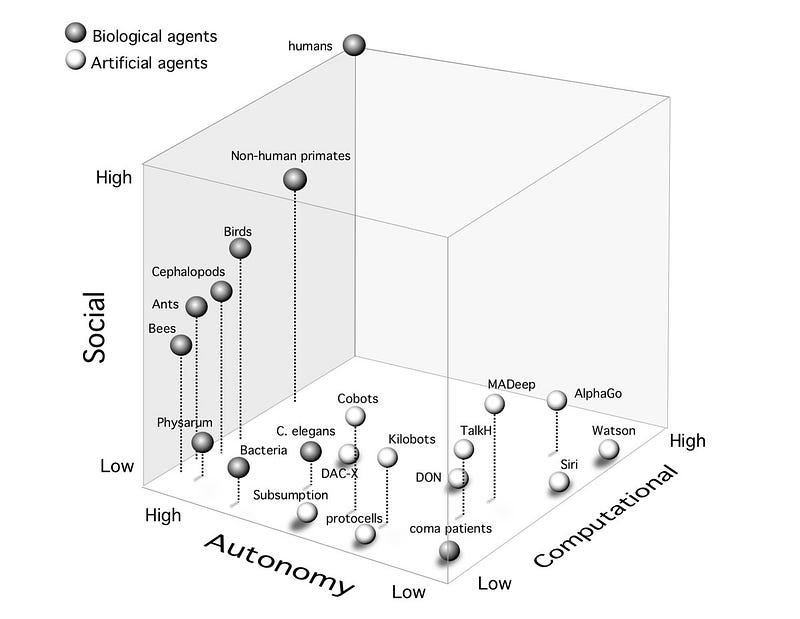

To illustrate this, I wrote previously that there are three major dimensions of intelligence that the field of AI seeks to solve. These are (1) Computational intelligence (2) Autonomous intelligence and (3) Social intelligence.

https://arxiv.org/pdf/1705.11190.pdf

In the above graph, one will notice that artificial systems are very high in computational intelligence. However, in two other intelligence dimensions, artificial systems are not very capable. The methods required for other kinds of intelligence are obviously going to be very different. They might be share common functionality, but to assume that they employ the same functionality would be a gross mistake.

The one error in the above graph is that it incorrectly depicts the human having higher computational intelligence than AlphaGo. For the narrow task that AlphaGo is designed to solve, a human would have lower intelligence. It would be like comparing a human with a hand calculator to do long division. Systems will zero autonomy and designed for narrow tasks will certainly have higher computational intelligence.

There are many researchers who have the unsubstantiated belief that to achieve AGI, one would have to reach the edges of massive computation. Marcus Hutter’s AIXI formulation is one such approach. AIXI is an incomputable theory of intelligence that borrows from Solomonoff’s induction, which is also incomputable. These approaches make an implicit assumption that because we haven’t achieved AI that the characteristics of a solution may be incomputable. This theory assumes that general intelligence is due exclusively to computational intelligence. It’s a theory that has great appeal for mathematicians, but it is obviously non-sensical. Unbounded rationality is a fantasy that we should strive to ween ourself away from.

Unsupervised learning and meta-learning are actually another example of a non-sensical notion of solving AI. The way evolution solves cognition is through the development of stepping stone skills. What this implies is that it depends entirely on the kind of problem that is being solved. Is the unsupervised or meta-learning task for prediction, autonomous control or generative design? Each kind of problem requires different base skills and thus there’s some kind of basecamp that can be targeted rather than this fanciful notion of being able to bootstrap from nothing. Bootstrap magic methods are a fiction that appeals to researchers who believe in salvation through mathematical magic. Valuable cognitive behaviors evolve from the demands of the environment, any correlation to an an abstract mathematical principle is by chance and not causal to some yet do discover abstract mathematical formulation.

Because there are many kind of intelligences and we know that there are many kinds of predictions that can be made, we must select the DL architecture that is most suited for the kind of prediction task. Note that intelligence is a high level goal (i.e. make something autonomous) and predictions are goals at a more pragmatic and manageable level (i.e. classify dog breeds).

I will therefore like to propose several areas of study that involve Deep Learning methodology. These areas of study will borrow from a common toolbox but also have their own tools that are unique and fine tuned to the specific problem that is being addressed.

Generative Computational Modeling — Numerical methods required to simulate complex systems (example: Weather and Economics) are excruciating difficult to get right and made more precise. Deep Learning generative models appear to be able to scale to high complexity. This area of investigation involves developing techniques to generate higher fidelity models of reality. This is not Artificial Intelligence in the classic sense. This is computational intelligence that is currently beyond the capabilities of humans. Humans on their own cannot generate high fidelity simulations of physical systems like non-linear fluids. What is being proposed here is to employ DL methods to computational methods. An example of this is described in Quanta magazine:

DeepMind has recently revealed the successful use of generative models to predict protein folding:

AlphaFold trained a generative network to invent new protein fragments that were used to incrementally improve the score of the proposed protein structure. This use of generative models was so impressive that it prompted the following reaction:

The fact that the collective powers of Novartis, Merck, Pfizer, etc, with their hundreds of thousands (~million?) of employees, let an industrial lab that is a complete outsider to the field, with virtually no prior molecular sciences experience, come in and thoroughly beat them on a problem that is, quite frankly, of far greater importance to pharmaceuticals than it is to Alphabet.

Complex Predictive Systems — Generative models attempt to simulate systems from bottom up processes. Contrast this to predictive systems that attempt to disentangle observations of the world to create more abstract predictive models. The tools in this set are geared towards slicing and dicing observations and then generating causal models that can drive better predictions of bulk measures. It’s a top down approach that learn from descriptive measures of reality and employs these measure to develop causal models that drive future predictions.

If this activity has a familiar ring to it, this is because this is the kind of work that Data Scientists are supposed to perform. That is, trying to make sense of data and explaining the results of that explorations. Aspects of this approach involve advanced reasoning capabilities like Logical Induction. These leverage superhuman reasoning capabilities that already exist in automated proof systems. This is the kind of system that attempts to automate data analysis (see: Automated Statistician) One example of this kind of system (albeit, without causal modeling and in a narrow domain) is AlphaGo.

There are also contexts that DL can make predictions that defy explanation. This has been uncovered in studies involving images of the retina. A paper from Google shows that DL can predict cardiovascular risk simply from images of the retina. Here’s an interesting Twitter thread that spawned a lot of discussion on how gender can also be predicted with surprising accuracy.

Adaptive Imitation — Deep Learning systems are like natural systems in their ability to mimic behavior. What DL systems do very well is not only do they mimic behavior well, they do so in an adaptive manner. So when you employ DL to mimic human motion, human poses, animal motion and controlled flight. One example of this is DeepMimic:

Waymo has been employing an imitation based system to improve the driving of their self-driving car fleet:

Imitation leads to more natural behavior that is important for safe interaction with other vehicles, pedestrians, and cyclists that share the road. However there are limitations, so many new techniques are needed to compensate for the deficiencies of a pure imitation based approach.

Generative Design Exploration — Generative capabilities can be coupled with constraint systems to allow the massive exploration of different designs. An excellent example of this can be found in the DeepBlocks application that is used for real estate development:

Autodesk is a company that is leading this area of generative design exploration:

As well as Nvidia with its research on rendering realistic video from GANs:

Decision Support Systems — The motivation of the Deep Learning Canvas is to explore areas where human heuristics can fail. That is, we can identify cognitive tasks that exhibit information overload, time sensitive actions, too much to recall or not enough meaning. In these tasks we can leverage DL methods to reduce the load. For example, OCR based systems that translate images of pages of books into text thus allowing books to be indexable and thus searchable. The same can be done using voice recognition, to index meetings or lectures, allowing content to be searchable. The simple surfacing of previously unsearchable content leads to better support for decision processes. This idea can be expanded even further so as to support more advanced decision making:

These five areas are all advanced new capabilities that could not have happened without the arrival of Deep Learning. In my previous retrospective, I suggested that AI chip companies specialize in a niche to survive. One can thus see a product strategy that focuses on any one of these areas. So as an example, Generative Computational Modeling is an area that a traditional HPC user may be interested in. Therefore, tooling should be developed that is complimentary to the traditional HPC tool chain. This will encourage adoption and facilitate migration.

It would be unrealistic for a company to specialize in all five areas. It’s too broad and doing so would dilute one’s focus. Nobody wants to be a jack-of-all-trades and master of none. The marketplace allows firms that specialize an opportunity to survive in a niche. Therefore, any company focusing on a DL product must specialize in any one of these five areas. Furthermore, each of these five areas support different verticals. So as an example, Generative Modeling is something valuable in the architecture industry.

I would would like to point out that the methods developed in these spaces are not necessarily the methods that require Artificial General Intelligence (AGI). As I’ve alluded to previously in “Three Cognitive Dimensions”, developing autonomous intelligence requires a different toolbox from that found in generative models, predictive models, imitation based models, generative design and decision support systems. These five are all low hanging fruit that can be applied with today’s current technology.

AGI is an entirely different kind of capability that may draw some inspiration from these five areas, but will require the invention of new cognitive capabilities that are outside of what exists. To understand this, I’ve created a capability maturity model:

Deep Learning is at Level Two. The application classes described here are within the realm of applicability.

Further Reading

Exploit Deep Learning: The Deep Learning AI Playbook