No, they are not the same. If machine learning is just glorified statistics, then architecture is just glorified sand-castle construction.

I am, to be quite honest, tired of hearing this debate reiterated on social media and within my University on a near-daily basis. Usually, this is accompanied by somewhat vague statements to explain away the issue. Both sides are guilty of doing this. I hope that by the end of this article you will have a more informed position on these somewhat vague terms.

The Argument

Contrary to popular belief, machine learning has been around for several decades. It was initially shunned due to its large computational requirements and the limitations of computing power present at the time. However, machine learning has seen a revival in recent years due to the preponderance of data stemming from the information explosion.

So, if machine learning and statistics are synonymous with one another, why are we not seeing every statistics department in every university closing down or transitioning to being a ‘machine learning’ department? Because they are not the same!

There are several vague statements that I often hear on this topic, the most common one being something along these lines:

“The major difference between machine learning and statistics is their purpose. Machine learning models are designed to make the most accurate predictions possible. Statistical models are designed for inference about the relationships between variables.”

Firstly, we must understand that statistics and statistical models are not the same. Statistics is the mathematical study of data. You cannot do statistics unless you have data. A statistical model is a model for the data that is used either to infer something about the relationships within the data or to create a model that is able to predict future values. Often, these two go hand-in-hand.

So there are actually two things we need to discuss: firstly, how is statistics different from machine learning, and secondly, how are statistical models different from machine learning.

To make this slightly more explicit, there are lots of statistical models that can make predictions, but predictive accuracy is not their strength.

Likewise, machine learning models provide various degrees of interpretability, from the highly interpretable lasso regression to impenetrable neural networks, but they generally sacrifice interpretability for predictive power.

From a high-level perspective, this is a good answer. Good enough for most people. However, there are cases where this explanation leaves us with a misunderstanding about the differences between machine learning and statistical modeling. Let us look at the example of linear regression.

Statistical Models vs Machine learning — Linear Regression Example

It seems to me that the similarity of methods that are used in statistical modeling and in machine learning has caused people to assume that they are the same thing. This is understandable, but simply not true.

The most obvious example is the case of linear regression, which is probably the major cause of this misunderstanding. Linear regression is a statistical method, we can train a linear regressor and obtain the same outcome as a statistical regression model aiming to minimize the squared error between data points.

We see that in one case we do something called ‘training’ the model, which involves using a subset of our data, and we do not know how well the model will perform until we ‘test’ this data on additional data that was not present during training, called the test set. The purpose of machine learning, in this case, is to obtain the best performance on the test set.

For the statistical model, we find a line that minimizes the mean squared error across all of the data, assuming the data to be a linear regressor with some random noise added, which is typically Gaussian in nature. No training and no test set are necessary. For many cases, especially in research (such as the sensor example below), the point of our model is to characterize the relationship between the data and our outcome variable, not to make predictions about future data. We call this procedure statistical inference, as opposed to prediction. However, we can still use this model to make predictions, and this may be your primary purpose, but the way the model is evaluated will not involve a test set and will instead involve evaluating the significance and robustness of the model parameters.

The purpose of (supervised) machine learning is obtaining a model that can make repeatable predictions. We typically do not care if the model is interpretable, although I would personally recommend always testing to ensure that model predictions do make sense. Machine learning is all about results, it is likely working in a company where your worth is characterized solely by your performance. Whereas, statistical modeling is more about finding relationships between variables and the significance of those relationships, whilst also catering for prediction.

To give a concrete example of the difference between these two procedures, I will give a personal example. By day, I am an environmental scientist and I work primarily with sensor data. If I am trying to prove that a sensor is able to respond to a certain kind of stimuli (such as a concentration of a gas), then I would use a statistical model to determine whether the signal response is statistically significant. I would try to understand this relationship and test for its repeatability so that I can accurately characterize the sensor response and make inferences based on this data. Some things I might test are whether the response is, in fact, linear, whether the response can be attributed to the gas concentration and not random noise in the sensor, etc.

In contrast, I can also get an array of 20 different sensors, and I can use this to try and predict the response of my newly characterized sensor. This may seem a bit strange if you do not know much about sensors, but this is currently an important area of environmental science. A model with 20 different variables predicting the outcome of my sensor is clearly all about prediction, and I do not expect it to be particularly interpretable. This model would likely be something a bit more esoteric like a neural network due to non-linearities arising from chemical kinetics and the relationship between physical variables and gas concentrations. I would like the model to make sense, but as long as I can make accurate predictions I would be pretty happy.

If I am trying to prove the relationship between my data variables to a degree of statistical significance so that I can publish it in a scientific paper, I would use a statistical model and not machine learning. This is because I care more about the relationship between the variables as opposed to making a prediction. Making predictions may still be important, but the lack of interpretability afforded by most machine learning algorithms makes it difficult to prove relationships within the data (this is actually a big problem in academic research now, with researchers using algorithms that they do not understand and obtaining specious inferences).

Source: Analytics Vidhya

It should be clear that these two approaches are different in their goal, despite using similar means to get there. The assessment of the machine learning algorithm uses a test set to validate its accuracy. Whereas, for a statistical model, analysis of the regression parameters via confidence intervals, significance tests, and other tests can be used to assess the model’s legitimacy. Since these methods produce the same result, it is easy to see why one might assume that they are the same.

Statistics vs Machine Learning — Linear Regression Example

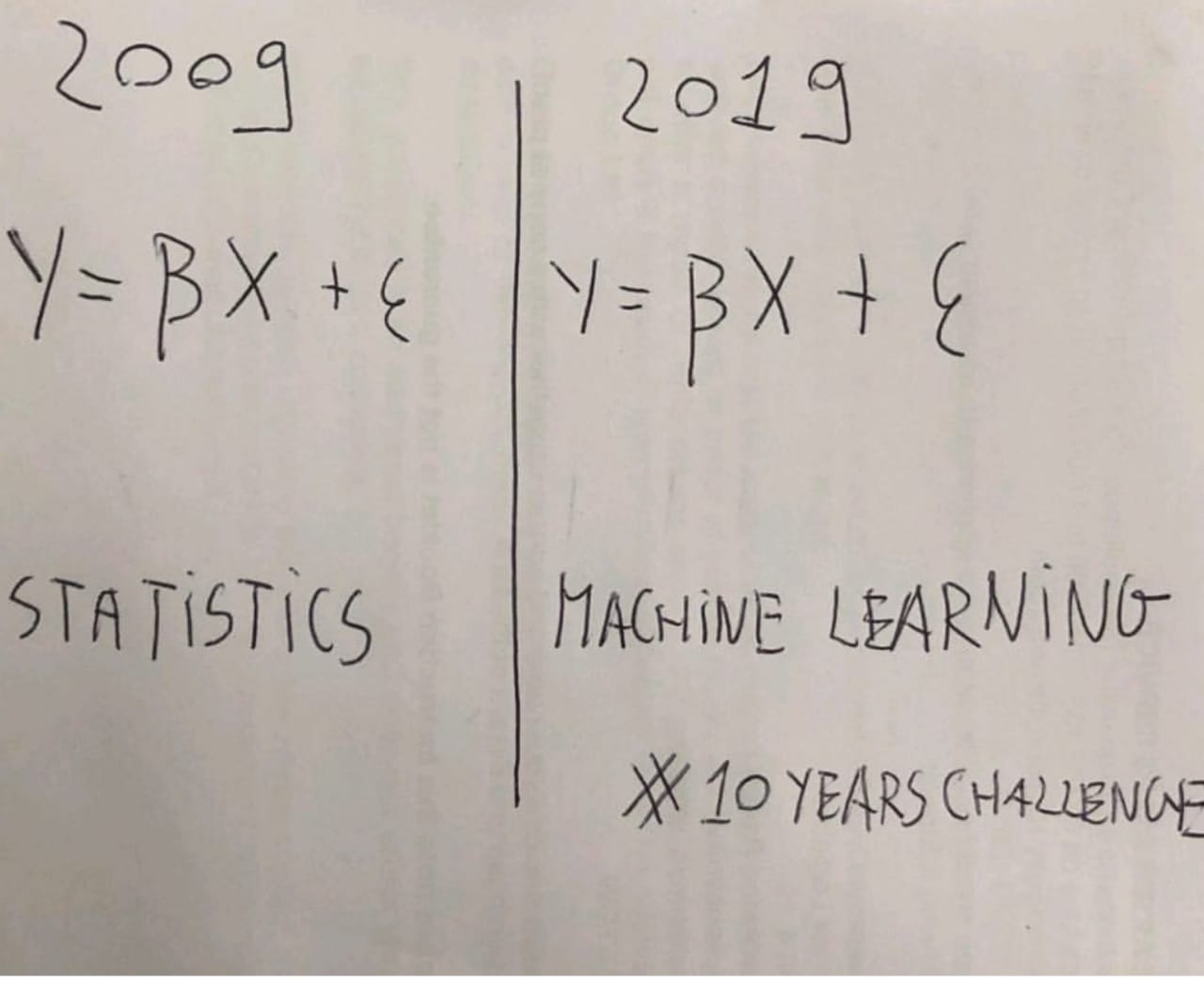

I think this misconception is quite well encapsulated in this ostensibly witty 10-year challenge comparing statistics and machine learning.

However, conflating these two terms based solely on the fact that they both leverage the same fundamental notions of probability is unjustified. For example, if we make the statement that machine learning is simply glorified statistics based on this fact, we could also make the following statements.

Physics is just glorified mathematics.

Zoology is just glorified stamp collection.

Architecture is just glorified sand-castle construction.

These statements (especially the last one) are pretty ridiculous and all based on this idea of conflating terms that are built upon similar ideas (pun intended for the architecture example).

In actuality, physics is built upon mathematics, it is the application of mathematics to understand physical phenomena present in reality. Physics also includes aspects of statistics, and the modern form of statistics is typically built from a framework consisting of Zermelo-Frankel set theory combined with measure theory to produce probability spaces. They both have a lot in common because they come from a similar origin and apply similar ideas to reach a logical conclusion. Similarly, architecture and sand-castle construction probably have a lot in common — although I am not an architect so I cannot give an informed explanation — but they are clearly not the same.

To give you a scope of how far this debate goes, there is actually a paper published in Nature Methods which outlines the difference between statistics and machine learning. This idea might seem laughable, but it is kind of sad that this level of discussion is necessary.

Before we go on further, I will quickly clear up two other common misconceptions that are related to machine learning and statistics. These are that AI is different from machine learning and that data science is different from statistics. These are fairly uncontested issues so it will be quick.

Data Science is essentially computational and statistical methods that are applied to data, these can be small or large data sets. This can also include things like exploratory data analysis, where the data is examined and visualized to help the scientist understand the data better and make inferences from it. Data science also includes things like data wrangling and preprocessing, and thus involves some level of computer science since it involves coding, setting up connections and pipelines between databases, web servers, etc.

You do not necessarily need to use a computer to do statistics, but you cannot really do data science without one. You can once again see that although data science uses statistics, they are clearly not the same.

Similarly, machine learning is not the same as artificial intelligence. In fact, machine learning is a subset of AI. This is pretty obvious since we are teaching (‘training’) a machine to make generalizable inferences about some type of data based on previous data.

Machine Learning is built upon Statistics

Before we discuss what is different about statistics and machine learning, let us discuss first the similarities. We have already touched on this somewhat in the previous sections.

Machine learning is built upon a statistical framework. This should be overtly obvious since machine learning involves data, and data has to be described using a statistical framework. However, statistical mechanics, which is expanded into thermodynamics for large numbers of particles, is also built upon a statistical framework. The concept of pressure is actually a statistic, and temperature is also a statistic. If you think this sounds ludicrous, fair enough, but it is actually true. This is why you cannot describe the temperature or pressure of a molecule, it is nonsensical. Temperature is the manifestation of the average energy produced by molecular collisions. For a large enough amount of molecules, it makes sense that we can describe the temperature of something like a house or the outdoors.

Would you concede that thermodynamics and statistics are the same? No, thermodynamics uses statistics to help us understand the interaction of work and heat in the form of transport phenomena.

In fact, thermodynamics is built upon many more items apart from just statistics. Similarly, machine learning draws upon a large number of other fields of mathematics and computer science, for example:

- ML theory from fields like mathematics & statistics

- ML algorithms from fields like optimization, matrix algebra, calculus

- ML implementations from computer science & engineering concepts (e.g. kernel tricks, feature hashing)

When one starts coding on Python and whips out the sklearn library and starts using these algorithms, a lot of these concepts are abstracted so that it is difficult to see these differences. In this case, this abstraction has led to a form of ignorance with respect to what machine learning actually involves.

Statistical Learning Theory — The Statistical Basis of Machine Learning

The major difference between statistics and machine learning is that statistics is based solely on probability spaces. You can derive the entirety of statistics from set theory, which discusses how we can group numbers into categories, called sets, and then impose a measure on this set to ensure that the summed value of all of these is 1. We call this a probability space.

Statistics makes no other assumptions about the universe except these concepts of sets and measures. This is why when we specify a probability space in very rigorous mathematical terms, we specify 3 things.

A probability space, which we denote like this, (Ω, F, P), consists of three parts:

- A sample space, Ω, which is the set of all possible outcomes.

- A set of events, F, where each event is a set containing zero or more outcomes.

- The assignment of probabilities to the events, P; that is, a function from events to probabilities.

Machine learning is based on statistical learning theory, which is still based on this axiomatic notion of probability spaces. This theory was developed in the 1960s and expands upon traditional statistics.

There are several categories of machine learning, and as such I will only focus on supervised learning here since it is the easiest to explain (although still somewhat esoteric as it is buried in math).

Statistical learning theory for supervised learning tells us that we have a set of data, which we denote as S = {(xᵢ,yᵢ)}. This basically says that we a data set of n data points, each of which is described by some other values we call features, which are provided by x, and these features are mapped by a certain function to give us the value y.

It says that we know that we have this data, and our goal is to find the function that maps the x values to the y values. We call the set of all possible functions that can describe this mapping as the hypothesis space.

To find this function we have to give the algorithm some way to ‘learn’ what is the best way to approach the problem. This is provided by something called a loss function. So, for each hypothesis (proposed function) that we have, we need to evaluate how that function performs by looking at the value of its expected risk over all of the data.

The expected risk is essentially a sum of the loss function multiplied by the probability distribution of the data. If we knew the joint probability distribution of the mapping, it would be easy to find the best function. However, this is in general not known, and thus our best bet is to guess the best function and then empirically decide whether the loss function is better or not. We call this the empirical risk.

We can then compare different functions and look for the hypothesis that gives us the minimum expected risk, that is, the hypothesis that gives the minimal value (called the infimum) of all hypotheses on the data.

However, the algorithm has a tendency to cheat in order to minimize its loss function by overfitting to data. This is why after learning a function based on the training set data, that function is validated on a test set of data, data that did not appear in the training set.

The nature of how we have just defined machine learning introduced the problem of overfitting and justified the need for having a training and test set when performing machine learning. This is not an inherent feature of statistics because we are not trying to minimize our empirical risk.

A learning algorithm that chooses the function that minimizes the empirical risk is called empirical risk minimization.

Examples

Take the simple case of linear regression. In the traditional sense, we try to minimize the error between some data in order to find a function which can be used to describe the data. In this case, we typically use the mean squared error. We square it so that positive and negative errors do not cancel each other out. We can then solve for the regression coefficients in a closed form manner.

It just so happens, that if we take our loss function to be the mean squared error and perform empirical risk minimization as espoused by statistical learning theory, we end up with the same result as traditional linear regression analysis.

This is just because these two cases are equivalent, in the same way that performing maximum likelihood on this same data will also give you the same result. Maximum likelihood has a different way of achieving this same goal, but nobody is going to argue and say that maximum likelihood is the same as linear regression. The simplest case clearly does not help to differentiate these methods.

Another important point to make here is that in traditional statistical approaches, there is no concept of a training and test set, but we do use metrics to help us examine how our model performs. So the evaluation procedure is different but both methods are able to give us results that are statistically robust.

A further point is that the traditional statistic approach here gave us the optimal solution because the solution had a closed form. It did not test out any other hypotheses and converge to a solution. Whereas, the machine learning method tried a bunch of different models and converged to the final hypothesis, which aligned with the outcome from the regression algorithm.

If we had used a different loss function, the results would not have converged. For example, if we had used hinge loss (which is not differentiable using standard gradient descent, so other techniques like proximal gradient descent would be required) then the results would not be the same.

A final comparison can be made by considering the bias of the model. One could ask the machine learning algorithm to test linear models, as well as polynomial models, exponential models, and so on, to see if these hypotheses fit the data better given our a priori loss function. This is akin to increasing the relevant hypothesis space. In the traditional statistical sense, we select one model and can evaluate its accuracy, but cannot automatically make it select the best model from 100 different models. Obviously, there is always some bias in the model which stems from the initial choice of algorithm. This is necessary since finding an arbitrary function that is optimal for the dataset is an NP-hard problem.

So which is better?

This is actually a silly question. In terms of statistics vs machine learning, machine learning would not exist without statistics, but machine learning is pretty useful in the modern age due to the abundance of data humanity has access to since the information explosion.

Comparing machine learning and statistical models is a bit more difficult. Which you use depends largely on what your purpose is. If you just want to create an algorithm that can predict housing prices to a high accuracy, or use data to determine whether someone is likely to contract certain types of diseases, machine learning is likely the better approach. If you are trying to prove a relationship between variables or make inferences from data, a statistical model is likely the better approach.

Source: StackExchange

If you do not have a strong background in statistics, you can still study machine learning and make use of it, the abstraction offered by machine learning libraries makes it pretty easy to use them as a non-expert, but you still need some understanding of the underlying statistical ideas in order to prevent models from overfitting and giving specious inferences.

Where can I learn more?

If you are interested in delving more into statistical learning theory, there are many books and university courses on the subject. Here are some lecture courses I recommend:

If you are interested in delving more into probability spaces then I warn you in advance it is pretty heavy in mathematics and is typically only covered in graduate statistics classes. Here are some good sources on the topic: