
A simple English explanation, minus the math, stats & code

Deep learning has created a perfect dichotomy.

On the one hand, we have data science practitioners raving about it, and everyone and their colleague jumping in to learn it and make a career out of this supposedly game-changing analytics technology.

And then there is everyone else, wondering what the buzz is about. With a multitude of analytics technologies touted as the panacea for business problems, one wonders what this additional ‘cool thing’ brings to the table.

Photo by Sandro Schuh on Unsplash

For people on the business side of things, there are no easy avenues to a simple, intuitive understanding. A Google search either entangles one in the deep layers of neural networks or bowls one over with math symbols. Online courses on the subject haunt one with a bevy of statistics terms.

One eventually gives in and ends up taking all of the hype at face value. Here’s an attempt to demystify and democratize the understanding of deep learning (DL), in simple English and in under 5 minutes. I promise not to show you the clichéd pictures of human brains, or a spider web of networks 🙂

So, just what is deep learning?

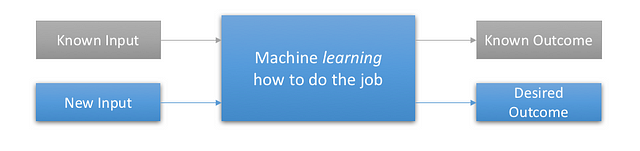

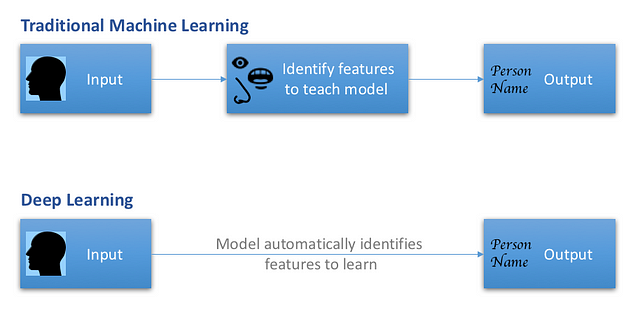

Let’s start with the basic premise of machine learning (ML). The aim is to teach machines how to reach a desired outcome when presented with some input. Say, when shown the past six months’ stock prices, predict tomorrow’s value. Or, when presented with a face, identify the person.

The machine learns how to do things like this, obviating the need for laborious instructions every time.

Deep learning is just a disciple (or, discipline) of machine learning, but with a higher IQ. It does the same thing as above, but in a much smarter way.

And, how is it different from machine learning?

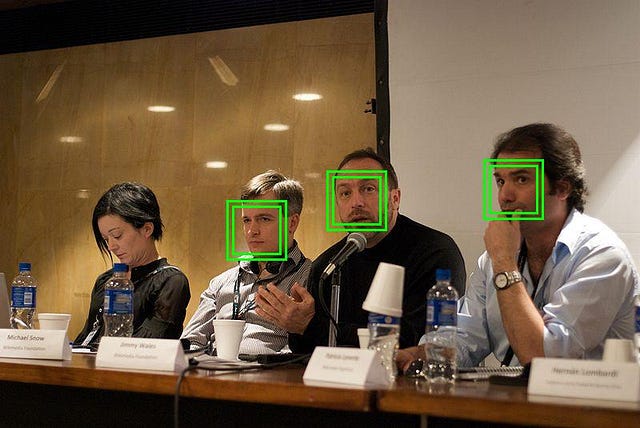

Let me explain this by using a simple example of face detection.

Pic: “Jimmy answering questions” by Beatrice Murch; derivative work by Sylenius, licensed under CC BY 2.0

Traditional face recognition using machine learning involves first manually identifying noticeable features on a human face (such as eyes, eyebrows and chin). Then, a machine is trained to associate every known face with these specific features. Now show it a new face, and the machine extracts these preset features and compares them to find the best match. This works moderately well.

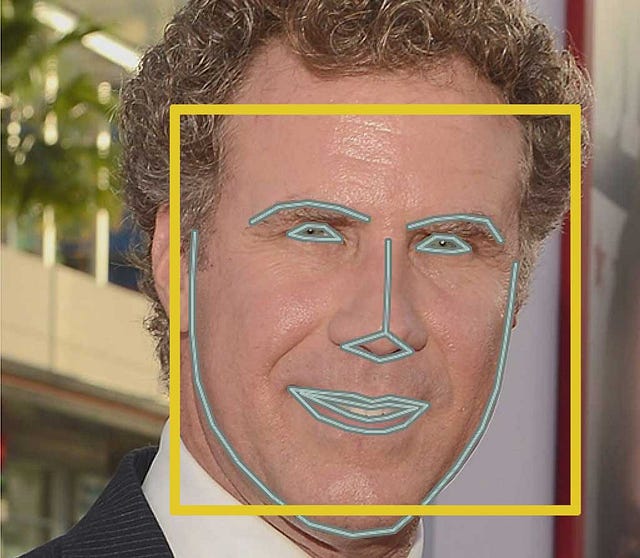

Pic: “Machine Learning is Fun!” by Adam Geitgey

Now, how does deep learning solve the same problem? The process is nearly the same, but remember, this student is smarter. So, instead of spoon-feeding it standard facial features, you let the model creatively figure out what to notice. It may decide that the most striking feature of human faces is the curvature of the left cheek, or how flat the forehead is. Or perhaps something even subtler.

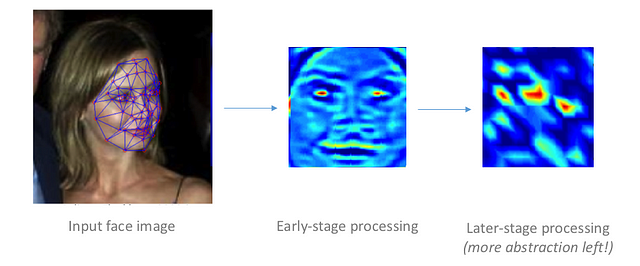

Facial features identified by some of the interim layers of the DeepFace DL architecture

It silently figures out this connection between the input (face) and the output (name) when shown tons of such pairs. Then, when presented with a new face, voilà, it gets it right, as if by magic. Compared to earlier recognition techniques, DL hits the ball way out of the park in both accuracy and speed.

Icons by hunotika, MGalloway(WMF), Google [CC BY 3.0] via Wikimedia Commons

But, why do they always show pictures of the human brain?

To be fair, there is a connection.

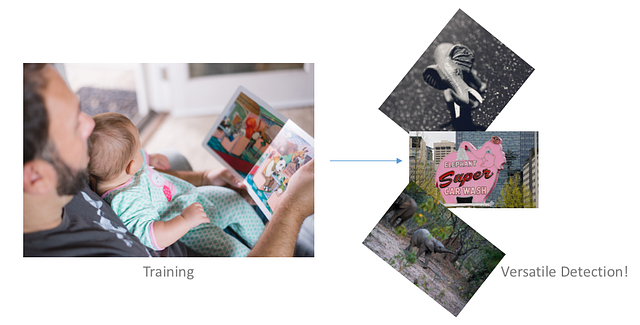

Let’s review how a child learns her first lessons. You show her flash cards with the picture of an elephant and read the word aloud. After a few such instances, when the baby looks at any semblance of an elephant, she identifies it instantly, irrespective of pose, color or context. We didn’t teach her about the trunk, the tusks or the shape of the ears, but she learnt it in totality. She just gets it.

Photos by Picsea, Anita Jankovic, Anna Stoffel, Chris Rhoads on Unsplash

Just as we are unsure how the baby learnt what makes up an elephant, we really don’t know how neural networks, the technology behind deep learning, figure this out. This is where all the comparisons to the human brain and its neural connections come from, but I’ll stop here and save you the hassle.

It suffices to know that deep learning is insanely good at automatically identifying the most distinguishing signals (features) in any given data (say, a face). In other words, it is a master at feature extraction. When given tons of input-output pairs, it identifies what to learn and how to learn it.

Deep learning figures out the strongest pattern in any presented entity — a face, voice or even a table of numbers.

Is this such a big deal for machine learning?

Yes, it’s huge.

In spite of the stellar advances in machine learning, the biggest challenge facing the discipline has been… you guessed it, feature extraction. Data scientists spend sleepless nights discovering connections between an input (a hundred factors of customer behavior) and an output (customer churn), and hand-crafting features that capture them. Only then do the machines conveniently learn from those features.

So, the difference between top accuracy and poor results often comes down to identifying the best features. Now, if deep learning lets machines do this heavy lifting automatically as well, wouldn’t that be neat?

What use does a pattern identification machine have for business?

Plenty.

Deep learning can be applied anywhere machine learning fits. It can comfortably tackle problems with structured data, an area where traditional algorithms reign supreme. Based on what we’ve seen, it can shorten learning cycles and push accuracy to dizzying levels.

But the biggest bang for the buck is in areas where ML has struggled to get off the ground: images, video, audio, or extracting deeper meaning from plain old text. Deep learning has crushed problems with such data types that need machines to identify, classify or predict. Let’s look at a few.

- Advanced face recognition technology is seeing early real-world applications, and image quality and exposure are no longer major constraints.

- It has made possible not just the detection of animal species, but also the identification of individual animals, down to naming every whale shark in the ocean. Say hello to Willy, the humpback whale!

- Speech recognition error rates have dropped by over 30% since DL took over. And about 2 years ago, machines beat humans in this space.

- DL has endowed machines with artistic abilities, making interesting applications such as image synthesis and style transfer possible.

- Thanks to DL, it is possible to extract deeper meaning from text, and there are initial attempts to tackle the rankling challenge of fake news.