In September 2012, Alex Krizhevsky and Ilya Sutskever, two AI researchers from the University of Toronto, made history at ImageNet, a popular competition in which participants develop software that can recognize objects in a large database of digital images. Krizhevsky and Sutskever, and their mentor, AI pioneer Geoffrey Hinton, submitted an algorithm that was based on deep learning and neural networks, an artificial intelligence technique that the AI community viewed with skepticism because of its past shortcomings.

AlexNet, the deep learning algorithm developed by the U of T researchers, won the competition with an error rate of 15.3 percent, a whopping 10.8 percentage points lower than the runner-up. By some accounts, the event triggered the deep learning revolution, sparking interest in the field among many academic and commercial organizations.

Today, deep learning has become pivotal to many of the applications we use every day such as content recommendation systems, translation apps, digital assistants, chatbots and facial recognition systems. Deep learning has also helped create advances in many special domains such as healthcare, education, and self-driving cars.

The fame of deep learning has also led to confusion and ambiguity over what it is and what it can do. Here’s a brief breakdown of what deep learning and neural networks are, what they can (and can’t) do, and what their strengths and limits are.

A primer on machine learning

Deep learning is a subset of machine learning, a field of AI that changes the way software behavior is developed. Classical approaches to creating AI software, also known as “good old-fashioned AI” (GOFAI), involve programmers manually coding the rules that define the behavior of an application.

GOFAI works well in domains where the rules are clear cut and can be translated into program-flow commands such as if…else commands. But rule-based systems struggle in fields such as computer vision, where software has to make sense of the content of photos and video taken from different angles and under different lighting conditions.
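To make that concrete, here is a minimal sketch of what hand-coded rules look like in Python. The `approve_loan` function, its thresholds, and the loan scenario are all hypothetical, chosen only to illustrate the if…else style.

```python
def approve_loan(income: float, debt: float, missed_payments: int) -> bool:
    """Rule-based decision: every branch below was written by a programmer."""
    if missed_payments > 2:
        return False
    if income <= 0:
        return False
    debt_ratio = debt / income
    if debt_ratio > 0.4:  # hypothetical cutoff
        return False
    return True


print(approve_loan(income=50_000, debt=10_000, missed_payments=0))  # True
print(approve_loan(income=50_000, debt=30_000, missed_payments=0))  # False
```

Rules like these are easy to write when the inputs are a handful of clean numbers. It is much harder to write an equivalent set of if…else checks over the millions of raw pixels in a photo.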

Machine learning algorithms use different mathematical and statistical models to analyze large sets of data and find useful patterns and correlations. Machine learning then uses the gained knowledge to make predictions or define the behavior of an application.

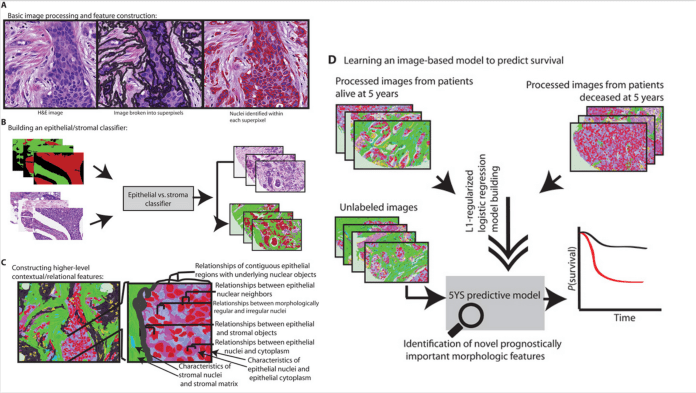

Machine learning has been in use for decades, but its capabilities were limited in some areas, and it still involved a lot of labor-intensive manual design. For instance, in computer vision, developers had to do a lot of “feature engineering” to enable the algorithm to extract different features from images, and then apply a statistical model such as logistic regression or a support vector machine (SVM). The process was very time consuming and required the involvement of several AI engineers and domain experts.

Classic machine learning approaches involved lots of complicated steps and required the collaboration of dozens of domain experts, mathematicians, and programmers
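The feature-engineering workflow described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: the 3x3 "images", the two hand-picked features, and all function names are hypothetical, and the logistic regression is a bare-bones version written from scratch rather than any library's implementation.

```python
import math


def extract_features(image):
    """Hand-engineered features: most lit pixels in any column, and in any row."""
    row_sums = [sum(row) for row in image]
    col_sums = [sum(col) for col in zip(*image)]
    return [max(col_sums), max(row_sums)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(X, y, lr=0.1, epochs=200):
    """Batch gradient descent on the logistic (cross-entropy) loss."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
            grad_b += err
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / len(X)
    return w, b


def predict(image, w, b):
    x = extract_features(image)
    z = sum(wj * xj for wj, xj in zip(w, x)) + b
    return 1 if sigmoid(z) >= 0.5 else 0


# Toy 3x3 images: label 1 = vertical line, label 0 = horizontal line.
vertical = [[[1 if c == k else 0 for c in range(3)] for _ in range(3)] for k in range(3)]
horizontal = [[[1 if r == k else 0 for _ in range(3)] for r in range(3)] for k in range(3)]
images = vertical + horizontal
labels = [1, 1, 1, 0, 0, 0]

X = [extract_features(img) for img in images]
w, b = train_logistic_regression(X, labels)

# A vertical line with one extra "noise" pixel, not seen during training.
noisy_vertical = [[1, 1, 0], [0, 1, 0], [0, 1, 0]]
print(predict(noisy_vertical, w, b))  # 1
```

Notice that the statistical model never sees the pixels. It only sees the two numbers a human decided were important, which is exactly the manual step that becomes expensive on real images.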

How do neural networks and deep learning algorithms work?

Deep learning differs from other machine learning and AI techniques in that it involves very little manual design. Deep learning uses neural networks, a structure that AI researcher Jeremy Howard defines as an “infinitely flexible function” that can solve most machine learning problems without going through the domain-specific feature engineering that you previously had to perform.

When you provide a neural network with a set of examples, say images of people, it can find the common features between those images. When you stack several layers of a neural network on top of each other, the network can go from finding simple features, such as edges and contours, to more complicated features, such as eyes, noses, ears, faces and bodies.

Layered neural networks can extract different features from images in a hierarchical way (source: www.deeplearningbook.org)

When creating deep learning algorithms, developers and engineers configure the number of layers and the type of functions that connect the outputs of each layer to the inputs of the next. Next, they train the model by providing it with lots of annotated examples. For instance, you provide a deep learning algorithm with thousands of images and labels that correspond to the content of each image.

The algorithm will run those examples through its layered neural network (the many layers are why it’s called “deep” learning) and adjust the weights (the tunable parameters) of the neurons in each layer of the neural network to be able to detect the common patterns that define images with similar labels.

With enough training, a neural network becomes fine-tuned and will be able to classify unlabeled images based on the knowledge it has gained from the examples.
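The training loop described above can be sketched with a toy network written in plain Python. Everything here is an assumption made for illustration: the four-pixel "images", the bright/dark labels, the layer sizes, and the learning rate. Real deep learning models have many more layers and are built with dedicated libraries.

```python
import math
import random

random.seed(0)  # fixed seed so the sketch is repeatable


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Toy "images" of four pixel intensities each. Label 1 = bright, 0 = dark.
images = [
    [0.0, 0.0, 0.0, 0.0], [0.1, 0.0, 0.2, 0.0], [0.0, 0.2, 0.1, 0.1],
    [1.0, 1.0, 1.0, 1.0], [0.9, 1.0, 0.8, 1.0], [1.0, 0.8, 0.9, 0.9],
]
labels = [0, 0, 0, 1, 1, 1]

n_in, n_hidden = 4, 3
# Weights start out random; training is what gives them meaning.
W1 = [[random.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
b1 = [0.0] * n_hidden
W2 = [random.uniform(-1, 1) for _ in range(n_hidden)]
b2 = 0.0


def forward(x):
    """Run one example through the hidden layer, then the output neuron."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, b1)]
    output = sigmoid(sum(w * h for w, h in zip(W2, hidden)) + b2)
    return hidden, output


learning_rate = 0.5
for epoch in range(2000):
    for x, y in zip(images, labels):
        hidden, output = forward(x)
        # Backpropagation for a sigmoid output with cross-entropy loss.
        delta_out = output - y
        delta_hidden = [delta_out * W2[j] * hidden[j] * (1 - hidden[j])
                        for j in range(n_hidden)]
        # Nudge every weight a little in the direction that reduces the error.
        for j in range(n_hidden):
            W2[j] -= learning_rate * delta_out * hidden[j]
            for i in range(n_in):
                W1[j][i] -= learning_rate * delta_hidden[j] * x[i]
            b1[j] -= learning_rate * delta_hidden[j]
        b2 -= learning_rate * delta_out


def classify(x):
    return 1 if forward(x)[1] >= 0.5 else 0


# Two examples the network has never seen.
print(classify([0.8, 0.9, 1.0, 0.7]))
print(classify([0.2, 0.1, 0.0, 0.1]))
```

Nobody told the network to add up the pixels or compare them to a threshold. It arrived at a working rule on its own by repeatedly adjusting its weights against the labeled examples.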

Finding quality training data is one of the main challenges of deep learning algorithms. Fortunately, there are many publicly available datasets that deep learning engineers can choose from.

An example is the ImageNet database, which contains more than 14 million images labeled into more than 20,000 categories. ImageNet is one of the de facto standards for training and testing computer vision algorithms. Other datasets include CIFAR, another general computer-vision dataset, and MNIST, a specialized database of tens of thousands of hand-written digits.

Supervised, unsupervised and reinforcement learning

The process described above is called “supervised learning” and it’s currently the main way deep learning algorithms are developed. It’s called supervised because the AI model is given a full set of problems (e.g. images) and their solutions (e.g. their associated labels or descriptions) and is instructed to find the correct mappings between the inputs and outputs. Supervised learning is used in domains such as computer vision and speech recognition.

Unsupervised learning, another breed of deep learning models, is used to solve problems where you have a bunch of data but you don’t have a corresponding output to map them to. In this case, the deep learning algorithm has to peruse the training data and find useful patterns that would have otherwise required a lot of human effort.

For instance, a deep learning algorithm can go through 10 years’ worth of sales data and give you sales predictions or suggestions on how to adjust the prices of your goods to maximize sales. These are called predictive and prescriptive analytics and are useful in many domains such as weather forecasting and content recommendation.
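As a small illustration of finding patterns in data that has no labels, here is a sketch of k-means clustering. Note that k-means is a classic unsupervised technique, not a deep learning model; it is used here only because it fits in a few lines. The purchase amounts and the `k_means_1d` helper are hypothetical.

```python
def k_means_1d(values, k=2, iterations=10):
    """Group numbers into k clusters without being told what the groups are."""
    if k < 2:
        raise ValueError("this sketch assumes k >= 2")
    # Assumption: start with centroids spread evenly between min and max.
    lo, hi = min(values), max(values)
    centroids = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        # Step 1: assign each value to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda c: abs(v - centroids[c]))
            clusters[nearest].append(v)
        # Step 2: move each centroid to the mean of its assigned values.
        centroids = [sum(c) / len(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return centroids, clusters


# Made-up purchase amounts with no labels attached.
purchases = [12, 15, 14, 10, 95, 102, 98, 110]
centroids, clusters = k_means_1d(purchases)
print(centroids)  # [12.75, 101.25]
print(clusters)   # [[12, 15, 14, 10], [95, 102, 98, 110]]
```

The algorithm was never told that there are "small" and "large" purchases. It discovered the two groups from the data alone.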

Reinforcement learning, the other type of deep learning model training, is considered by many as the “holy grail of artificial intelligence” (that is, based on what we know right now). In reinforcement learning, an AI model is provided with the basic rules of a problem domain and is left to develop its behavior on its own without supervision or data from humans. The deep learning model uses trial and error to gradually learn how to master the domain.
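Here is a minimal sketch of the trial-and-error idea, using tabular Q-learning on a tiny corridor. Several things are assumptions made for brevity: the five-cell environment, the reward of 1 at the goal, the purely random exploration, and the use of a lookup table where a deep reinforcement learning system would use a neural network.

```python
import random

random.seed(0)  # fixed seed so the sketch is repeatable

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # index 0 = step left, index 1 = step right
ALPHA, GAMMA = 0.5, 0.9  # learning rate and discount factor


def step(state, action):
    """Environment rules: move, stay inside the corridor, reward 1 at the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


# Q[state][action_index] estimates long-term reward; it starts at zero.
Q = [[0.0, 0.0] for _ in range(N_STATES)]

for episode in range(500):
    state = 0
    while state != N_STATES - 1:
        a = random.randrange(2)  # trial and error: pick a random move
        next_state, reward = step(state, ACTIONS[a])
        # Q-learning update: blend in the reward plus the best future estimate.
        target = reward + GAMMA * max(Q[next_state])
        Q[state][a] += ALPHA * (target - Q[state][a])
        state = next_state

# The learned behavior: in each cell, take the action with the higher estimate.
policy = ["left" if q[0] > q[1] else "right" for q in Q[:-1]]
print(policy)  # ['right', 'right', 'right', 'right']
```

The agent was only given the rules of the corridor and a reward signal. No one supplied examples of correct moves, yet it ends up preferring "right" in every cell.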

Reinforcement learning is one of the main methods used in developing AI models that have mastered famous games such as chess, Go, poker, and more recently, StarCraft II. Scientists have also used reinforcement learning to develop robot hands that teach themselves to handle objects, one of the toughest challenges of the AI industry. Although it is an exciting field of AI, reinforcement learning has distinct limits and is very demanding in terms of compute resources. While it has achieved interesting feats in labs, reinforcement learning has shown limited use in real-world applications.

Why is deep learning possible now?

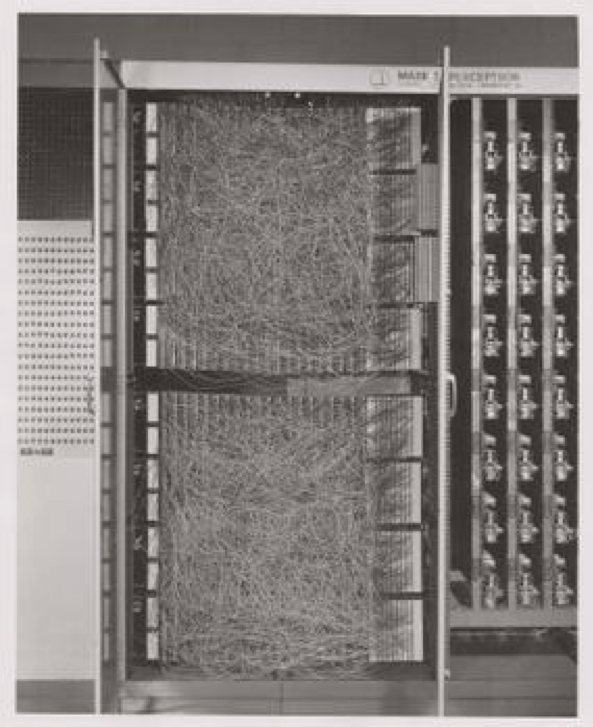

While deep learning became popular earlier this decade, it isn’t new. The concepts of neural networks date back to the 1950s, when the Mark I Perceptron, the first neural network, was developed.

Neural networks were also discussed in the 1980s and 1990s but were mostly dismissed because of their limited performance and their data and computing requirements. At the time, the data and compute resources required to create deep learning models weren’t available. Training a deep learning model required a lot of time. Other methods were more realistic in their demands and their results.

The Mark I Perceptron was the first implementation of neural networks in 1957 (Source: Wikipedia)

Presently, both data and computing have become widely available and inexpensive. There is a plethora of GPUs and specialized hardware that can help in training deep learning models at very fast rates. There are also cloud computing services, such as PaperSpace and Crestle, that are specialized for deep learning.

Applications of deep learning

Deep learning has currently found its way into many different fields. Below are some of the interesting applications of deep learning:

- Self-driving cars: In order to navigate streets without a driver, autonomous vehicles need to be able to make sense of their surroundings. Deep learning algorithms ingest video feeds from cameras installed around the cars and detect street signs, traffic lights, other cars and pedestrians. Deep learning is one of the main components of driverless cars (but not the only one).

- Facial recognition: Face recognition is currently used in many different domains, such as unlocking your iPhone, making payments, and finding criminals. Previous iterations of the technology required extensive manual effort and weren’t very reliable. With deep learning, a facial recognition system only needs to view several images of a person and it will be able to detect that person’s face in photos and video in real time and with decent accuracy. AI-based facial recognition is currently at the center of ethical debates because of its potentially sinister uses.

- Speech recognition and transcription: A well-trained deep learning model can transform a stream of audio into written text and is far more accurate than any previous transcription technologies. Deep learning enables smart speakers to parse voice commands given by their users. Beyond transcribing text, deep learning can also help tell the difference between the voices of different people and determine who is speaking.

- Machine translation: Prior to deep learning, automated translation systems were very limited in their quality and were very difficult to develop, requiring separate efforts for every language pair. In recent years, tech giants such as Google have been using deep learning to improve the quality of their machine translation systems. Deep learning’s understanding of human language is limited, but it can nonetheless perform remarkably well at simple translations.

- Medical imaging: Deep learning models can help doctors automate the process of analyzing x-ray and MRI scans and find symptoms and diagnose diseases. Deep learning will not replace radiologists, but it will surely help them become better at their job.

The limits of deep learning

Neural network created by pins and threads

Deep learning has solved many problems that were previously believed to be off-limits for computers. But the achievements of deep learning have led to many wrong interpretations and expectations of its capabilities. While being a very exciting technology, deep learning also has distinct limits.

In his in-depth paper, “Deep Learning: A Critical Appraisal,” Gary Marcus, the former head of AI at Uber and a professor at New York University, details the limits and challenges that deep learning faces, which can be summarized in the following points:

- Deep learning requires a lot of data: Unlike humans, who can learn concepts and make reliable decisions based on limited and incomplete data, deep learning models are often only as good as the quality and quantity of data they’re trained with. This poses a limit in areas where annotated data is not available.

- Deep learning models are shallow: Deep learning and neural networks are very limited in their capabilities to apply their knowledge in areas outside their training, and they can fail in spectacular and dangerous ways when used outside the narrow domain they’ve been trained for.

- Deep learning is opaque: Unlike other machine learning models, deep learning models involve very little top-down human design. They are also very complicated and involve thousands or even millions of parameters. This makes it hard to interpret their outputs and the reasoning behind their decisions. Neural networks are described as black boxes because of their opacity. The problem has given rise to a series of efforts and studies toward creating explainable AI.

Deep learning and neural networks are often compared with human intelligence. But while deep learning can perform some complicated tasks on par with or better than humans, it works in a way that is fundamentally different from the human mind. It is especially limited in commonsense and abstract decision-making.

The threats of deep learning

Deep learning is a very powerful tool. But like every other effective technology, it comes with its own tradeoffs.

Deep learning models are prone to algorithmic bias because they derive their behavior from their training data. This means that whatever hidden or overt biases are embedded in the training examples will also find their way into the decisions the deep learning algorithm makes.
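The mechanism is easy to see in a deliberately simplified sketch. The "model" below is just a frequency counter standing in for a neural network, and the hiring records are made up for illustration; neither comes from a real system.

```python
from collections import defaultdict

# Hypothetical, made-up past decisions: (experience level, group, hired?)
history = [
    ("senior", "A", 1), ("senior", "A", 1), ("senior", "A", 1), ("senior", "A", 0),
    ("senior", "B", 1), ("senior", "B", 0), ("senior", "B", 0), ("senior", "B", 0),
]


def fit(records):
    """'Train' by measuring how often each kind of applicant was hired."""
    counts = defaultdict(lambda: [0, 0])  # key -> [times hired, total seen]
    for level, group, hired in records:
        counts[(level, group)][0] += hired
        counts[(level, group)][1] += 1
    return {key: hired / total for key, (hired, total) in counts.items()}


model = fit(history)
print(model[("senior", "A")])  # 0.75
print(model[("senior", "B")])  # 0.25
```

Both applicants have the same experience level, but the model scores them differently because the historical decisions it learned from did. A neural network trained on the same records would have no way of knowing that the pattern is unfair.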

In the past few years, there have been several cases where deep learning models were found to discriminate against specific groups of people. For example, last October, Amazon had to shut down an AI recruiting tool because it was biased against female applicants.

In the wrong hands, deep learning can serve very evil purposes. As deep learning becomes increasingly efficient in creating natural-looking images and sounds, there’s fear that the technology could be used to create a new breed of AI-based forgery crimes. Last year, there was much controversy over FakeApp, a video application that used deep learning to swap the faces of people in videos. The app was used to put the faces of celebrities and politicians in pornographic videos.

Another threat of deep learning is adversarial attacks. Because of the way they’re created, deep learning algorithms can behave in unexpected ways—or at least in ways that would seem illogical to us humans. And given the opaque nature of neural networks, it’s hard to find all the logical errors they contain.

Experts and researchers have shown time and again that these failures can be turned into adversarial attacks where a malicious actor forces a deep learning algorithm to manifest dangerous behavior. For instance, researchers were able to fool the vision algorithms of a self-driving car by pasting a few color stickers on a stop sign. To a human, it would still look like a stop sign. But the driverless car would miss it altogether and possibly create a dangerous situation.
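The sticker attack itself is hard to reproduce in a few lines, so the sketch below uses a different and simpler technique to show the same idea: a small perturbation in the style of the fast gradient sign method, applied to a hypothetical linear classifier. The weights, the four-pixel input, and the `EPSILON` budget are all invented for illustration.

```python
# Hypothetical linear "stop sign detector": positive score means stop sign.
WEIGHTS = [1.0, -1.0, 1.0, -1.0]
BIAS = -0.4
EPSILON = 0.1  # maximum change allowed per pixel


def score(x):
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def predict(x):
    return 1 if score(x) > 0 else 0


def sign(v):
    return (v > 0) - (v < 0)


def adversarial_example(x):
    """Shift every pixel by EPSILON in the direction that lowers the score.

    For a linear model the gradient of the score with respect to the input
    is simply the weight vector, so we step against the sign of each weight.
    """
    return [xi - EPSILON * sign(w) for xi, w in zip(x, WEIGHTS)]


image = [0.6, 0.4, 0.7, 0.3]
tampered = adversarial_example(image)

print(predict(image))     # 1
print(predict(tampered))  # 0
```

No pixel changed by more than 0.1, yet the prediction flipped. Attacks on real deep learning models follow the same logic but compute the gradient through all the layers of the network.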

Deep learning is just the beginning

Deep learning has come a long way since it was pushed into the limelight thanks to the efforts of Krizhevsky, Sutskever and Hinton, and the many others who have worked in the field.

But deep learning still isn’t the realization of artificial intelligence in the sense of creating synthetic beings that can think like humans. We don’t even know if artificial general intelligence will ever be created, and many experts believe that it’s a wasted goal.

However, as far as scientists are concerned, we still have to push forward to create better techniques and technologies to automate complicated decisions. In 2017, Hinton, the longtime pioneer and advocate of deep learning and neural networks, suggested that he would be ready to throw everything he has created away and start all over again.

“The future depends on some graduate student who is deeply suspicious of everything I have said,” Hinton said.