This is the first in a series of articles intended to make Machine Learning more approachable to those without technical training. I hope it is helpful.

Advancements in computer technology over the past decades have meant that the collection of electronic data has become more commonplace in most fields of human endeavor. Many organizations now find themselves holding large amounts of data spanning many prior years. This data can relate to people, financial transactions, biological information, and much, much more.

At the same time, data scientists have been developing iterative computer programs, called algorithms, that can analyze these large amounts of data and identify patterns and relationships that humans could not practically find unaided. Analyzing past phenomena can provide extremely valuable information about what to expect in the future from the same, or closely related, phenomena. In this sense, these algorithms can learn from the past and use this learning to make valuable predictions about the future.

While learning from data is not in itself a new concept, Machine Learning differentiates itself from other methods of learning by a capacity to deal with a much greater quantity of data, and a capacity to handle data that has limited structure. This allows Machine Learning to be successfully utilized on a wide array of topics that had previously been considered too complex for other learning methods.

Examples of Machine Learning

The following are some of the better-established uses of Machine Learning that you may have come across in your day-to-day life:

- Credit scoring: Financial institutions collect detailed information on their customers over time, such as income, assets, job, age and financial history. This data can be analyzed to identify which characteristics are more associated with negative outcomes such as defaulting on loans, or which drive positive outcomes such as timely loan repayment. Thus, a predictive relationship can be constructed which can classify customers based on their likelihood of default, and the financial institution can use this to make more efficient decisions on loans.

- Basket analysis: When a customer goes through a checkout in a grocery store or online, the information on the specific items purchased ends up in a large database. This database can be analyzed to determine typical purchasing behaviors or associations. For example, how likely is it that a customer who has bought a toothbrush will also buy toothpaste? In many cases personal customer data can also be collected, which helps to analyze how this behavior changes within certain demographic or income groups. Analyzing this data can inform marketing and advertising strategy and decision making. It can also lead to more personalized advertising, where a customer receives offers about products they are more likely to be interested in.

- Genetic Science: Members of the online DNA-testing service 23andme.com provide personal and health information together with a sample of their saliva for DNA analysis. These members are often sent questionnaires about their health and personal traits. The genetic codes of people who report similar health conditions or traits can be analyzed over a large number of individuals for frequently occurring strings or sectors. If such strings or sectors are discovered, they can be used to predict traits or possible medical issues that may lie ahead. This type of learning can also be used to identify biological relationships between members of the service, in some cases reuniting family members who have been separated through adoption or other circumstances.

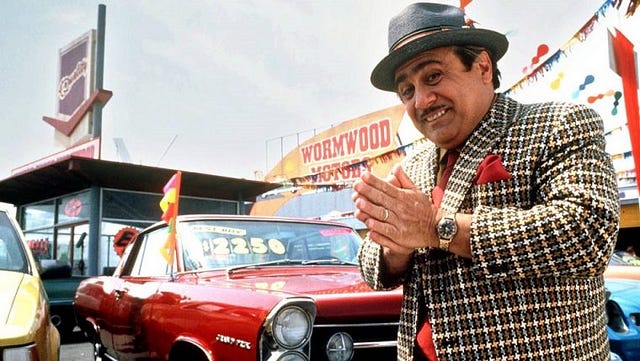

- Valuation: Data on car sales over a period of time can be analyzed to determine which characteristics of a car most affect its price, and how sensitive the price is to each of these characteristics. Based on this, online valuation tools are now available which can suggest a price range for a car based on information entered by the owner.

- Other common applications include medical diagnosis, handwriting-to-text conversion, speech recognition, face recognition, image compression, robotics and autonomous vehicles.
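To make the basket analysis example more concrete, here is a minimal Python sketch of the toothbrush and toothpaste question. The baskets are made-up data, and the function names `support` and `confidence` are simply illustrative labels for two standard measures used in association analysis. It is a toy illustration of the idea rather than how any particular retailer does it.

```python
# Toy transactions: each set is one hypothetical checkout basket.
baskets = [
    {"toothbrush", "toothpaste", "milk"},
    {"toothbrush", "toothpaste"},
    {"toothbrush", "bread"},
    {"milk", "bread"},
    {"toothpaste", "milk"},
]

def support(items, baskets):
    """Fraction of baskets that contain every item in `items`."""
    items = set(items)
    return sum(items <= basket for basket in baskets) / len(baskets)

def confidence(antecedent, consequent, baskets):
    """Estimated chance that a basket containing `antecedent` also contains `consequent`."""
    antecedent_support = support(antecedent, baskets)
    if antecedent_support == 0:
        return 0.0  # the antecedent never appears, so there is nothing to estimate
    both = support(set(antecedent) | set(consequent), baskets)
    return both / antecedent_support

toothbrush_to_paste = confidence({"toothbrush"}, {"toothpaste"}, baskets)
print(f"{toothbrush_to_paste:.2f}")
```

In this toy data, three baskets contain a toothbrush and two of those also contain toothpaste, so the estimate is two in three. A real database would hold vastly more baskets, which is what makes the estimates reliable.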

Types of Machine Learning

Machine Learning can be classified into three main categories:

- Supervised learning algorithms make use of a training set of input and output data. The algorithm learns a relationship between the input and output data from the training set and then uses this relationship to predict the output for new data. One of the most common supervised learning objectives is classification. Classification learning aims to use the learned information to predict membership of a certain class. The credit scoring example represents classification learning in that it predicts whether a customer belongs to the class of people likely to default on a loan.

- Unsupervised learning aims to make observations in data where there is no known outcome or result, through deducing underlying patterns and structure in the data. Association learning is one of the most common forms of unsupervised learning, where the algorithm searches for associations between input data. The shopping basket analysis example represents association learning.

- Reinforcement learning is a form of ‘trial and error’ learning where input data stimulates the algorithm into a response, and where the algorithm is ‘punished’ or ‘rewarded’ depending on whether the response was the desired one. Robotics and autonomous technology make great use of this form of learning.
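As a rough illustration of supervised classification, the sketch below learns from a tiny, invented credit-scoring training set and then predicts the outcome for new customers. The data, the two input variables and the function names `fit_centroids` and `predict` are all assumptions made for this example. The method shown (a nearest-centroid classifier) is just one very simple technique, chosen because it fits in a few lines.

```python
from math import dist
from statistics import mean

# Hypothetical training set: (income, existing debt), both in $1000s, with the known outcome.
training = [
    ((80, 5), "repaid"),
    ((65, 10), "repaid"),
    ((90, 20), "repaid"),
    ((30, 25), "defaulted"),
    ((25, 40), "defaulted"),
    ((40, 35), "defaulted"),
]

def fit_centroids(examples):
    """Learn one average point (centroid) per class from the labelled examples."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*points))
        for label, points in by_label.items()
    }

def predict(centroids, features):
    """Assign the class whose centroid is closest to the new example."""
    return min(centroids, key=lambda label: dist(centroids[label], features))

centroids = fit_centroids(training)
print(predict(centroids, (70, 8)))
print(predict(centroids, (28, 30)))
```

The training step corresponds to "learning a relationship" between inputs and outputs, and the prediction step applies that relationship to customers the algorithm has not seen before.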

What are the necessary conditions for successful Machine Learning?

Machine Learning and ‘Big Data’ have become more well-known and have generated a lot of press in recent years. As a result, many individuals and organizations are considering whether and how they might apply to their specific situation, and whether there is value to be gained from them.

However, building internal capabilities for successful Machine Learning (or making use of external expertise) can be costly. Before taking on this challenge, it is wise to assess whether the right conditions exist for the organization to have a chance of success. The main considerations here relate to data and to human insight.

There are three important data requirements for effective Machine Learning. Often, not all of these requirements can be satisfactorily met, and shortcomings in one can sometimes be offset by one or both of the others. These requirements are:

- Quantity: Machine Learning algorithms need a large number of examples in order to provide the most reliable results. Most training sets for supervised learning will involve thousands, or tens of thousands, of examples.

- Variability: Machine Learning aims to observe similarities and differences in data. If the data is too similar (or too random), the algorithm will not be able to learn effectively from it. In classification learning, for example, the number of examples of each class in the training data is critical to the chances of success.

- Dimensionality: Machine Learning problems often operate in multidimensional space, with each dimension associated with a certain input variable. The greater the amount of missing information in the data, the greater the amount of empty space which prevents learning. Therefore, the level of completeness of the data is an important factor in the success of the learning process.
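Two of these requirements can be checked quickly before any learning is attempted. The sketch below, using invented records and the hypothetical helper names `class_shares` and `missing_fraction`, measures how evenly the classes are represented and how much of the data is missing. What counts as "too unbalanced" or "too incomplete" depends on the problem, so no thresholds are assumed here.

```python
from collections import Counter

# Hypothetical customer records; None marks a missing value.
records = [
    {"income": 80, "age": 41, "outcome": "repaid"},
    {"income": None, "age": 35, "outcome": "repaid"},
    {"income": 30, "age": None, "outcome": "defaulted"},
    {"income": 65, "age": 52, "outcome": "repaid"},
]

def class_shares(records, target):
    """Share of examples belonging to each class of the target field."""
    counts = Counter(record[target] for record in records)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}

def missing_fraction(records, fields):
    """Fraction of input cells (record x field) that have no value."""
    cells = [record.get(field) for record in records for field in fields]
    return sum(value is None for value in cells) / len(cells)

shares = class_shares(records, "outcome")
gaps = missing_fraction(records, ["income", "age"])
print(shares)
print(gaps)
```

Here three quarters of the examples are repaid loans, and a quarter of the input cells are empty. On a real data set, numbers like these give an early indication of whether variability and completeness are likely to be a problem.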

Machine Learning can also be aided by high quality human insight. The permutations and combinations of analyses and scenarios that can be studied from a given set of data are often vast. The situation can be simplified by conversations with subject matter experts. Based on their knowledge of the situation, they can often highlight the aspects of the data that are most likely to provide insights. For example, a recruiting expert who has spent many years involved in and observing a company’s selection decisions can help to identify which data points are most likely to drive those decisions. Knowledge of underlying processes within an organization can also help the data scientist select the algorithm which best models that process and which, therefore, has the greatest chance of success.