There are so many new technologies bouncing around these days that it’s difficult to wrap one’s brain around all the terminology and abbreviations, so I thought it might be a good idea to provide some easy-to-read overviews.

In this column, we are going to briefly introduce the concepts of artificial intelligence (AI), artificial neural networks (ANNs), machine learning (ML), deep learning (DL), and deep neural networks (DNNs).

See also: What the FAQ are VR, MR, AR, DR, AV, and HR?

See also: What the FAQ are the IoT, IIoT, IoHT, and AIoT?

Early Musings on AI

Way back in the middle of the 1800s, the English polymath – inventor, philosopher, mathematician, and mechanical engineer – Charles Babbage (1791–1871) conceived the idea of a steam-powered mechanical computer that he dubbed the Analytical Engine.

Babbage worked on his Analytical Engine from around 1830 until he died, but sadly it was never completed. It is often said that Babbage was a hundred years ahead of his time and that the technology of the day was inadequate for the task. However, in his book, Engines of the Mind, Joel Shurkin refuted this, stating:

One of Babbage’s most serious flaws was his inability to stop tinkering. No sooner would he send a drawing to the machine shop than he would find a better way to perform the task and would order work stopped until he had finished pursuing the new line. By and large this flaw kept Babbage from ever finishing anything.

Working with Babbage was Augusta Ada Lovelace (1815–1852), the daughter of the English poet Lord Byron. Ada, who was a splendid mathematician and one of the few people who fully understood Babbage’s vision, created a program for the Analytical Engine. Had the machine ever actually worked, this program would have been able to compute the mathematical sequence known as Bernoulli numbers.

Based on this work, Ada is now credited as being the first computer programmer and, in 1979, a modern programming language was named Ada in her honor. (In their spare time, Babbage and Ada also attempted to create a system for predicting the winners of horse races, but it is said that they lost a lot of money!)

Of more interest to us here is the fact that Ada mused on the possibilities of something akin to AI. Babbage thought of his engine only as a means for performing mathematical calculations. He hated the tedium associated with performing calculations by hand and, as far back as 1812, he is reported as saying, “I wish to God these calculations had been performed by steam.” By comparison, Ada wrote notes about the possibilities of computers one day using numbers as symbols to represent other things like musical notes, and she went so far as to speculate about machines “having the ability to compose elaborate and scientific pieces of music of any degree of complexity or extent.”

Around 100 years after Ada’s ponderings, the English mathematician, computer scientist, logician, cryptanalyst, philosopher, and theoretical biologist, Alan Mathison Turing (1912–1954), was hard at work at Bletchley Park during WWII leading the effort to crack the German Enigma and Lorenz ciphers. After the war, in his seminal paper, Computing Machinery and Intelligence (1950), Turing considered the question, “Can machines think?”

I fear Turing’s cogitations and ruminations didn’t exactly cheer him up, because during a lecture the following year he said:

“It seems probable that once the machine thinking method had started, it would not take long to outstrip our feeble powers… They would be able to converse with each other to sharpen their wits. At some stage therefore, we should have to expect the machines to take control.”

As part of his Computing Machinery and Intelligence paper, Turing suggested a game called the “Imitation Game” (hence the highly recommended 2014 movie of the same name). This game, which eventually became known as the Turing test, is a method of inquiry — based on a computer’s ability to communicate indistinguishably from a human — for determining whether or not a computer is capable of thinking like a human being.

Unfortunately, in June 2012, at an event marking what would have been Alan Turing’s 100th birthday, a chatbot called Eugene Goostman successfully convinced 29% of its judges that it was human. This prompted Jordan Pearson to pen a column, Forget Turing, the Lovelace Test Has a Better Shot at Spotting AI.

In Pearson’s column, we are introduced to the Lovelace test, which was designed by a team of computer scientists in the early 2000s, and which is based on Ada’s argument that, “Until a machine can originate an idea that it wasn’t designed to, it can’t be considered intelligent in the same way humans are.”

The Dartmouth Workshop and Beyond

The Dartmouth Workshop — more formally, the Dartmouth Summer Research Project on Artificial Intelligence — took place in 1956. This gathering is now considered to be the founding event of the field of artificial intelligence as we know and love it today.

Having said this, AI stayed largely in the realm of academia until circa the 2010s (pronounced “twenty-tens”), at which point a combination of algorithmic developments and advances in processing technologies caused AI to explode onto the scene with gusto, abandon, a fanfare of trumpets (possibly trombones), and, of course, a cornucopia of abbreviations.

Artificial Intelligence (AI): In computer science, artificial intelligence (AI), sometimes called machine intelligence, is intelligence demonstrated by machines, in contrast to the natural intelligence displayed by humans. AI can refer to any device that perceives its environment and takes actions that maximize its chance of successfully achieving its goals. A more elaborate definition characterizes AI as a system’s ability to correctly interpret external data, to learn from such data, and to use those learnings to achieve specific goals and tasks through flexible adaptation.

As part of all this, it’s become common to talk about “cognitive systems,” which refers to systems that are capable of learning from their interactions with data and humans and modifying their behavior accordingly — essentially continuously reprogramming themselves. (It is believed that the majority of embedded systems will have cognitive capabilities in the not-so-distant future; see also What the FAQ is an Embedded System?).

Artificial Neural Networks (ANNs): These are computing systems that are inspired by, but not identical to, the biological neural networks that constitute animal brains. An ANN is based on a collection of connected units or nodes called artificial neurons (ANs), which loosely model the neurons in a biological brain. Each connection, like the synapses in a biological brain, can transmit a signal to other neurons. When an artificial neuron receives one or more signals, it processes them and decides whether or not to signal any neurons connected to it.
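To make the idea of an artificial neuron a little more concrete, here is a minimal sketch in Python. It assumes one common formulation (a weighted sum of the incoming signals plus a bias, squashed by a sigmoid activation and compared against a threshold); the function names, the weights, and the 0.5 threshold are all made up for illustration, and real ANs come in many other flavors.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron_output(inputs, weights, bias):
    """Weighted sum of the incoming signals, passed through the activation."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(total)


def neuron_fires(inputs, weights, bias, threshold=0.5):
    """Decide whether to signal the neurons connected downstream.

    The threshold is an illustrative assumption, not a universal rule.
    """
    return neuron_output(inputs, weights, bias) >= threshold


# Three incoming signals with hypothetical connection strengths.
signals = [1.0, 0.0, 1.0]
weights = [0.8, -0.4, 0.3]
print(neuron_fires(signals, weights, bias=-0.5))
```

The weights play the role of the synapses: a large positive weight means a strong excitatory connection, while a negative weight pushes the neuron toward staying quiet.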

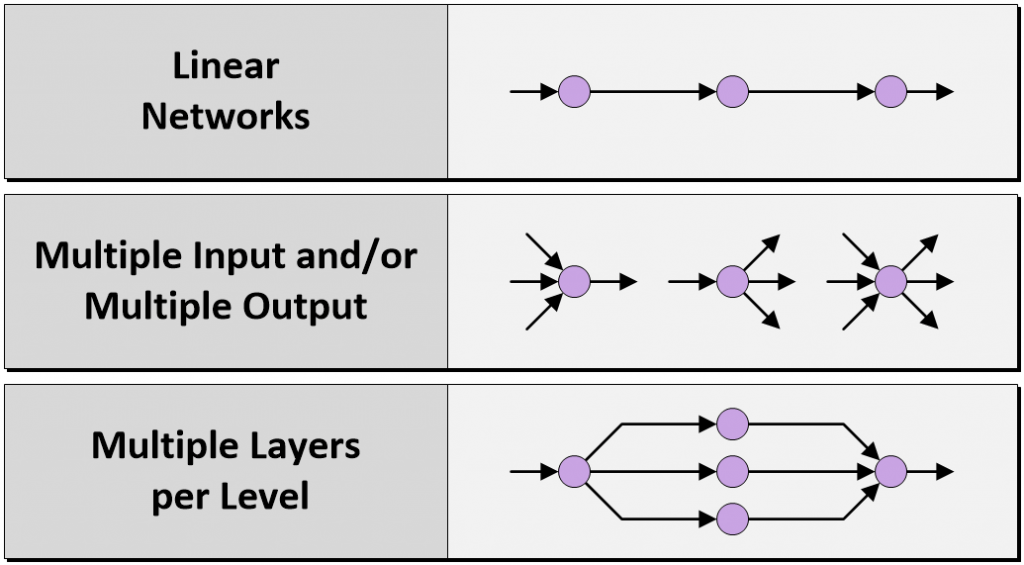

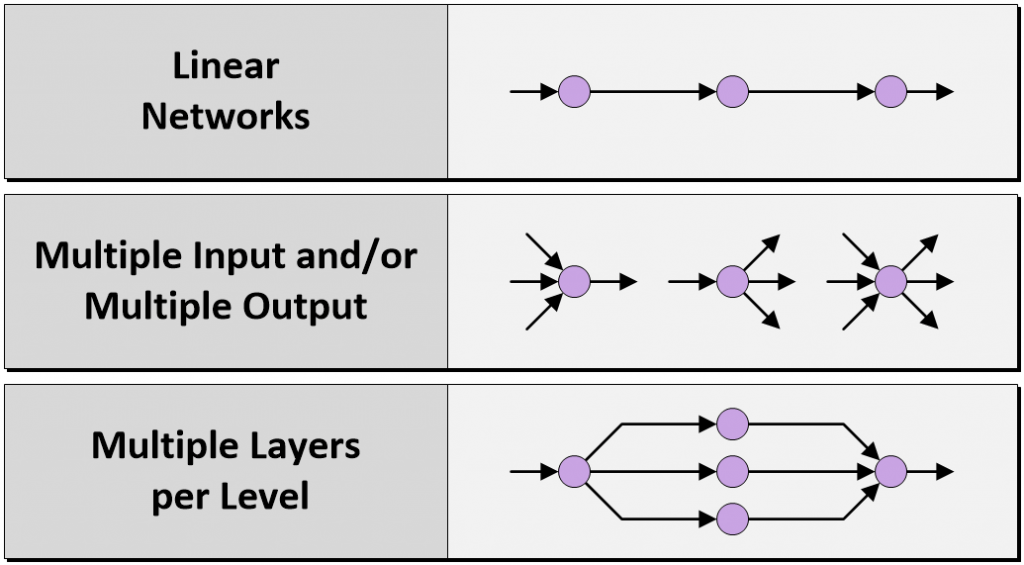

A common form of ANN is formed from layers of ANs, with the outputs from each layer feeding the inputs to the next layer. Early ANNs were limited to only a few layers, each containing only a handful of ANs, but today’s ANNs can have hundreds or thousands of layers, each comprising thousands of ANs. Furthermore, early systems supported only linear networks with a single layer per level, while today’s networks can support multiple inputs and/or multiple outputs along with multiple layers per level.
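The layered arrangement can be sketched in a few lines of Python. This is only a toy, assuming fully connected layers and a ReLU activation (neither of which the description above requires), with hand-picked weights that are purely hypothetical.

```python
def relu(x):
    """A popular activation: pass positive values, clamp negatives to zero."""
    return max(0.0, x)


def layer_forward(inputs, weights, biases):
    """Compute one layer; each row of `weights` belongs to one neuron."""
    return [
        relu(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]


def network_forward(inputs, layers):
    """Feed the outputs of each layer into the inputs of the next."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# A tiny two-layer network with made-up weights.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),  # 2 inputs -> 2 neurons
    ([[1.0, 1.0]], [-0.5]),                   # 2 neurons -> 1 output
]
print(network_forward([2.0, 1.0], layers))  # prints [2.0]
```

Adding more entries to `layers` makes the network deeper; adding more rows to a layer's weights makes that layer wider.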

Such systems “learn” to perform tasks by considering examples, generally without being programmed with task-specific rules. In the case of image recognition, for example, they might learn to identify images that contain flowers by analyzing example images that have been labeled by humans as “flower” (augmented with the type of flower) or “no flower,” after which they can use the results to identify flowers in other images.
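Learning from labeled examples can be illustrated with a single artificial neuron trained using the classic perceptron rule. This is a deliberately tiny stand-in for a real image recognizer: the two numeric "features" per example are invented for illustration (imagine scores for petal-like shapes and colorfulness), and real systems learn from raw pixels using far larger networks and different training algorithms.

```python
def predict(features, weights, bias):
    """Return 1 ("flower") or 0 ("no flower")."""
    total = sum(x * w for x, w in zip(features, weights)) + bias
    return 1 if total > 0 else 0


def train(examples, epochs=20, learning_rate=0.1):
    """Nudge the weights whenever a labeled example is misclassified."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(features, weights, bias)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


# Hypothetical human-labeled training data: (features, label).
labeled = [
    ([0.9, 0.8], 1),  # flower
    ([0.8, 0.9], 1),  # flower
    ([0.1, 0.2], 0),  # no flower
    ([0.2, 0.1], 0),  # no flower
]
weights, bias = train(labeled)

# Examples the neuron has never seen before.
print(predict([0.85, 0.75], weights, bias))  # prints 1
print(predict([0.15, 0.05], weights, bias))  # prints 0
```

Note that nobody wrote a rule saying what makes a flower; the weights were adjusted purely in response to the labeled examples.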

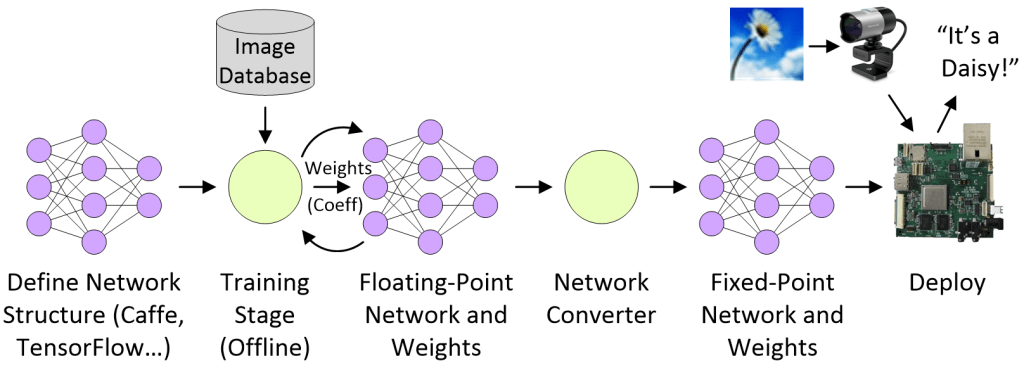

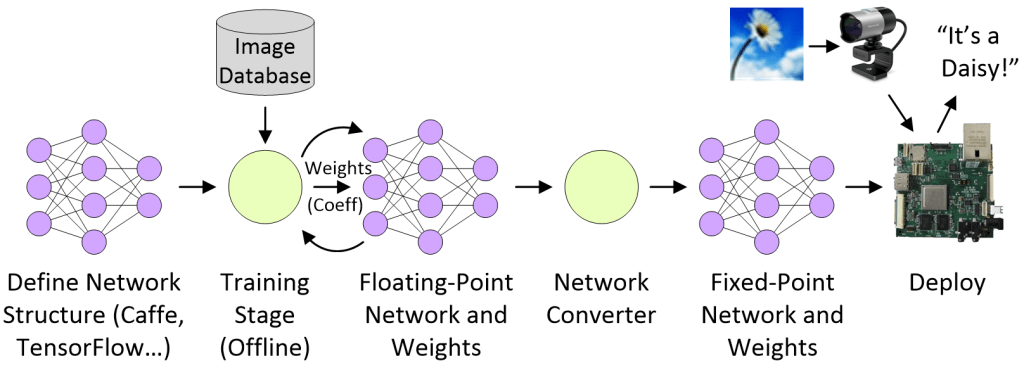

A high-level overview of such a system is as follows. We start by defining the network architecture using a framework like Caffe or TensorFlow. We then train our network using hundreds of thousands, or millions, of tagged images. At this stage, the network is based on floating-point calculations and weights (coefficients). Next, we use a network converter to translate the network into a smaller, “lighter” fixed-point representation in a form suitable for deployment on a physical system built around a central processing unit (CPU), a graphics processing unit (GPU), a field-programmable gate array (FPGA), a System-on-Chip (SoC), or, more recently, an integrated circuit (“silicon chip”) that is custom designed to run AI applications. Finally, we use a camera to feed new images to the deployed inference engine in the hope that it will correctly identify the contents of these images.
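The floating-point to fixed-point step is worth a quick illustration. The sketch below assumes one simple scheme (symmetric quantization, in which all of the weights share a single scale factor and are stored as signed 8-bit integers). It is not the method used by any particular network converter, which will typically do a lot more work, and the function names are hypothetical.

```python
def quantize(weights, bits=8):
    """Map floating-point weights onto signed integers sharing one scale."""
    max_int = 2 ** (bits - 1) - 1  # 127 for 8 bits
    largest = max(abs(w) for w in weights)
    scale = largest / max_int if largest else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q_weights, scale):
    """Recover approximate floating-point values from the integers."""
    return [q * scale for q in q_weights]


# Made-up trained weights.
float_weights = [0.5, -1.0, 0.25, 0.0]
q_weights, scale = quantize(float_weights)
restored = dequantize(q_weights, scale)

print(q_weights)
print(max(abs(r - w) for r, w in zip(restored, float_weights)))
```

Each weight now fits in a single byte rather than four, at the cost of a rounding error that is at most half of one scale step. This trade of a little accuracy for a lot less memory and arithmetic is what makes the deployed network "lighter."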

Deep Neural Networks (DNNs): A deep neural network (DNN) is an artificial neural network (ANN) with many layers of artificial neurons between the input and output layers. A convolutional neural network (CNN) is one form of DNN, and CNNs are currently the method of choice for processing visual and other two-dimensional data.
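The operation at the heart of a CNN is sliding a small grid of weights (a kernel) across two-dimensional data. Here is a minimal sketch, assuming no padding and a stride of one; the toy image and the hand-written edge-detecting kernel are made up for illustration, whereas a real CNN learns its kernel values during training.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1).

    As in most CNN frameworks, the kernel is applied without flipping.
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            acc = 0
            for i in range(k_rows):
                for j in range(k_cols):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        output.append(row)
    return output


# A tiny image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# A kernel that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
print(convolve2d(image, vertical_edge))  # prints [[0, 2, 0], [0, 2, 0]]
```

The output (often called a feature map) lights up only where the edge is, which hints at why this approach suits visual data: the same small set of weights detects a feature wherever it appears in the image.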

DNNs are typically feedforward networks in which data flows from the input layer to the output layer without looping back. By comparison, recurrent neural networks (RNNs), in which connections can loop back so that the result from one step feeds into the next, are better suited for applications such as language modeling.
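The difference is easiest to see in code. The following sketch assumes the simplest possible recurrent cell (a single neuron with a tanh activation whose previous output is fed back in alongside each new input); the weight values are arbitrary and the function names are hypothetical.

```python
import math


def rnn_step(x, hidden, w_in, w_rec, bias):
    """The new state depends on the current input AND the previous state."""
    return math.tanh(w_in * x + w_rec * hidden + bias)


def run_rnn(sequence, w_in=0.5, w_rec=0.8, bias=0.0):
    """Process a sequence one item at a time, carrying the state forward."""
    hidden = 0.0
    states = []
    for x in sequence:
        hidden = rnn_step(x, hidden, w_in, w_rec, bias)
        states.append(hidden)
    return states


# A single "blip" followed by silence.
print(run_rnn([1.0, 0.0, 0.0]))
```

Even though the second and third inputs are zero, the corresponding states are not, because the network "remembers" the earlier input. This sensitivity to what came before, and in what order, is the property that makes recurrent networks attractive for sequences such as words in a sentence.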

Machine Learning (ML) and Deep Learning (DL): Machine learning (ML) is the scientific study of algorithms and statistical models that computer systems use to perform a specific task without using explicit instructions, relying instead on patterns and inference. Machine learning is regarded as being a subset of artificial intelligence. Machine learning algorithms build a mathematical model based on sample data, known as “training data,” in order to make predictions or decisions without being explicitly programmed to perform the task.
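As a bare-bones illustration of building a mathematical model from training data, the sketch below fits a straight line using ordinary least squares and then uses that line to make a prediction. This is about the simplest model one could pick and is chosen only for illustration; the data is made up, and the function names are hypothetical.

```python
def fit_line(xs, ys):
    """Find the slope and intercept that best fit the training data."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up training data.
training_x = [1.0, 2.0, 3.0, 4.0]
training_y = [3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(training_x, training_y)


def predict(x):
    """Use the learned model on an input it was never shown."""
    return slope * x + intercept


print(slope, intercept)  # prints 2.0 1.0
print(predict(10.0))     # prints 21.0
```

At no point did we tell the program that the rule was "double it and add one"; it recovered that pattern from the sample data, which is the essence of the definition above.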

In the same way that machine learning is seen as a subset of artificial intelligence, deep learning (DL) is regarded as a subset of machine learning. Deep learning can be supervised, semi-supervised, or unsupervised. Deep learning architectures such as DNNs, CNNs, and RNNs have been applied to fields including computer vision, speech recognition, natural language processing, audio recognition, social network filtering, machine translation, bioinformatics, drug design, medical image analysis, material inspection, and board game programs, where they have produced results comparable to — in some cases superior to — human experts.