John McCarthy coined the term Artificial Intelligence in the 1950s, being one of the founding fathers of Artificial Intelligence along with Marvin Minsky. Also in 1958, Frank Rosenblatt built a prototype neuronal network, which he called the Perceptron. In addition, the key ideas of the Deep Learning neural networks for computer vision were already known in 1989; also the fundamental algorithms of Deep Learning for time series such as LSTM were already developed in 1997, to give some examples. So, why now this Artificial Intelligence boom?

Undoubtedly, the available computing has been the main trigger, as we have already presented in a previous post. However, other factors have contributed to unleashing the potential of Artificial Intelligence and related technologies. Next, we are going to talk about the most important factors that have influenced it.

The data, the fuel for Artificial Intelligence

Source: Júlia Torres — Barcelona

Artificial Intelligence requires large datasets for the training of its models but, fortunately, the creation and availability of data has grown exponentially thanks to the enormous decrease in cost and increased the reliability of data generation: digital photos, cheaper and precise sensors, etc. Furthermore, the improvements in storage hardware of recent years, associated with the spectacular advances in technology for its management with NoSQL[8]databases, have allowed having enormous datasets to train Artificial Intelligence models.

Beyond the increases in the availability of data that the advent of the Internet has led to recently, specialized data resources have catalyzed the progress of the area. Many open databases have supported the rapid development of Artificial Intelligence algorithms. An example is the ImageNet[9] database, of which we have already spoken, freely available with more than 10 million images tagged by hand. But what makes ImageNet special is not precisely its size, but the competition that was carried out annually with it, being an excellent way to motivate researchers and engineers.

While in the early years the proposals were based on traditional computer vision algorithms, in 2012 Alex Krizhevsky used a Deep Learning neural network, now known as AlexNet, which reduced the error rate to less than half of what the winner of the previous edition of the competition got. Already in 2015, the winning algorithm rivalled human capabilities, and today Deep Learning algorithms far exceed the error rates in this competition of those who have humans.

But ImageNet is only one of the available databases that have been used to train Deep Learning networks lately; many others have been popular, such as: MNIST[10], STL[11], COCO[12], Open Images[13], Visual Question Answering[14], SVHN[15], CIFAR-10/100[16], Fashion-MNIST[17], IMDB Reviews[18], Twenty Newsgroups[19], Reuters-21578[20], WordNet[21], Yelp Reviews[22], Wikipedia Corpus[23], Blog Authorship Corpus[24], Machine Translation of European Languages[25], Free Spoken Digit Dataset[26], Free Music Archive[27], Ballroom[28], The Million Song[29], LibriSpeech[30], VoxCeleb[31], The Boston Housing[32], Pascal[33] , CVPPP Plant Leaf Segmentation[34], Cityscapes[35].

It is also important to mention here Kaggle[36], a platform that hosts competitions of data analysis where companies and researchers contribute and share their data while data engineers from around the world compete to create the best prediction or classification models.

Entering into an era of computation democratization

Source: BSC-CNS

However, what happens if you do not have this computing capacity in your company? Artificial Intelligence has until now been mainly the toy of big technology companies like Amazon, Baidu, Google or Microsoft, as well as some new companies that had these capabilities. For many other businesses and parts of the economy, artificial intelligence systems have so far been too expensive and too difficult to fully implement the hardware and software required.

But now we are entering another era of democratization of computing, and companies can have access to large data processing centers of more than 28,000 square meters (four times the field of Barcelona football club (Barça)), with hundreds of thousands of servers inside. We are talking about Cloud Computing[37].

Cloud Computing has revolutionized the industry through the democratization of computing and has completely changed the way business operates. And now it is time to change the scenario of Artificial Intelligence and Deep Learning, offering a great opportunity for small and medium enterprises that cannot build this type of infrastructure, although Cloud Computing can offer it to them; in fact, it offers access to a computing capacity that previously was only available to large organizations or governments.

Besides, Cloud providers are now offering what is known as Artificial Intelligence algorithms as a Service (AI-as-a-Service), Artificial Intelligence services through Cloud that can be intertwined and work together with internal applications of companies through simple protocols based on API REST[38].

This implies that it is available to almost everyone, since it is a service that is only paid for the time used. This is disruptive, because right now it allows software developers to use and put virtually any artificial intelligence algorithm into production in a heartbeat.

Amazon, Microsoft, Google and IBM are leading this wave of AIaaS services, are put into production quickly from the initial stages (training). At the time of writing this book, Amazon AIaaS was available at two levels: predictive analytics with Amazon Machine Learning[39] and the SageMaker[40] tool for rapid model building and deployment. Microsoft offers its services through its Azure Machine Learning which can be divided into two main categories as well: Azure Machine Learning Studio[41] and Azure Intelligence Gallery[42]. Google offers Prediction API[43] and the Google ML Engine[44]. IBM offers AIaaS services through its Watson Analytics[45]. And let’s not forget solutions that already come from startups, like PredicSis[46] or BigML[47].

Undoubtedly, Artificial Intelligence will lead the next revolution. Its success will depend to a large extent on the creativity of the companies and not so much on the hardware technology, in part thanks to Cloud Computing.

An open-source world for the Deep Learning community

Deep Learning Frameworks (source: https://aws.amazon.com/ko/machine-learning/amis/)

Some years ago, Deep Learning required experience in languages such as C++ and CUDA; Nowadays, basic Python skills are enough. This has been possible thanks to the large number of open source software frameworks that have been appearing, such as Keras, central to our book. These frameworks greatly facilitate the creation and training of the models and allow abstracting the peculiarities of the hardware to the algorithm designer to accelerate the training processes.

The most popular at the moment are TensorFlow, Keras and PyTorch, because they are the most dynamic at this time if we rely on the contributors and commits or stars of these projects on GitHub[48].

In particular, TensorFlow has recently taken a lot of impulse and is undoubtedly the dominant one. It was originally developed by researchers and engineers from the Google Brain group at Google. The system was designed to facilitate Machine Learning research and make the transition from a research prototype to a production system faster. If we look at the Gihub page of the project[49] we will see that they have, at the time of writing this book, more than 35,000 commits, more than 1500 contributors and more than 100,000 stars. Not despicable at all.

TensorFlow is followed by Keras[50], a high level API for neural networks, which makes it the perfect environment to get started on the subject. The code is specified in Python, and at the moment it is able to run on top of three outstanding environments: TensorFlow, CNTK or Theano. Keras has more than 4500 commits, more than 700 contributors and more than 30,000 stars[51].

PyTorch and Torch[52] are two Machine Learning environments implemented in C, using OpenMP[53] and CUDA to take advantage of highly parallel infrastructures. PyTorch is the most focused version for Deep Learning and based on Python, developed by Facebook. It is a popular environment in this field of research since it allows a lot of flexibility in the construction of neural networks and has dynamic tensors, among other things. At the time of writing this book, Pytorch has more than 12,000 commits, around 650 contributors and more than 17,000 stars[54].

Finally, and although it is not an exclusive environment of Deep Learning, it is important to mention Scikit-learn[55], that is used very often in the Deep Learning community for the preprocessing of data[56]. Scikit-learn has more than 22500 commits, more than 1000 contributors and nearly 30,000 stars[57].

But as we have already advanced, there are many other frameworks oriented to Deep Learning. Those that we would highlight are Theano[58] (Montreal Institute of Learning Algorithms), Caffe[59] (University de Berkeley), Caffe2[60] (Facebook Research) , CNTK[61] (Microsoft), MXNET[62](supported by Amazon among others), Deeplearning4j[63], Chainer[64] , DIGITS[65] (Nvidia), Kaldi[66], Lasagne[67], Leaf[68], MatConvNet[69], OpenDeep[70], Minerva[71] and SoooA[72] , among many others.

An open-publication ethic

Source: ArXiv.org

In the last few years, in this area of research, in contrast to other scientific fields, a culture of open publication has been generated, in which many researchers publish their results immediately (without waiting for the approval of the peer review usual in conferences) in databases such as arxiv.org of Cornell University (arXiv)[73]. This implies that there are numerous softwares available in open source associated with these articles, which allow this field of research to move tremendously quickly, since any new discovery is immediately available for the whole community to see it and, if it is the case, build on top a new proposal.

This is a great opportunity for users of these techniques. The reasons for research groups to openly publishing their latest advances can be diverse. For example, articles rejected in main conferences in the area can propagate solely as a preprint on arxiv. This is the case of one key paper for the advancement of Deep Learning written by G. Hinton, N. Srivastava, A. Krizhevsky, I. Sutskever and R. Salakhutdinov that introduced Dropout mechanism[74]. This paper was rejected from NIPS in 2012[75].

Or Google, when publishing the results, consolidates its reputation as a leader in the sector, attracting the next wave of talent, which is one of the main obstacles to the advancement of the topic.

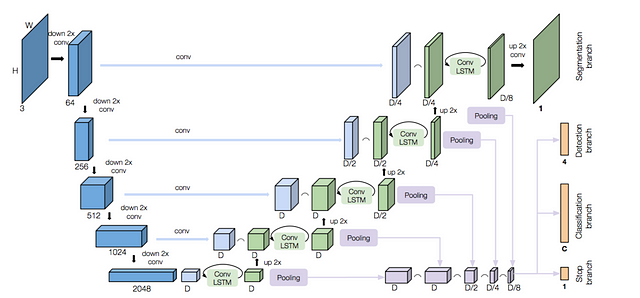

Advances in algorithms

Source: https://arxiv.org/pdf/1712.00617.pdf

Thanks to the improvement of the hardware that we have already presented and to having more computing capacity by the scientists who were researching in the area, it has been possible to advance dramatically in the design of new algorithms that have allowed to overcome important limitations detected in the previous algorithms. For example, not until many years ago it was very difficult to train multilayer networks from an algorithm point of view. But in this last decade there have been impressive advances with improvements in activation functions, use of pre-trained networks, improvements in training optimization algorithms, etc. Today, algorithmically speaking, we can train models of hundreds of layers without any problem.

REFERENCES

[7] The data that appear in this section are available at the time of writing this section (Spanish version of the book) at the beginning of the year 2018.

[8] Wikipedia, NoSQL. [online]. Available at: https://es.wikipedia.org/wiki/NoSQL [Accessed: 15/04/2018]

[9] The ImageNet Large Scale Visual Recognition Challenge (ILSVRC). [online]. Available at: www.image-net.org/challenges/LSVRC. [Accessed: 12/03/2018]

[10] MNIST [online]. Available at: http://yann.lecun.com/exdb/mnist/[Accessed: 12/03/2018]

[11] STL [online]. Available at: http://ai.stanford.edu/~acoates/stl10/[Accessed: 12/03/2018]

[12] See http://ccodataset.org

[13] See http://github.com/openimages/dataset

[14] See http://www.visualqa.org

[15] See http://ufldl.stanford.edu/housenumbers

[16] See http://www.cs.toronto.edu/~kriz/cifar.htmt

[17] See https://github.com/zalandoresearch/fashion-mnist

[18] See http://ai.stanford.edu/~amaas/data/sentiment

[19] See https://archive.ics.uci.edu/ml/datasets/Twenty+Newsgroups

[20] See https://archive.ics.uci.edu/ml/datasets/reuters-21578+text+categorization+collection

[21] See https://wordnet.princeton.edu

[22] See https://www.yelp.com/dataset

[23] See https://corpus.byu.edu/wiki

[24] See http://u.cs.biu.ac.il/~koppel/BlogCorpus.htm

[25] See http://statmt.org/wmt11/translation-task.html

[26] See https://github.com/Jakobovski/free-spoken-digit-dataset

[27] See https://github.com/mdeff/fma

[28] See http://mtg.upf.edu/ismir2004/contest/tempoContest/node5.html

[29] See https://labrosa.ee.columbia.edu/millionsong

[30] See http://www.openslr.org/12

[31] See http://www.robots.ox.ac.uk/~vgg/data/voxceleb

[32] See https://archive.ics.uci.edu/ml/machine-learning-databases/housing/housing.names

[33] See http://host.robots.ox.ac.uk/pascal/VOC/

[34] See https://www.plant-phenotyping.org/CVPPP2017

[35] See https://www.cityscapes-dataset.com

[36] Kaggle [online]. Available at: ttp://www.kaggle.com [Accessed: 12/03/2018]

[37] Empresas en la nube: ventajas y retos del Cloud Computing. Jordi Torres. Editorial Libros de Cabecera. 2011.

[38] Wikipedia. REST. [online]. Available at: https://en.wikipedia.org/wiki/Representational_state_transfer [Accessed: 12/03/2018]

[39] Amazon ML [online]. Available at: https://aws.amazon.com/aml/[Accessed: 12/03/2018]

[40] SageMaker [online]. Available at: https://aws.amazon.com/sagemaker/[Accessed: 12/03/2018]

[41] Azure ML Studio [online]. Available at: https://azure.microsoft.com/en-us/services/machine-learning-studio/ [Accessed: 12/03/2018]

[42] Azure Intelligent Gallery [online]. Available at: https://gallery.azure.ai[Accessed: 12/03/2018]

[43] Google Prediction API [online]. Available at: https://cloud.google.com/prediction/docs/ [Accessed: 12/03/2018]

[44] Google ML engine [online]. Available at: https://cloud.google.com/ml-engine/docs/technical-overview [Accessed: 12/03/2018]

[45] Watson Analytics [online]. Available at: https://www.ibm.com/watson/[Accessed: 12/03/2018]

[46] PredicSis [online]. Available at: https://predicsis.ai [Accessed: 12/03/2018]

[47] BigML [online]. Available at: https://bigml.com [Accessed: 12/03/2018]

[48] See https://www.kdnuggets.com/2018/02/top-20-python-ai-machine-learning-open-source-projects.html

[49] See https://github.com/tensorflow/tensorflow

[50] See https://keras.io

[51] See https://github.com/keras-team/keras

[53] See http://www.openmp.org

[54] See https://github.com/pytorch/pytorch

[55] See http://scikit-learn.org

[56] See http://scikit-learn.org/stable/modules/preprocessing.html

[57] See https://github.com/scikit-learn/scikit-learn

[58] See http://deeplearning.net/software/theano

[59] See http://caffe.berkeleyvision.org

[61] See https://github.com/Microsoft/CNTK

[62] See https://mxnet.apache.org

[63] See https://deeplearning4j.org

[65] See https://developer.nvidia.com/digits

[66] See http://kaldi-asr.org/doc/dnn.html

[67] See https://lasagne.readthedocs.io/en/latest/

[68] See https://github.com/autumnai/leaf

[69] See http://www.vlfeat.org/matconvnet/

[70] See http://www.opendeep.org

[71] See https://github.com/dmlc/minerva

[72] See https://github.com/laonbud/SoooA/

[74] G. Hinton, N. Srivastava, A. Krizhevsky, I. Sutskever and R. Salakhutdinov “Improving neural networks by preventing co-adaptation of feature detectors” https://arxiv.org/pdf/1207.0580.pdf

[75] See https://twitter.com/ChrisFiloG/status/1009594246414790657