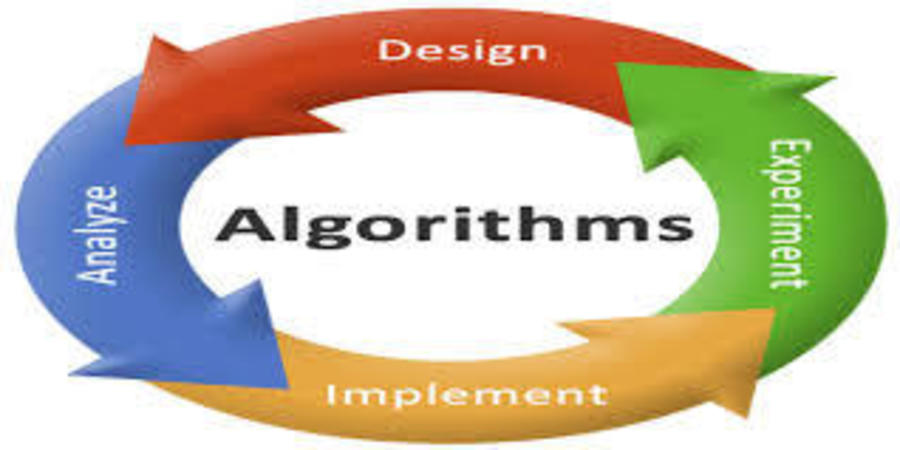

AIOps is an umbrella term for using complex infrastructure management and cloud monitoring tools to automate data analysis and routine DevOps operations. Processing all incoming machine-generated data in a timely manner is, of course, beyond human capacity. However, this is exactly the sort of task at which Artificial Intelligence (AI) algorithms, such as deep learning models, excel. The remaining question is how to put these Machine Learning (ML) tools to good use in the daily work of DevOps engineers. Here is how AIOps can help your IT department.