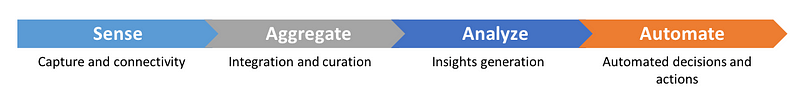

The three most prominent enterprise technologies right now are without doubt AI, blockchain, and IoT, and the driving factor behind them is data; people even go so far as to proclaim that “data is the new oil”. New technologies enable the collection, sharing, and analysis of data, and the automation of decisions based on it, in ways that haven’t been possible before, in what is essentially a data value chain.

Data Value Chain

Out of the three, blockchain technology is what assembles the pieces, and a whole ecosystem of data-driven blockchain projects is emerging. This decentralized ecosystem is set to help incentivize people to contribute data, technical resources, and effort:

- 1st generation projects have been focussing on creating the data infrastructure to connect and integrate data, e.g. IOTA, IoT Chain, or IoTex for data from connected IoT devices, or Streamr for streaming data.

- 2nd generation projects have been working on creating data marketplaces, e.g. Ocean Protocol, SingularityNet, or Fysical, and crowd data annotation platforms, e.g. Gems or Dbrain.

- With solutions covering the first steps on this data value chain maturing, my friends @sherm8n and Rahul started to work on Raven Protocol, a first 3rd generation project that will close an important gap at the analyze stage: Compute resources for AI training.

A recent OpenAI report showed that “the amount of compute used in the largest AI training runs has been increasing exponentially with a 3.5 month-doubling time”; this amounts to a 300,000x increase since 2012.

OpenAI Report: AI and Compute
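To get a feel for what these two numbers mean together, here is a small back-of-the-envelope sketch. It only uses the figures quoted above (a 3.5-month doubling time and a 300,000x increase); the function names are made up for illustration, and the calculation assumes clean exponential growth, which is a simplification.

```python
import math

# Figures quoted from the OpenAI report above.
DOUBLING_TIME_MONTHS = 3.5
TOTAL_GROWTH = 300_000


def doublings_needed(growth_factor: float) -> float:
    """Number of doublings required to reach a given growth factor."""
    return math.log2(growth_factor)


def months_to_grow(growth_factor: float, doubling_time_months: float) -> float:
    """Months needed to reach a growth factor at a fixed doubling time."""
    return doublings_needed(growth_factor) * doubling_time_months


def growth_after(months: float, doubling_time_months: float) -> float:
    """Growth factor accumulated after a number of months."""
    return 2 ** (months / doubling_time_months)


if __name__ == "__main__":
    n = doublings_needed(TOTAL_GROWTH)
    m = months_to_grow(TOTAL_GROWTH, DOUBLING_TIME_MONTHS)
    per_year = growth_after(12, DOUBLING_TIME_MONTHS)
    print(f"doublings for {TOTAL_GROWTH:,}x: {n:.1f}")
    print(f"months at a {DOUBLING_TIME_MONTHS}-month doubling time: {m:.1f}")
    print(f"growth factor per year: {per_year:.1f}x")
```

Under these assumptions, a 300,000x increase corresponds to roughly 18 doublings, or a little over five years of growth, and the compute used in the largest runs grows by about a factor of ten every year.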

The immediate consequences of this are:

- Higher costs, as used compute is increasing faster than supply

- Longer lead times for new solutions, as model training takes longer

- Increased market entry barriers based on access to funding & resources

These consequences can prove dire for smaller firms and researchers, limiting their ability to create competitive models without significant funding. And even with funding, they might be blacklisted from resources if they’re considered competition by the providers.

“So Gavin Belson can just pick up his phone and make us radioactive to every single web hosting service?”

But big corporations will feel the costs as well, because the growth in compute needed per model is multiplied by the growth of their own AI efforts. I spoke to a few Chief Data Officers of Fortune 500 firms over the past months, and while they don’t consider it an issue yet, even they have to agree that they can invest their money in better ways than in bought-in HPC resources.

The beauty of blockchain ecosystems is that they allow us to tap into otherwise unused resources, trade resources that would not have been tradable, and let people participate in a market who otherwise could not. From an economic perspective, this improves the leverage on existing resources.

Where 1st & 2nd generation data blockchain solutions used this to lower the barrier for access to annotated quality data, Raven Protocol is going to solve the training cost challenge. It closes the gap that would have kept the proverbial chain, which is only as strong as its weakest link, from holding (hint: it’s the data value chain).

Together, the solutions in this blockchain data ecosystem create new opportunities and lower costs. The second point is especially important, as it lowers the entry barriers for new innovation, enabling more people to contribute and therefore potentially accelerating our progress as a society.

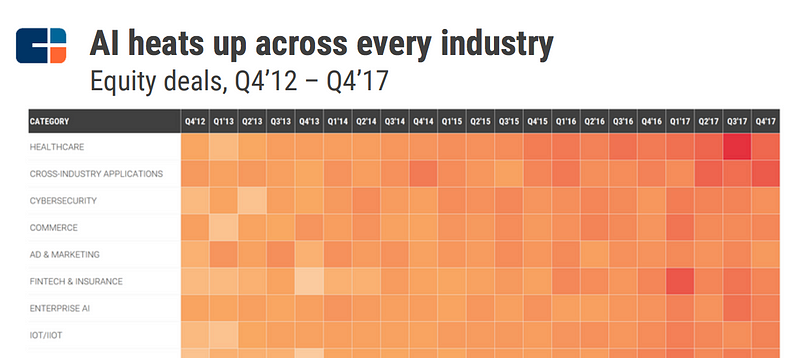

CBInsights: The State of Artificial Intelligence 2018

However, access to data and high costs create an entry barrier, which would limit research into new solutions to incumbents & other big players. The blockchain data ecosystem changes that and increases our chances of finding the right solutions in time. Raven Protocol is probably not the last stepping stone, but it is an important one in making that work.