It’s expected that by 2020, up to 65% of enterprises will be using the Internet of Things (IoT). IoT and the cloud are closely intertwined, yet only about a third of the data collected by the growing army of sensors is analyzed at the source.

While IoT has many advantages, enterprises need to overcome some important problems in cloud computing to fully realize them. One of the main issues is reducing bandwidth demand: instead of sending every bit of information over cloud channels, data can first be aggregated at certain access points. Accomplishing this requires heavy investment, but the increased costs are justified by the resulting reduction in inefficiencies.
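To make the bandwidth point concrete, here is a minimal Python sketch of aggregating readings at an access point before anything goes to the cloud. The function names, the window size, and the temperature samples are all hypothetical, chosen only for illustration; a real gateway would pick its own summary statistics.

```python
from statistics import mean


def aggregate_window(readings):
    """Collapse one window of raw sensor readings into a single summary record."""
    if not readings:
        raise ValueError("readings must not be empty")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }


def summarize_stream(readings, window_size):
    """Yield one summary per window of up to `window_size` readings."""
    for start in range(0, len(readings), window_size):
        yield aggregate_window(readings[start:start + window_size])


# Hypothetical temperature samples collected at a gateway.
samples = [21.0, 21.5, 22.0, 22.5, 30.0, 30.5]
summaries = list(summarize_stream(samples, window_size=3))

# Six raw readings become two summary records sent upstream.
print(len(samples), "readings ->", len(summaries), "summaries")
```

In this sketch the cloud receives one small record per window rather than every raw sample, which is the trade the paragraph above describes.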

What do you mean by “things?”

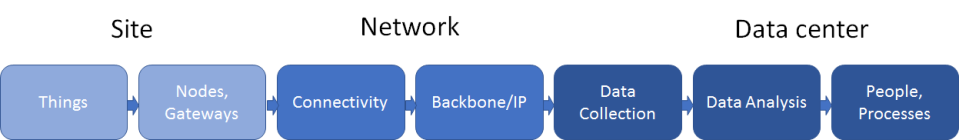

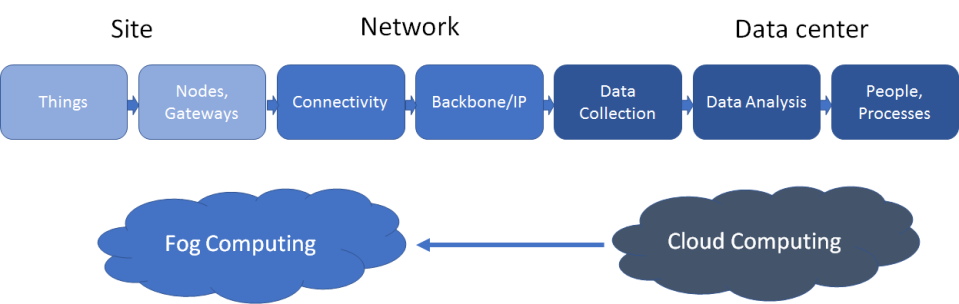

IoT typically has three main components:

- Site (things, nodes/gateways)

- Network (connectivity, backbone/IP)

- Data center (data integration, data analysis, people/processes).

Enterprises have been running all of the above in the cloud, which has led to challenges with latency, mobility, geography, network bandwidth, reliability, security, and privacy. To overcome these challenges, Cisco introduced Fog computing to extend cloud computing to the edge of an enterprise’s network.

Going from the cloud into the fog

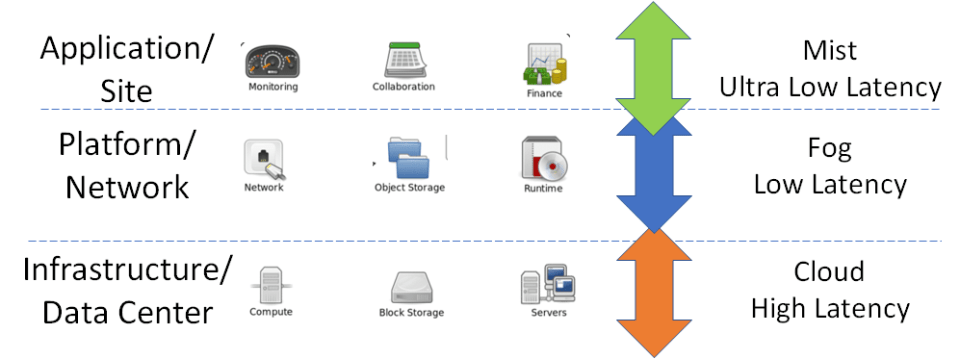

Also known as Edge computing or “Fogging,” Fog computing provides compute, storage, and networking services between end devices and cloud computing data centers. It offers an intermediate level of computing power, with the data center hosted in the cloud, while all other peripheral devices are managed using Fog computing.

Enterprises have benefited from Fog computing, as it extends the cloud-computing paradigm to the edge. However, some applications and services do not fit this paradigm because of technical and infrastructural limitations, and serving them puts stress on the network. This has led architects to look at a lightweight, rudimentary form of computing power that resides directly within the network fabric. This is where Mist computing comes in.

Mist computing operates at the extreme edge of the network fabric. It uses microcomputers and microcontrollers to feed into Fog computing nodes, and can potentially extend onward towards cloud-computing platforms.
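As a rough illustration of what a Mist node might do before feeding a Fog node, the Python sketch below forwards a reading only when it differs enough from the last value sent. This deadband (report-by-exception) approach is an assumption for the example, not something the architecture prescribes, and the function name, threshold, and readings are hypothetical.

```python
def deadband_filter(readings, threshold):
    """Keep only readings that differ from the last forwarded value by more than `threshold`."""
    forwarded = []
    last = None
    for value in readings:
        # Always forward the first reading; afterwards, forward only significant changes.
        if last is None or abs(value - last) > threshold:
            forwarded.append(value)
            last = value
    return forwarded


# Hypothetical readings sampled on a microcontroller.
raw = [20.0, 20.1, 20.2, 21.5, 21.6, 19.0]
sent_to_fog = deadband_filter(raw, threshold=1.0)
print(sent_to_fog)  # [20.0, 21.5, 19.0]
```

The logic is simple enough to run on very small devices, which fits the lightweight role described above.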

With the advent of Fog and Mist computing, applications that require rapid, time-critical actions (millisecond reaction times), such as autonomous vehicles, emergency response services, drones, and virtual reality, have become safer, more predictable, and more efficient.
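One way to think about those reaction times is as a latency budget that decides where a task should run. The Python sketch below picks the most centralized tier that still fits the budget. The latency figures are illustrative assumptions rather than measurements, and `choose_tier` is a hypothetical helper.

```python
# Illustrative round-trip latencies in milliseconds; assumptions, not measurements.
TIER_LATENCY_MS = {"mist": 1, "fog": 10, "cloud": 100}

# Tiers ordered from most to least centralized.
PREFERENCE = ["cloud", "fog", "mist"]


def choose_tier(latency_budget_ms):
    """Return the most centralized tier whose assumed latency fits the budget, or None."""
    for tier in PREFERENCE:
        if TIER_LATENCY_MS[tier] <= latency_budget_ms:
            return tier
    return None


print(choose_tier(500))  # cloud
print(choose_tier(50))   # fog
print(choose_tier(5))    # mist
```

Under these assumed numbers, a task with a 5 ms budget can only be served at the Mist tier, while one that can wait half a second can go all the way to the cloud.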

Experts in the exploration sector of the asset- and data-intensive oil and gas industry can obtain real-time subsurface imaging and monitoring data to reduce exploratory well drilling. This not only saves money, but also minimizes environmental damage. Mist computing enables thousands of seismic sensors to generate the high-resolution imaging required to discover risks and opportunities.

As shown above, by tackling latency with a combination of Cloud, Fog, and Mist computing, you can transform faster and build smart cities, drone-enabled supply chains, remote energy extraction and exploration, smart traffic, video surveillance, virtual reality, environmental conservation, and emergency response solutions.

So even with all these clouds, fog and mist, the future is still looking pretty bright.