The Current Software Engineering Landscape

Today’s software engineering (SE) practices are stretched between increasingly complex component development and the need to automate the software development lifecycle. On the software expert side, a plethora of new enabling technologies promises to simplify the development process; on the end-user side, however, the demand for rapid development of domain-specific applications grows faster than any new technology can cope with.

Going rapidly from idea conception to product launch – even if it is only a minimum viable product – has become a necessity in order to stay competitive and to test solutions, products and services with the fewest possible resources (time and effort).

When creating a software product, developers typically have to identify the basic requirements, define (semi-)formal specifications, sketch a draft system architecture, and proceed with software prototyping, where they usually follow hybrid architectures, integrating open source code and/or components from (their own) proprietary software repositories. These phases may be repeated iteratively, which adds overhead. In particular, searching for the most suitable – and inter-compatible – software resources may introduce significant delays in the SE process, as reconfiguration and extensive testing are generally required.

In practice, one could benefit from reusing existing software solutions, both open source and proprietary, provided that they are appropriately validated so that they lead to quality software.

Understanding Why Software Projects Fail

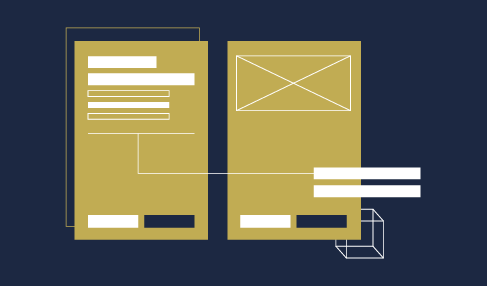

It is common knowledge that the success or failure of a software project depends on a number of parameters that may or may not have anything to do with the approach followed or the complexity of the problem. In fact, Barry Boehm [1], in 2002, identified six top reasons for the failure of a software project, namely incomplete requirements, lack of user involvement, lack of resources, unrealistic expectations, lack of executive support, and changes in requirements and specifications. A more recent study argues that software project complexity (and thus unpredictability) results from the convergence of three factors: User Requirements, Technology, and People (a view often referred to as the Stacey Matrix or Stacey Graph). Applied to software development, the Stacey Matrix places software projects into four categories according to the degree of agreement on user requirements and of certainty about technology: Simple, Complicated, Complex, and Chaotic (Figure 1).

Figure 1. Success and failure of software project based on methodology

To improve their success rate, software projects should stay out of the Chaotic category. Decomposing a software project into well-designed components would reduce its complexity and, in turn, increase its chances of success.

Introducing Data Mining into the Picture

To this end, data mining could prove an appropriate path towards generating reusable, well-defined and quality-tested software artifacts, which can be integrated with minimum effort, thus leading to successful software projects. Data mining for Software Engineering can be (and has been) applied at various levels of abstraction: a) at the software model/team management level, where static and dynamic mining techniques aim to localize and predict software bugs, b) at the software requirements/software design level, where association analysis and clustering techniques are performed for generating recommendations, c) at the software development/source code level, where techniques are applied in order to identify quality characteristics, as well as the reusability potential of source code, and d) at the operation/live-mode level, where outlier analysis techniques are performed for early diagnosis and self-healing of intelligent software systems (such as autonomous robots, IoT systems, and software agents).

a. Predicting Software Bugs

Lately, several researchers have focused on Software Reliability Prediction (SRP). The problem at hand is to discover defect-prone components in a software project, either pre-release or post-release. The main aim is thus to predict whether a source code artifact contains code that either already has defects or is likely to develop them. The term “component” (defined as software artifacts in a wider context) may refer to a class or a package (in an object-oriented perspective), but higher-level components or even “inter-project” level components (e.g. software projects or web services) are also possible. In the context of Software Reliability, current data mining approaches focus on predicting defect-prone components [2-6] using several quantitative metrics that are known to be satisfactory predictors of reliability [3].
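To make the idea of metric-based defect prediction more concrete, here is a minimal sketch in Python. It is not the method of any of the cited papers: the three metrics, the training values, and the choice of a k-nearest-neighbours vote are all assumptions made purely for illustration.

```python
import math

# Hypothetical training data (illustrative values, not from any real project):
# (lines of code, cyclomatic complexity, number of past changes) -> was defective?
TRAINING = [
    ((120, 4, 2), False),
    ((150, 5, 3), False),
    ((90, 3, 1), False),
    ((800, 25, 14), True),
    ((650, 30, 20), True),
    ((720, 22, 17), True),
]


def make_scaler(rows):
    """Build a min-max scaler from the metric vectors in rows."""
    lows = [min(col) for col in zip(*rows)]
    highs = [max(col) for col in zip(*rows)]

    def scale(vec):
        # Map each metric to [0, 1] so that no single metric dominates the distance.
        return tuple(
            (v - lo) / (hi - lo) if hi > lo else 0.0
            for v, lo, hi in zip(vec, lows, highs)
        )

    return scale


def predict_defect_prone(metrics, training=TRAINING, k=3):
    """Majority vote among the k training components closest to metrics."""
    scale = make_scaler([m for m, _ in training])
    target = scale(metrics)
    nearest = sorted(
        training, key=lambda item: math.dist(scale(item[0]), target)
    )[:k]
    votes = sum(1 for _, defective in nearest if defective)
    return votes * 2 > k


if __name__ == "__main__":
    print(predict_defect_prone((700, 24, 15)))  # large, complex, frequently changed
    print(predict_defect_prone((100, 4, 2)))    # small, simple, rarely changed
```

In a real setting the training set would come from a project's history (for example, bug-fix commits linked to classes), and the classifier would be evaluated on held-out releases before being trusted.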

b. Enhancing the Software Requirements Process

Requirements Engineering may refer to any process and/or artifact related to software requirements, while the “given context” refers to the project-specific information provided by the developers and the stakeholders of a project. The need for proper identification of software requirements is crucial, since reengineering costs resulting from poorly specified requirements are considerably high [7]. In this context, and given the popularity of open source software initiatives and the adoption of diverse methodologies, several researchers have proposed data mining techniques and recommendation systems technologies [8]. This way they aspire to identify the features of a system [9], non-functional characteristics [10], and even the relation between requirements and stakeholders [11].
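As a rough illustration of how a recommender might surface related requirements, the sketch below ranks known requirements by keyword overlap (Jaccard similarity) with a new one. The requirement texts, the stop-word list, and the function names are hypothetical; the systems surveyed in [8] rely on considerably richer techniques.

```python
import re

# Hypothetical stop-word list, kept tiny for the sake of the example.
STOP_WORDS = {
    "the", "a", "an", "to", "of", "and", "shall", "must", "be", "able",
    "user", "system", "can", "their", "in", "with", "for",
}


def keywords(text):
    """Lower-case the text and keep the alphabetic words that are not stop words."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS}


def jaccard(a, b):
    """Size of the intersection divided by the size of the union of two sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend_similar(new_requirement, known_requirements, top_n=2):
    """Return the top_n known requirements most similar to the new one."""
    target = keywords(new_requirement)
    ranked = sorted(
        known_requirements,
        key=lambda req: jaccard(target, keywords(req)),
        reverse=True,
    )
    return ranked[:top_n]


# Hypothetical requirements from earlier projects.
KNOWN = [
    "The user shall be able to reset a forgotten password by email",
    "The system shall export monthly reports as PDF",
    "The user shall log in with email and password",
]

if __name__ == "__main__":
    for req in recommend_similar("Users must change their password after login", KNOWN):
        print(req)
```

Keyword overlap is deliberately naive (it does not even match "login" with "log in"); it is shown only because it makes the ranking step easy to follow.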

c. Mining Source Code

Given the flourishing of the open-source software paradigm, mining information from source code repositories has also become very popular. Various approaches exist; some aim to improve auto-correction [12] or find syntax errors [13] in order to assist developers while they write code. Other approaches aspire to identify the structural patterns of a programming language by extracting Abstract Syntax Trees [14] and N-gram language models [15], thus leading to conclusions about the quality characteristics of software. Mining code snippets [16] and APIs in order to identify similar code patterns is also extremely popular in Code Search Engines, while frequent tree mining algorithms have been used extensively [17] to identify common behavior patterns, with a focus on the reuse of software components.

d. Identifying Faulty Behaviors of Intelligent Software

Autonomous software systems have to operate under an open-world assumption and need increasingly capable decision mechanisms in order to act and react appropriately. Robustness is critical, so failure detection and handling, as well as adapting behavior based on system health and detected faults, are essential [18]. Fault diagnosis consists of detecting anomalies in the behavior of systems and subsystems. Early fault detection can improve the performance of software systems [19] and can also help during testing and debugging. Statistical methods, such as particle filters, neural networks and Bayesian methods, have been used [20] to help with fault detection and identification. Lately, other machine learning techniques, namely Gaussian mixture models (GMMs) [21] and one-class support vector machines (SVMs) [22], seem to be a better fit for reducing software “failures” to a minimum, thus requiring as little human intervention as possible.
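The core of outlier-based fault detection can be shown with a much simpler stand-in than the GMMs and one-class SVMs mentioned above: a single Gaussian fitted to readings taken during normal operation, with new readings flagged when they fall too many standard deviations from the mean. The sensor readings, the threshold, and the function names below are hypothetical.

```python
import statistics

# Hypothetical motor-current readings (in amperes) recorded during normal operation.
NORMAL_READINGS = [1.0, 1.1, 0.9, 1.05, 0.95, 1.0, 1.02, 0.98]


def fit_normal_profile(readings):
    """Summarise normal behaviour as the (mean, standard deviation) of the readings."""
    return statistics.fmean(readings), statistics.stdev(readings)


def is_anomalous(value, profile, threshold=3.0):
    """Flag a reading lying more than threshold standard deviations from the mean."""
    mean, std = profile
    if std == 0:
        # Degenerate profile: any deviation from the constant value is suspicious.
        return value != mean
    return abs(value - mean) / std > threshold


if __name__ == "__main__":
    profile = fit_normal_profile(NORMAL_READINGS)
    for reading in (1.04, 1.6):
        print(reading, is_anomalous(reading, profile))
```

A mixture model or a one-class SVM plays the same role as `fit_normal_profile` here, but can describe normal behaviour that has several operating modes or depends on many sensors at once.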

Each of these categories will be discussed in depth in the blog posts to come, with emphasis on the advantages of applying data mining to the various facets of software engineering.

References

[1] Boehm, B.: Software engineering is a value-based contact sport. Software, IEEE 19.5: 95-96 (2002).

[2] Diamantopoulos, T., and Symeonidis, A.L. Towards Interpretable Defect-Prone Component Analysis using Genetic Fuzzy Systems. In Proceedings of the IEEE/ACM 4th International Workshop on Realizing Artificial Intelligence Synergies in Software Engineering (RAISE’15), pp. 32-38, Florence, Italy, 2015.

[3] Marco D’Ambros, Michele Lanza, and Romain Robbes. Evaluating Defect Prediction Approaches: A Benchmark and an Extensive Comparison. Empirical Software Engineering, 17(4–5):531–577, August 2012.

[4] S. R. Chidamber and C. F. Kemerer. A Metrics Suite for Object Oriented Design. IEEE Transactions on Software Engineering, 20(6):476–493, June 1994.

[5] F. Brito e Abreu and W. Melo. Evaluating the Impact of Object-Oriented Design on Software Quality. In Proceedings of the 3rd International Software Metrics Symposium, pages 90–99, Mar 1996.

[6] Raimund Moser, Witold Pedrycz, and Giancarlo Succi. A Comparative Analysis of the Efficiency of Change Metrics and Static Code Attributes for Defect Prediction. In Proceedings of the 30th International Conference on Software Engineering, pages 181–190, New York, USA, 2008.

[7] Dean Leffingwell. Calculating your return on investment from more effective requirements management. American Programmer, 10(4):13–16, 1997.

[8] A. Felfernig, G. Ninaus, H. Grabner, F. Reinfrank, L. Weninger, D. Pagano, and W. Maalej. An overview of recommender systems in requirements engineering. In Walid Maalej and Anil Kumar Thurimella, editors, Managing Requirements Knowledge, pages 315–332. Springer Berlin Heidelberg, 2013.

[9] Vander Alves, Christa Schwanninger, Luciano Barbosa, Awais Rashid, Peter Sawyer, Paul Rayson, Christoph Pohl, and Andreas Rummler. An exploratory study of information retrieval techniques in domain analysis. In Proceedings of the 2008 12th International Software Product Line Conference, SPLC ’08, pages 67–76, Washington, DC, USA, 2008. IEEE Computer Society.

[10] Jose Romero-Mariona, Hadar Ziv, and Debra J. Richardson. SRRS: A recommendation system for security requirements. In Proceedings of the 2008 International Workshop on Recommendation Systems for Software Engineering, RSSE ’08, pages 50–52, New York, NY, USA, 2008. ACM.

[11] Bamshad Mobasher and Jane Cleland-Huang. Recommender systems in requirements engineering. The AI magazine, 32(3):81–89, 2011.

[12] M. Allamanis, E. T. Barr, C. Bird, and C. Sutton. Learning natural coding conventions. arXiv preprint arXiv:1402.4182, 2014.

[13] J. Charles, A. Hindle, and J. N. Amaral. Syntax errors just aren’t natural: Improving error reporting with language models.

[14] R. Holmes and G.C. Murphy. Using structural context to recommend source code examples. In Proceedings of the 27th International Conference on Software Engineering, ICSE 2005, pages 117–125, St. Louis, MO, USA, May 2005.

[15] T. T. Nguyen, A. T. Nguyen, H. A. Nguyen, and T. N. Nguyen. A statistical semantic language model for source code. In FSE, 2013.

[16] S. Subramanian and R. Holmes. Making sense of online code snippets. In Proceedings of the 2013 10th IEEE Working Conference on Mining Software Repositories (MSR), pages 85–88, May 2013.

[17] A. Jiménez, F. Berzal, and J.-C. Cubero. Frequent tree pattern mining: A survey. Intelligent Data Analysis, 14(6):603–622, 01 2010.

[18] Bischoff, R., Guhl, T., Prassler, E., Nowak, W., Kraetzschmar, G., Bruyninckx, H., Soetens, P., Haegele, M., Pott, A., Breedveld, P., et al., 2010. BRICS-Best practice in robotics. In: Robotics (ISR), 2010 41st International Symposium on and 2010 6th German Conference on Robotics (ROBOTIK). VDE, pp. 1-8.

[19] Bererton, C., Khosla, P., 2002. An analysis of cooperative repair capabilities in a team of robots. In: Robotics and Automation, 2002. Proceedings. ICRA’02. IEEE International Conference on. Vol. 1. IEEE, pp. 476-482.

[20] Verma, V., Gordon, G., Simmons, R., Thrun, S., 2004. Real-time fault diagnosis [robot fault diagnosis]. Robotics & Automation Magazine, IEEE 11 (2), 56-66.

[21] Murphy, K. P., 2012. Machine learning: a probabilistic perspective. The MIT Press.

[22] Schoelkopf, B., Platt, J. C., Shawe-Taylor, J., Smola, A. J., Williamson, R. C., 2001. Estimating the support of a high-dimensional distribution. Neural computation 13 (7), 1443-1471.