AI bias doesn’t come from AI algorithms, it comes from people. What does that mean and what can we do about it?

Technology is not free of humans

No technology is free of its creators. Despite our fondest sci-fi wishes, there’s no such thing as ML/AI systems that are truly separate and autonomous… because they start with us.

All technology is an echo of the wishes of whoever built it.

This isn’t just about ML/AI; any technology you’re in danger of seeing as independent is simply the kind whose effect lingers longer after your button press.

No matter how complex its echo, technology always comes from and is designed by people, which means it’s no more objective than we are. This makes the definition of algorithmic bias problematic.

Algorithmic bias refers to situations where a computer system reflects the implicit values of the humans who created it. But doesn’t all technology reflect its creators’ implicit values? And if you think humans can be completely unbiased, take a stroll through this list…

Data and math don’t equal objectivity

If you’re looking to AI as your savior from human foibles, tread carefully.

Math can obscure the human element and give an illusion of objectivity.

Sure, data and math can increase the amount of information you use in decision-making and/or save you from heat-of-the-moment silliness, but how you use them is still up to you.

According to Wikipedia:

Objectivity is a philosophical concept of being true independently from individual subjectivity caused by perception, emotions, or imagination.

Tragicomically, a layer of math and data spread on top of the host of utterly subjective choices (What shall we apply AI to? Is it worth doing? In which circumstances? How shall we define success? How well does it need to work? etc.) obscures the ever-present human element and gives an illusion of objectivity.

Look, I know sci-fi sells. It’s much flashier to say, “The AI learned to do this task all by itself…” than to tell the truth: “People used a tool with a cool name to help them write code. They fed in examples they considered appropriate, found some patterns in them, and turned those patterns into instructions. Then they checked whether they liked what those instructions do for them.”

The truth drips with human subjectivity — look at all those little choices along the way which are left up to people running the project. Wrapping them in a glamorous coat of math doesn’t make the core any less squishy.

When math is applied towards a purpose, that purpose is still shaped by the sensibilities of our times.

To make matters worse, the whole point of AI is to let you explain your wishes to a computer using examples (data!) instead of instructions. Which examples? Hey, that depends on what you’re trying to teach your system to do. Datasets are like textbooks for your student to learn from. Guess what? Textbooks have authors.

When I said that “bias doesn’t come from AI algorithms, it comes from people” some folks wrote to tell me that I’m wrong because bias comes from data. Well, we can both be winners… because people make the data.

Textbooks reflect the biases of their authors. Like textbooks, datasets have authors. They’re collected according to instructions made by people.

Other design choices like launch criteria, data population, and more are also entirely up to human decision-makers, which is why it’s so important that you take care to choose your project leaders wisely and train them well.

It’s safest to think of AI as a tool for writing code.

Please don’t fall victim to the sci-fi hype. You already know that computer code is written by people, so it reflects their implicit values. Think of AI as an excellent tool for writing code — because that’s what it is — so the same basics hold. Remember to design and test all technology carefully, especially when it scales.

This is no excuse to be a jerk

Machines are just tools. They’re extensions of their creators, who, according to the gory definition in the last bullet point of this list (“when our past experiences distort our perception of and reaction to information”), are biased.

All of us have had our perceptions shaped by our past experiences, and all of us are products of our personal histories. In that sense, every single human is biased.

Philosophical arguments invalidating the existence of truly unbiased and objective technology don’t give anyone an excuse to be a jerk. If anything, the fact that you can’t pass the ethical buck to a machine puts more responsibility on your shoulders, not less.

Sure, our perceptions are shaped by our times. Societal ideas of virtue, justice, kindness, fairness, and honor aren’t the same today as they were for people who lived a few thousand years ago, and they may keep changing. That doesn’t make the ideas unimportant; it only means we can’t outsource them to a heap of wires. They’re the responsibility of all of us, together. We should strive to do our best ethically and treat all people with the highest standards of respect and care.

Fairness in AI

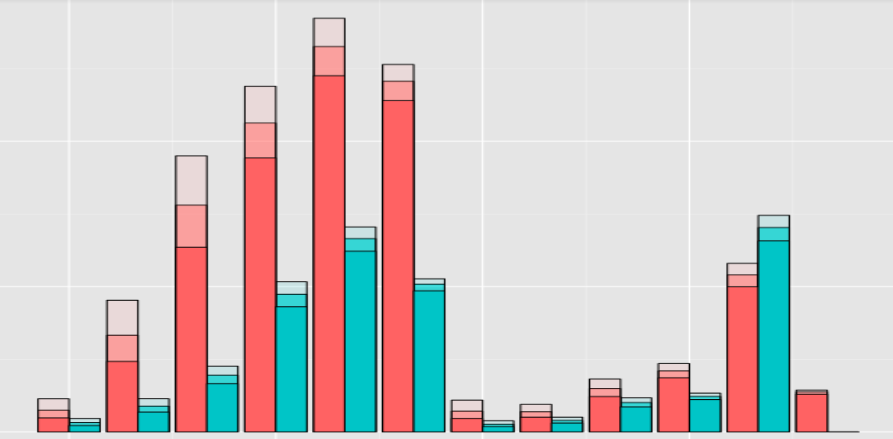

Another choice is which data to use for AI. You should expect better performance on examples that are similar to what your system learned from. If you choose not to use data from people like me, your system is more likely to make a mistake when I show up as your user. It’s your duty to think about the pain you could cause when that happens. Yes, it takes effort and imagination (and extensive analytics).

At a bare minimum, I hope you’d have the common sense to check whether the distribution of your user population matches the distribution in your data. For example, if 100% of your training examples come from residents of a single country, but your target users are global… expect a mess. Your system will treat that country’s residents less sloppily than everyone else. Is that fair?
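As a rough illustration of that bare-minimum check, here is a minimal Python sketch that compares the share of each country in the training data with its share among users. Everything in it is an assumption made for illustration: the country labels, the helper names (`proportions`, `total_variation_distance`, `underrepresented`), the choice of total variation distance as the summary number, and the `min_ratio` threshold. A real project would pick its own attributes and thresholds, and those picks are human choices too.

```python
from collections import Counter


def proportions(labels):
    """Return each label's share of the total as a dict."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


def total_variation_distance(p, q):
    """Half the sum of absolute differences in share; 0 = identical, 1 = disjoint."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def underrepresented(train_share, user_share, min_ratio=0.5):
    """Groups whose training share is below min_ratio times their user share.

    min_ratio is an arbitrary illustrative threshold, not a standard.
    """
    return sorted(
        group
        for group, share in user_share.items()
        if train_share.get(group, 0.0) < min_ratio * share
    )


# Hypothetical data: every training example comes from country "A",
# while the target users are spread across three countries.
training_countries = ["A"] * 100
user_countries = ["A"] * 25 + ["B"] * 50 + ["C"] * 25

train_share = proportions(training_countries)
user_share = proportions(user_countries)

tvd = total_variation_distance(train_share, user_share)
gaps = underrepresented(train_share, user_share)

print(f"Total variation distance: {tvd:.2f}")
print(f"Underrepresented in training data: {gaps}")
```

A distance near zero says the two populations look alike on this one attribute. It says nothing about attributes you didn't think to check, which is where the effort and imagination come in.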

If you’re keen to take your fairness analytics to the next level, there are specialized tools out there to help you. One of my favorites is the What-If Tool.

Fair and aware

There are many choices that people are responsible for along the way to AI. Half of the solution is striving to design systems to be good and fair and just to everyone. The other half is annihilating your ignorance of the consequences of your choices along the way. Think. Carefully. (And use analytics to help you think even more carefully.)

If you want to strive for fairness, work to annihilate your own ignorance of the consequences of your decisions.

I’ve written a lot of words here, when I could have just told you that most of the research on the topic of bias and fairness in ML/AI is about making sure that your system doesn’t have a disproportionate effect on some group of users relative to others. The primary focus is on distribution checks and similar analytics. The reason I wrote so much is that I want you to do even better. Automated distribution checks only go so far. No one knows a system better than its creators, so if you’re building one, take the time to think.
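To make "disproportionate effect" a little more concrete, here is one simple way such a check might look in Python: compute the error rate separately for each group of users and look at the gap between the best-served and worst-served groups. This is only a sketch under stated assumptions. The group names, the toy records, and the helpers `error_rate_by_group` and `largest_gap` are all hypothetical, and error rate is just one of many metrics you could compare.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Compute the fraction of wrong predictions for each group.

    records: iterable of (group, predicted_label, actual_label) tuples.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def largest_gap(rates):
    """Difference between the worst and best per-group error rates."""
    return max(rates.values()) - min(rates.values())


# Hypothetical evaluation records: (group, prediction, ground truth).
records = [
    ("group_x", 1, 1), ("group_x", 0, 0), ("group_x", 1, 1), ("group_x", 0, 1),
    ("group_y", 1, 0), ("group_y", 0, 1), ("group_y", 1, 1), ("group_y", 0, 0),
]

rates = error_rate_by_group(records)
gap = largest_gap(rates)

for group, rate in sorted(rates.items()):
    print(f"{group}: error rate {rate:.2f}")
print(f"Largest gap between groups: {gap:.2f}")
```

How large a gap is acceptable, and which groups to compare in the first place, are not questions the code can answer for you.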

Think about how your actions will affect people and do your best to give those you’ll affect a voice to guide you through your blind spots.