Data Augmentation Method to improve SBERT Bi-Encoders for Pairwise Sentence Scoring Tasks (Semantic sentence tasks)

Background and challenges

Most state-of-the-art NLP architectures today build on BERT, a model pre-trained on large text corpora such as Wikipedia and the Toronto Books Corpus [1]. Many variants have followed, such as DeBERTa, RetriBERT, and RoBERTa, and they achieve substantial improvements on benchmarks across a variety of language understanding tasks. Among common NLP tasks, pairwise sentence scoring has a wide range of applications, including information retrieval, question answering, duplicate question detection, and clustering. Two approaches are typical: Bi-encoders and Cross-encoders.

- Cross-encoders [2]: perform full (cross) self-attention over a given input and label candidate, and tend to attain much higher accuracy than Bi-encoders. However, they must recompute the encoding for every input-candidate pair. Because they do not yield independent representations for the inputs, they cannot be used for end-to-end information retrieval and are prohibitively slow at test time. For example, clustering 10,000 sentences has quadratic complexity and would take about 65 hours with BERT [4].

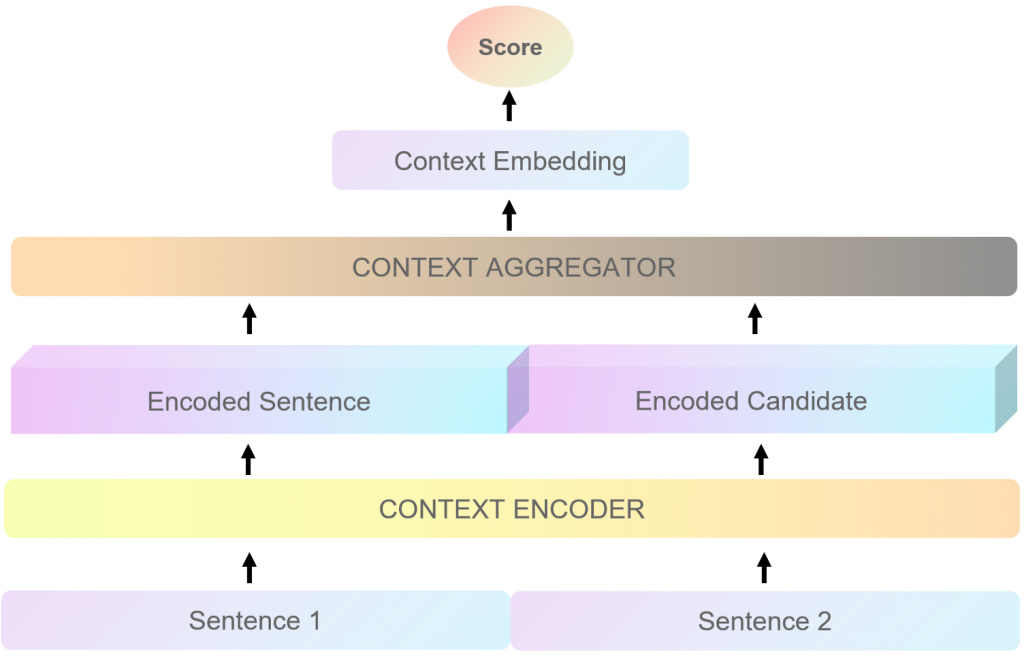

- Bi-encoders [3]: perform self-attention over the input and the candidate label separately, map each to a dense vector space, and combine them at the end for a final representation. The encoded candidates can therefore be indexed and compared against each new input, which makes prediction fast. For the same task of clustering 10,000 sentences, the time drops from 65 hours to about 5 seconds [4]. A well-known Bi-encoder BERT model is Sentence-BERT (SBERT), presented by the Ubiquitous Knowledge Processing Lab (UKP-TUDA). For more details, this article gives a hands-on tutorial on using SBERT Bi-encoders.
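The speed gap between the two approaches follows from how many transformer forward passes each one needs. Here is a small arithmetic sketch; the function names are illustrative and do not come from any library. A Cross-encoder has to score every unordered pair jointly, while a Bi-encoder encodes each sentence once and then compares cheap vectors.

```python
from math import comb


def cross_encoder_passes(n_sentences: int) -> int:
    """Forward passes when every unordered sentence pair is scored jointly."""
    return comb(n_sentences, 2)  # n * (n - 1) / 2


def bi_encoder_passes(n_sentences: int) -> int:
    """Forward passes when each sentence is encoded exactly once."""
    return n_sentences


n = 10_000
print(cross_encoder_passes(n))  # 49995000
print(bi_encoder_passes(n))     # 10000
```

For 10,000 sentences that is roughly 50 million joint passes against 10,000 independent ones, which is why the quadratic cost dominates.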

No method is perfect, however, and Bi-encoders are no exception. They usually achieve lower performance than Cross-encoders and require a large amount of training data. The reason is that Cross-encoders can compare both inputs simultaneously, while Bi-encoders must map each input independently to a meaningful vector space, which takes a sufficient number of training examples to fine-tune.

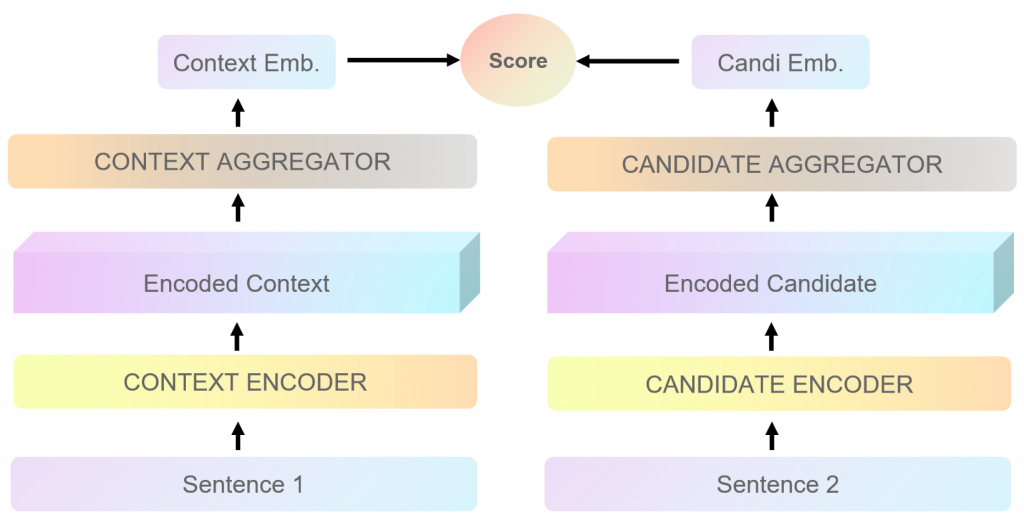

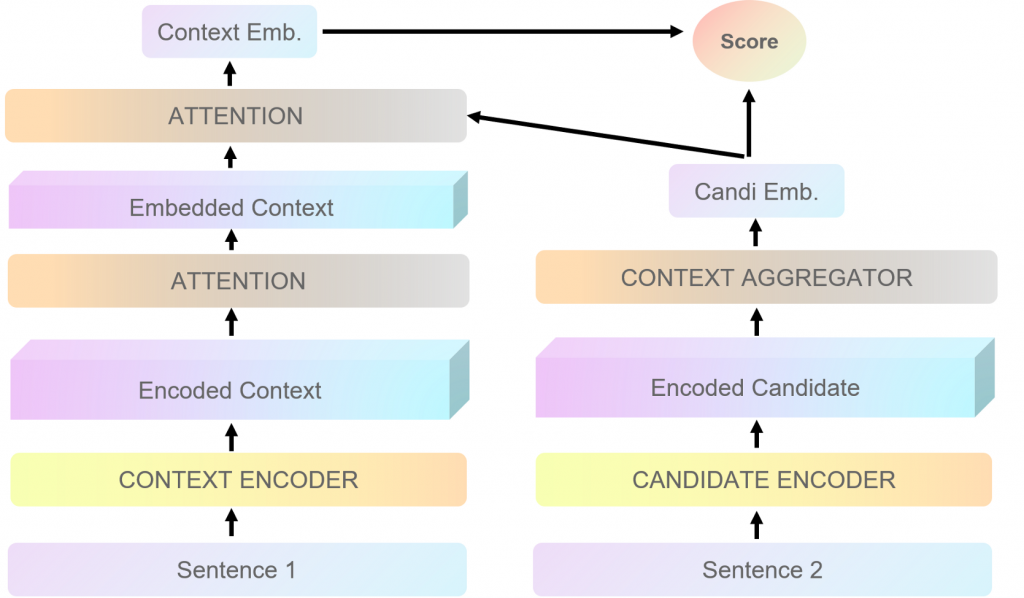

To address this problem, Poly-encoders were proposed [5]. Poly-encoders use two separate transformers, as Bi-encoders do, but apply attention between the two inputs only at the top layer. This yields better performance than Bi-encoders and large speed gains over Cross-encoders. However, Poly-encoders still have drawbacks: their score function is asymmetric, so they cannot be applied to tasks with symmetric similarity relations, and their representations cannot be indexed efficiently, which causes problems for retrieval tasks over large corpora.

In this article, I want to introduce an approach that uses both Cross-encoders and Bi-encoders effectively: data augmentation. The strategy is known as Augmented SBERT (AugSBERT) [6]. It uses a BERT Cross-encoder to label a larger set of input pairs, which augments the training data for the SBERT Bi-encoder. The Bi-encoder is then fine-tuned on this larger augmented training set, which yields a significant performance increase. The idea is very similar to Self-Supervised Learning by Relational Reasoning in Computer Vision, so, loosely speaking, we can think of it as Self-Supervised Learning for Natural Language Processing. The next section covers the details.

Technique highlight

The Augmented SBERT approach covers three major scenarios, each applicable to pairwise sentence regression or classification tasks.

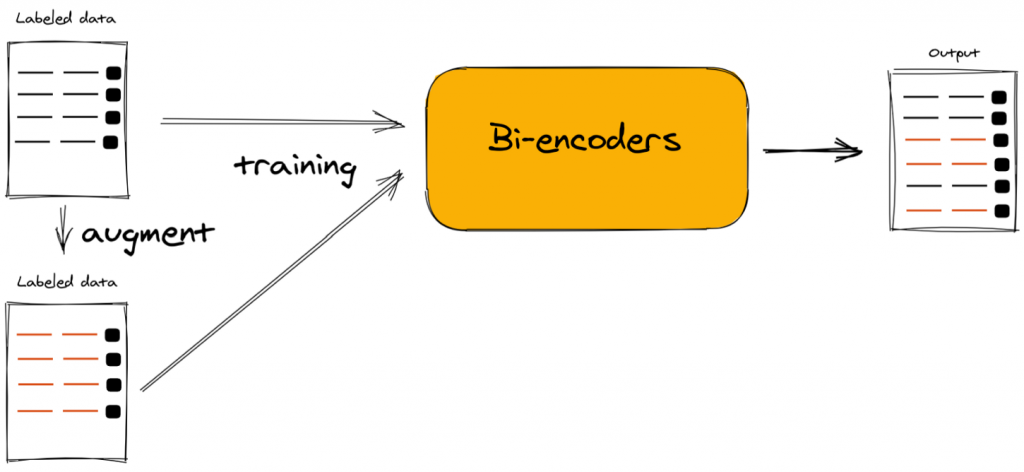

Scenario 1: Full annotated datasets (all labeled sentence-pairs)

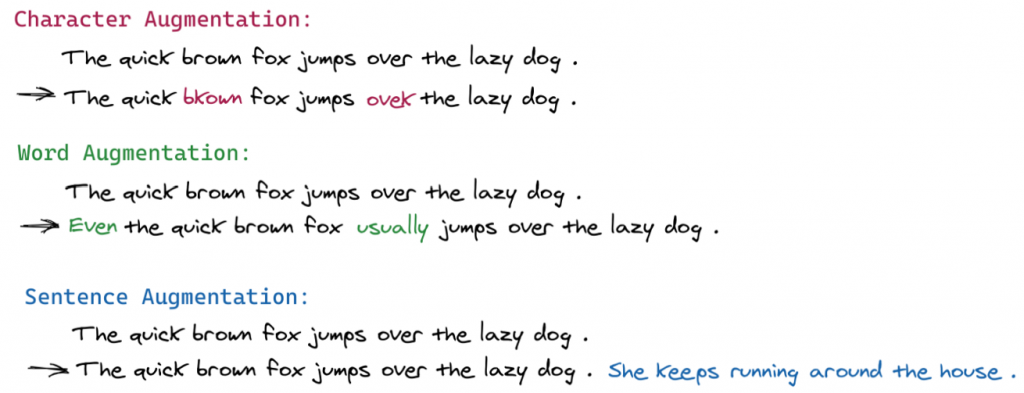

In this scenario, a straightforward data augmentation strategy is applied to prepare and extend the labeled dataset. Text augmentation commonly operates at three levels: character, word, and sentence.

The word level is the most suitable for sentence-pair tasks. Based on the resulting Bi-encoder performance, a few methods are suggested: inserting or substituting words using contextual word embeddings (BERT, DistilBERT, RoBERTa, or XLNet), or substituting words with synonyms (WordNet, PPDB). The augmented text data is then combined with the original data and used to train the Bi-encoder.

However, when only a few labeled examples are available, or in some special cases, simple word replacement or insertion strategies like these do not help data augmentation for sentence-pair tasks, and can even lead to worse performance than a model trained without augmentation.

In short, the straightforward data augmentation strategy involves three steps:

- Step 1: Prepare the fully labeled Semantic Text Similarity dataset (gold data)

- Step 2: Replace words in the sentence pairs with synonyms (silver data)

- Step 3: Train a Bi-encoder (SBERT) on the extended (gold + silver) training dataset
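The data side of these three steps can be sketched in plain Python. This is a minimal illustration under stated assumptions: `SYNONYMS` is a hypothetical toy table standing in for WordNet or PPDB, the helper names are made up for this example, and the augmented pair is assumed to keep the gold score of the pair it was derived from.

```python
import random

# Hypothetical toy synonym table; a real setup would draw on WordNet or PPDB.
SYNONYMS = {
    "quick": ["fast", "rapid"],
    "happy": ["glad", "cheerful"],
    "car": ["automobile"],
}


def replace_synonyms(sentence, rng, prob=1.0):
    """Swap each word found in SYNONYMS for a random synonym with probability `prob`."""
    out = []
    for word in sentence.split():
        choices = SYNONYMS.get(word.lower())
        if choices and rng.random() < prob:
            out.append(rng.choice(choices))
        else:
            out.append(word)
    return " ".join(out)


def make_silver(gold, rng):
    """Step 2: augment both sentences of each gold pair, keeping the gold score (assumption)."""
    return [
        (replace_synonyms(a, rng), replace_synonyms(b, rng), score)
        for a, b, score in gold
    ]


# Step 1: a tiny stand-in for the labeled (gold) dataset.
gold = [
    ("the quick car", "a fast automobile", 0.9),
    ("she is happy", "the car is red", 0.1),
]
rng = random.Random(0)
silver = make_silver(gold, rng)

# Step 3 would train the Bi-encoder on this combined list.
train = gold + silver
print(len(train))  # 4
```

Reusing the gold score is only reasonable when the replacement preserves meaning, which is one reason simple word-level augmentation can hurt on small datasets.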

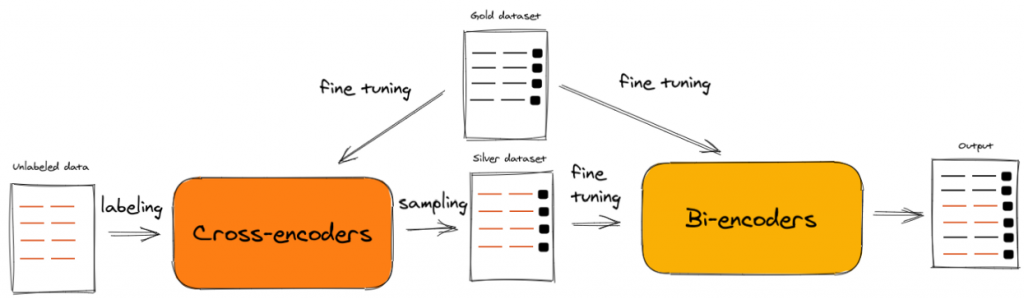

Scenario 2: Limited or small annotated datasets (few labeled sentence-pairs)

In this case, because the labeled (gold) dataset is small, a Cross-encoder fine-tuned on it is used to weakly label unlabeled data from the same domain. However, randomly combining two sentences usually produces a dissimilar (negative) pair, while positive pairs are extremely rare. This skews the label distribution of the silver dataset heavily towards negative pairs. Two sampling approaches are therefore suggested:

- BM25 Sampling (BM25): the algorithm is based on lexical overlap and is commonly used as a scoring function by many search engines [7]. Every unique sentence is indexed, and for each sentence the top k most similar sentences are retrieved from the index.

- Semantic Search Sampling (SS): a pre-trained Bi-encoder (SBERT) [4] is used to retrieve the top k most similar sentences in the collection. For large collections, an approximate nearest neighbor search library such as Faiss can be used to retrieve the k most similar sentences quickly. This approach overcomes the weakness of BM25 on synonymous sentences with little or no lexical overlap.
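To make BM25 sampling concrete, here is a minimal pure-Python sketch. It is not the implementation used in [6]: the function names are illustrative, the defaults `k1=1.5` and `b=0.75` are common but assumed values, and the IDF uses the non-negative variant with `+ 1` inside the logarithm. A production setup would more likely rely on a search engine index.

```python
import math
from collections import Counter


def bm25_top_k(query, corpus, k=2, k1=1.5, b=0.75):
    """Return indices of the k corpus sentences with the highest BM25 score for `query`."""
    docs = [s.lower().split() for s in corpus]
    n_docs = len(docs)
    avg_len = sum(len(d) for d in docs) / n_docs
    # Document frequency: number of sentences each term occurs in.
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for idx, doc in enumerate(docs):
        tf = Counter(doc)
        score = 0.0
        for term in set(query.lower().split()):
            if term not in tf:
                continue
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1.0)
            norm = tf[term] + k1 * (1 - b + b * len(doc) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append((score, idx))
    # Highest score first; ties fall back to the lower index.
    ranked = sorted(scores, key=lambda pair: (-pair[0], pair[1]))
    return [idx for _, idx in ranked[:k]]


def sample_pairs(sentences, k=1):
    """Pair every sentence with its top-k BM25 neighbours among the other sentences."""
    pairs = set()
    for i, sent in enumerate(sentences):
        others = [j for j in range(len(sentences)) if j != i]
        for t in bm25_top_k(sent, [sentences[j] for j in others], k=k):
            j = others[t]
            pairs.add((min(i, j), max(i, j)))
    return sorted(pairs)


sentences = [
    "how do i learn python quickly",
    "what is the fastest way to learn python",
    "best pizza places in rome",
    "where to eat pizza in rome",
]
print(sample_pairs(sentences, k=1))  # [(0, 1), (2, 3)]
```

The sampled pairs share vocabulary, so they are far more likely to be positive than random pairs. Semantic search sampling follows the same pattern with cosine similarity over sentence embeddings in place of the BM25 score.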

The sampled sentence pairs are then weakly labeled by the Cross-encoder and merged with the gold dataset, and the Bi-encoder is trained on this extended training dataset. The resulting model is called Augmented SBERT (AugSBERT). AugSBERT can improve the performance of existing Bi-encoders and narrow the gap to Cross-encoders.

In summary, AugSBERT for a limited dataset involves three steps:

- Step 1: Fine-tune a Cross-encoder (BERT) on the small gold dataset

- Step 2.1: Create pairs by recombination and reduce the pairs via BM25 or semantic search

- Step 2.2: Weakly label the new pairs with the Cross-encoder (silver dataset)

- Step 3: Train a Bi-encoder (SBERT) on the extended (gold + silver) training dataset
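The data flow of Steps 2.1 to 3 can be sketched without any model. In the sketch below everything model-related is a stand-in: the 2-D `embeddings` are hypothetical values playing the role of SBERT vectors, and `jaccard` is a placeholder for the fine-tuned Cross-encoder's score, chosen only so the example runs on its own. The function names are illustrative.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0


def semantic_search_pairs(embeddings, k=1):
    """Step 2.1: pair each sentence with its k nearest neighbours by cosine similarity."""
    pairs = set()
    for i, emb in enumerate(embeddings):
        ranked = sorted(
            (j for j in range(len(embeddings)) if j != i),
            key=lambda j: -cosine(emb, embeddings[j]),
        )
        for j in ranked[:k]:
            pairs.add((min(i, j), max(i, j)))
    return sorted(pairs)


def build_training_set(gold, sentences, embeddings, score_pair, k=1):
    """Steps 2.1 to 3 (data side): sample pairs, weakly label them, merge with gold."""
    gold_keys = {(a, b) for a, b, _ in gold} | {(b, a) for a, b, _ in gold}
    silver = []
    for i, j in semantic_search_pairs(embeddings, k):
        a, b = sentences[i], sentences[j]
        if (a, b) not in gold_keys:  # skip pairs that already carry a gold label
            silver.append((a, b, score_pair(a, b)))  # Step 2.2: weak label
    return gold + silver


def jaccard(a, b):
    """Placeholder scorer standing in for the Cross-encoder (assumption)."""
    sa, sb = set(a.split()), set(b.split())
    return len(sa & sb) / len(sa | sb)


sentences = [
    "a cat sits on the mat",
    "a cat lies on the rug",
    "stocks fell sharply today",
    "markets dropped sharply today",
]
embeddings = [[1.0, 0.1], [0.9, 0.2], [0.1, 1.0], [0.2, 0.9]]  # hypothetical vectors
gold = [("a cat sits on the mat", "stocks fell sharply today", 0.0)]

train = build_training_set(gold, sentences, embeddings, jaccard)
print(len(train))  # 3
```

In a real run, `score_pair` would call the Cross-encoder fine-tuned in Step 1, and `train` would be passed to the Bi-encoder training loop in Step 3.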

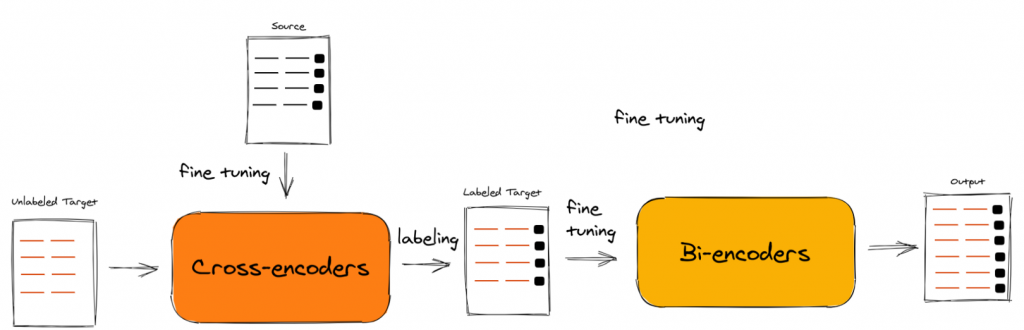

Scenario 3: No annotated datasets (Only unlabeled sentence-pairs)

This scenario arises when we want SBERT to perform well on data from a different domain that has no annotations. SBERT generally fails to map sentences with unseen terminology to a sensible vector space. Hence, a domain adaptation variant of the data augmentation strategy was proposed:

- Step 1: Fine-tune a Cross-encoder (BERT) on a source dataset for which we have annotations

- Step 2: Use this Cross-encoder (BERT) to label the target dataset, i.e. the unlabeled sentence pairs

- Step 3: Finally, train a Bi-encoder (SBERT) on the labeled target dataset

Generally, AugSBERT helps most when the source domain is rather generic and the target domain is rather specific. Conversely, when going from a specific source domain to a generic target domain, only a slight performance increase is observed.

Experimental evaluation

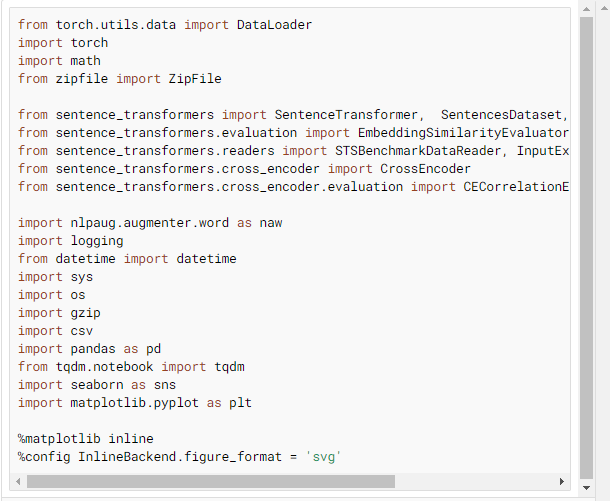

In this section, I will walk through a demo of how to apply AugSBERT in the different scenarios. First, we need to import some packages.

Scenario 1: Full annotated datasets (all labeled sentence-pairs)

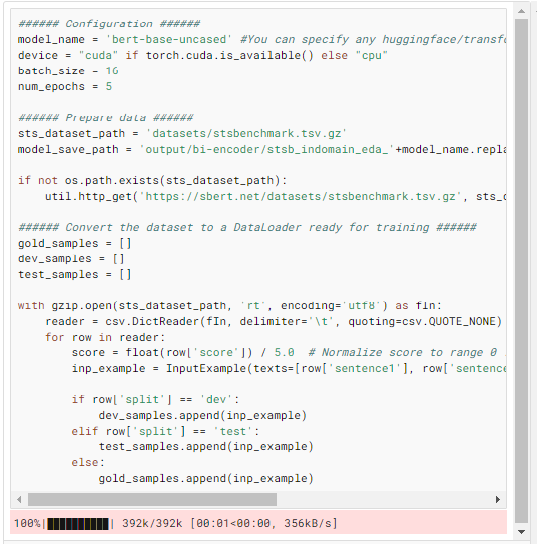

The main purpose of this scenario is to extend the labeled dataset with straightforward data augmentation strategies. We therefore prepare the train, dev, and test splits of the Semantic Text Similarity dataset (link) and define the batch size, number of epochs, and model name (you can specify any Huggingface/transformers pre-trained model).

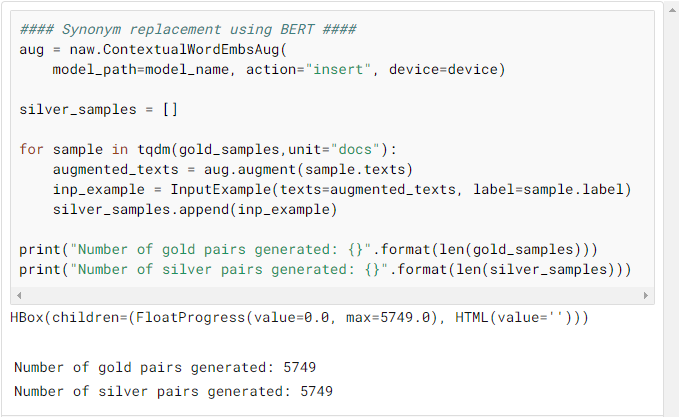

Then, we insert words with our BERT model (you can apply any other augmentation technique mentioned in the Technique highlight section) to create a silver dataset.

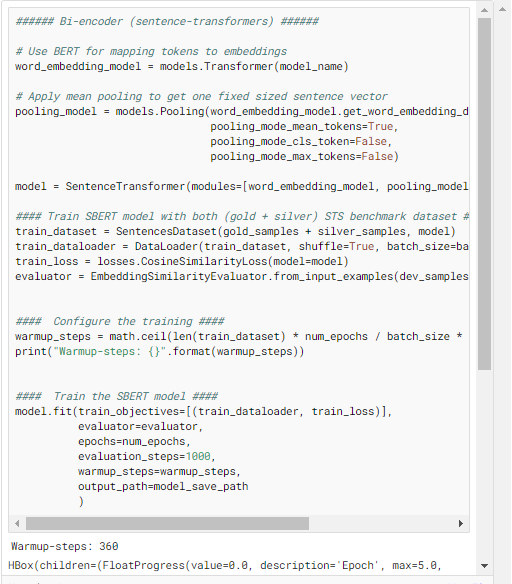

Next, we define our Bi-encoder with mean pooling and train it on both the gold and silver STS benchmark datasets.
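Mean pooling turns the per-token vectors produced by the transformer into one fixed-size sentence vector by averaging them, ignoring padding. Below is a minimal sketch of that operation on plain lists; `mean_pool` is an illustrative name and the toy numbers are made up, so it shows the idea rather than any library's pooling layer.

```python
def mean_pool(token_embeddings, attention_mask):
    """Average the token vectors whose mask value is 1; padding tokens are ignored."""
    dim = len(token_embeddings[0])
    total = [0.0] * dim
    count = 0
    for vec, keep in zip(token_embeddings, attention_mask):
        if keep:
            count += 1
            for d in range(dim):
                total[d] += vec[d]
    if count == 0:
        return total  # no real tokens: return the zero vector
    return [t / count for t in total]


# Two real tokens followed by one padding token (mask 0).
tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]
mask = [1, 1, 0]
sentence_vector = mean_pool(tokens, mask)
print(sentence_vector)  # [2.0, 3.0]
```

The padding vector does not affect the result, which is why the attention mask matters when sentences in a batch have different lengths.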

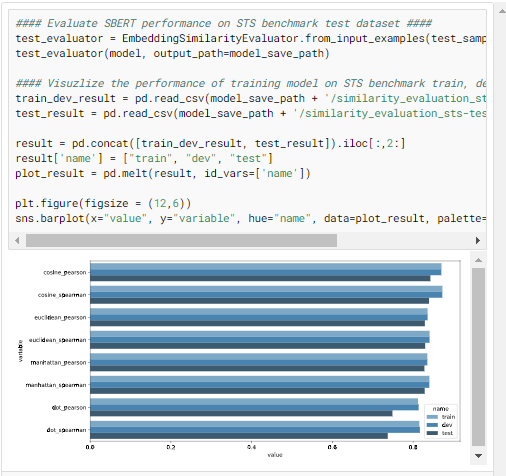

Finally, we evaluate our model on the STS benchmark test set.
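A common way to score an STS model is the Spearman rank correlation between the predicted similarities and the gold scores. Whether the original demo code used exactly this metric is an assumption on my part, so treat the following pure-Python sketch as an illustration of the idea, with made-up helper names and toy numbers.

```python
import math


def average_ranks(values):
    """1-based ranks, with tied values sharing the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        shared = (pos + end) / 2 + 1  # mean of positions pos+1 .. end+1
        for i in order[pos:end + 1]:
            ranks[i] = shared
        pos = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equally long, non-constant sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman correlation: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


gold_scores = [0.0, 0.2, 0.6, 1.0]
predicted = [0.1, 0.3, 0.5, 0.9]  # different values, same ordering
rho = spearman(gold_scores, predicted)
```

Because only the ordering matters, `rho` is at its maximum here even though the predicted values differ from the gold scores.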

Scenario 2: Limited or small annotated datasets (few labeled sentence-pairs)

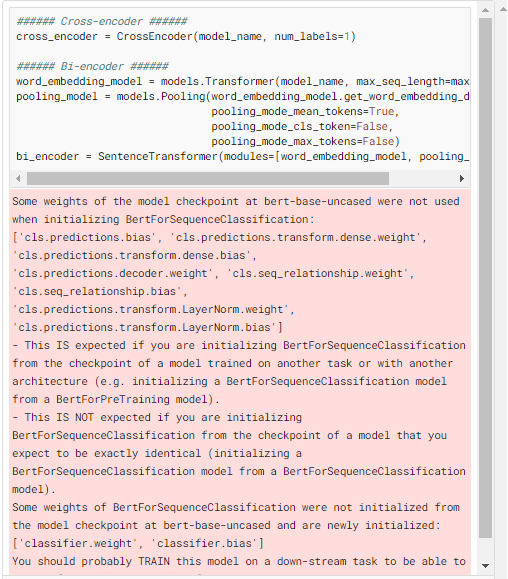

In this scenario, we use a Cross-encoder trained on the limited labeled dataset (gold dataset) to soft-label the in-domain unlabeled dataset (silver dataset), and then train the Bi-encoder on both datasets (silver + gold). In this simulation, I again use the STS benchmark dataset and create new sentence pairs with a pre-trained SBERT model. First, we define the Cross-encoder and the Bi-encoder.

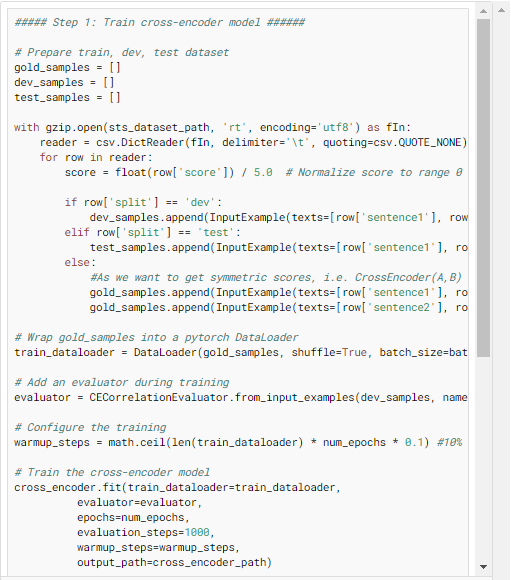

Step 1: we prepare the train, dev, and test splits as before and fine-tune our Cross-encoder.

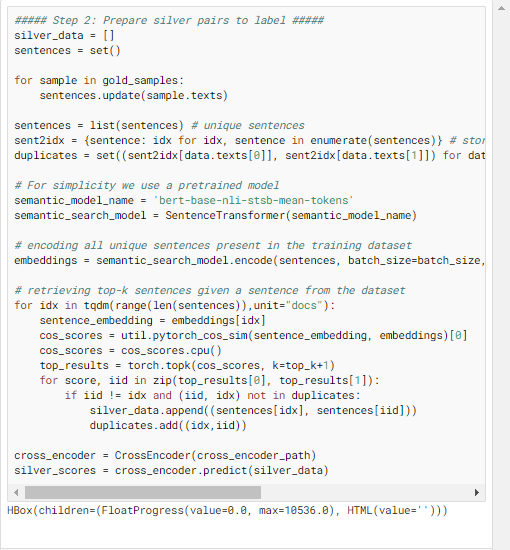

Step 2: we use our fine-tuned Cross-encoder to label the unlabeled dataset.

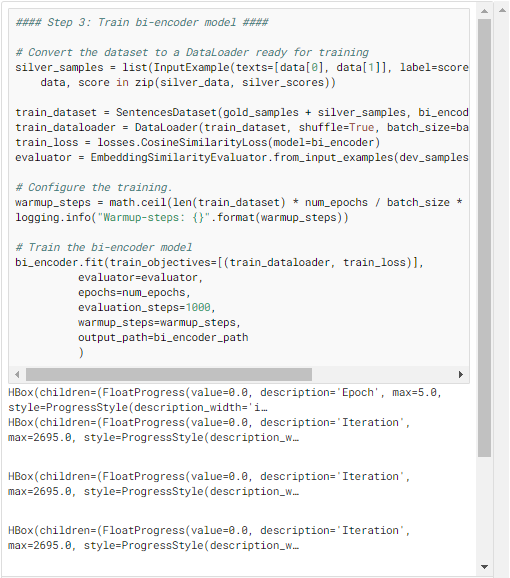

Step 3: we train our Bi-encoder on both the gold and silver datasets.

Finally, we evaluate our model on the STS benchmark test set.

Scenario 3: No annotated datasets (Only unlabeled sentence-pairs)

In this scenario, the steps are very similar to Scenario 2, but they span two domains. Taking advantage of the Cross-encoder's capability, we use a generic source dataset (STS benchmark) and transfer its knowledge to a specific target dataset (Quora Question Pairs).

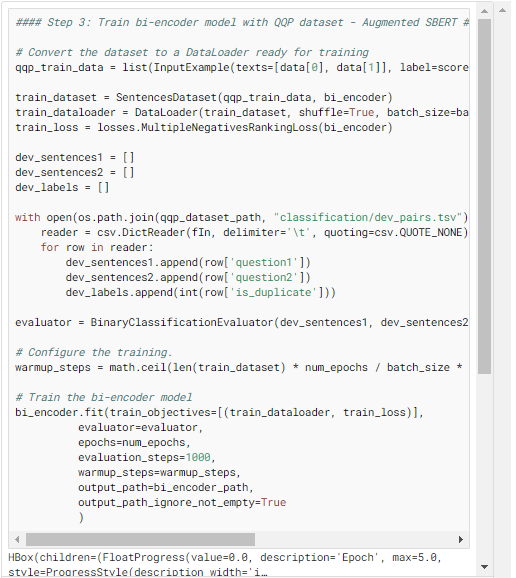

Then we train our Cross-encoder.

Next, we label the Quora Question Pairs dataset (silver dataset). Because this task is classification, we have to convert the predicted scores to binary labels.
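The conversion is a simple thresholding step. In the sketch below, the cut-off of 0.5 is an assumed value, `to_binary_labels` is an illustrative name, and the scores are made up; a real threshold is better tuned on a small labeled dev set if one is available.

```python
def to_binary_labels(scores, threshold=0.5):
    """Map continuous similarity scores to 1 (duplicate) or 0 (not duplicate)."""
    return [1 if s >= threshold else 0 for s in scores]


silver_scores = [0.91, 0.12, 0.50, 0.49]  # toy Cross-encoder outputs
labels = to_binary_labels(silver_scores)
print(labels)  # [1, 0, 1, 0]
```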

Then we train our Bi-encoder.

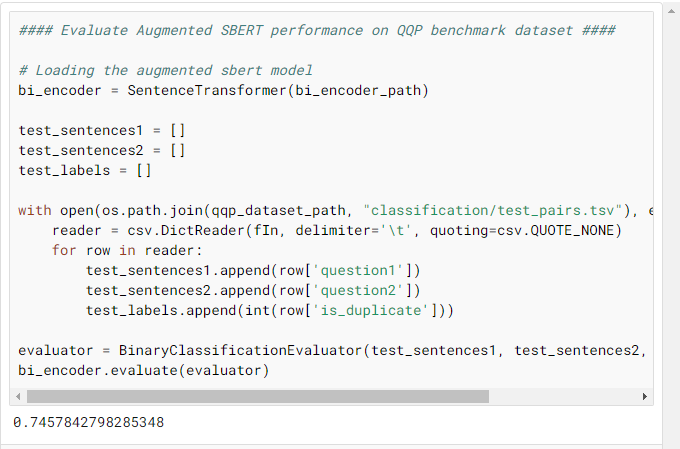

Finally, we evaluate on the Quora Question Pairs test set.

Final Thoughts

AugSBERT is a simple and effective data augmentation method for improving Bi-encoders on pairwise sentence scoring tasks. The idea is to label new sentence pairs with a Cross-encoder and add them to the training set. Selecting the right sentence pairs for soft-labeling is crucial for improving performance. The AugSBERT approach can also be used for domain adaptation, by soft-labeling data in the target domain.

References

[1] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding.

[2] Thomas Wolf, Victor Sanh, Julien Chaumond, and Clement Delangue. TransferTransfo: A Transfer Learning Approach for Neural Network Based Conversational Agents.

[3] Pierre-Emmanuel Mazaré, Samuel Humeau, Martin Raison, and Antoine Bordes. Training Millions of Personalized Dialogue Agents.

[4] Nils Reimers and Iryna Gurevych. Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks.

[5] Samuel Humeau, Kurt Shuster, Marie-Anne Lachaux, and Jason Weston. Poly-encoders: Architectures and Pre-training Strategies for Fast and Accurate Multi-sentence Scoring.

[6] Nandan Thakur, Nils Reimers, Johannes Daxenberger, and Iryna Gurevych. Augmented SBERT: Data Augmentation Method for Improving Bi-Encoders for Pairwise Sentence Scoring Tasks.

[7] Giambattista Amati. BM25, Springer US, Boston, MA.