
This post is about people’s bias when judging AI’s performance and how realistic our expectations of AI systems are.

Human Intelligence vs. Artificial Intelligence

I love measuring and explaining things in numbers. The quickest and most common measurement I do is with scores and sub-scores. If I need to compare two things, I list the variables and give them weights. Then I give each variable a score, multiply each score by its weight, and sum up the results. This gives me a single number. I find it easy to use for many things: picking which TV to buy, deciding which task to work on next, or testing whether one method is better than another.
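The scoring routine above can be sketched in a few lines of Python. The criteria, weights, and scores below are made up for illustration, and `weighted_score` is a hypothetical helper name, not something from a library.

```python
def weighted_score(scores, weights):
    """Multiply each variable's score by its weight and sum the results."""
    return sum(scores[name] * weights[name] for name in weights)


# Hypothetical weights: how much each variable matters (higher = more important).
weights = {"picture_quality": 5, "price": 3, "sound": 2}

# Hypothetical scores on a 1-10 scale for two TVs.
tv_a = {"picture_quality": 8, "price": 6, "sound": 7}
tv_b = {"picture_quality": 7, "price": 8, "sound": 5}

print("TV A:", weighted_score(tv_a, weights))  # 8*5 + 6*3 + 7*2 = 72
print("TV B:", weighted_score(tv_b, weights))  # 7*5 + 8*3 + 5*2 = 69
```

With these made-up numbers, TV A wins by a small margin. Changing the weights can flip the result, which is exactly why the weights deserve as much thought as the scores.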

While some things are easy to measure, intelligence is not one of them. Intelligence is a very abstract and complex thing to measure. Even the definition of intelligence is complex. What intelligence is and how to measure it are not the questions I am trying to answer here. What I am curious about in this post is how we, as people, perceive artificial intelligence and what we expect from it. It seems to me that when we judge AI’s ability, we are harsh. We want AI to be perfect. We do not extend to AI the tolerance we show for human mistakes. We want AI to be “like a human”. Maybe it is because of the recent “works like brain” advertisements (google “acts like brain”).

Whatever the reason is, it is a common pattern. We expect artificial intelligence to be comparable to human intelligence.

Wizard with an Artificial Intelligence in Electronic Oz

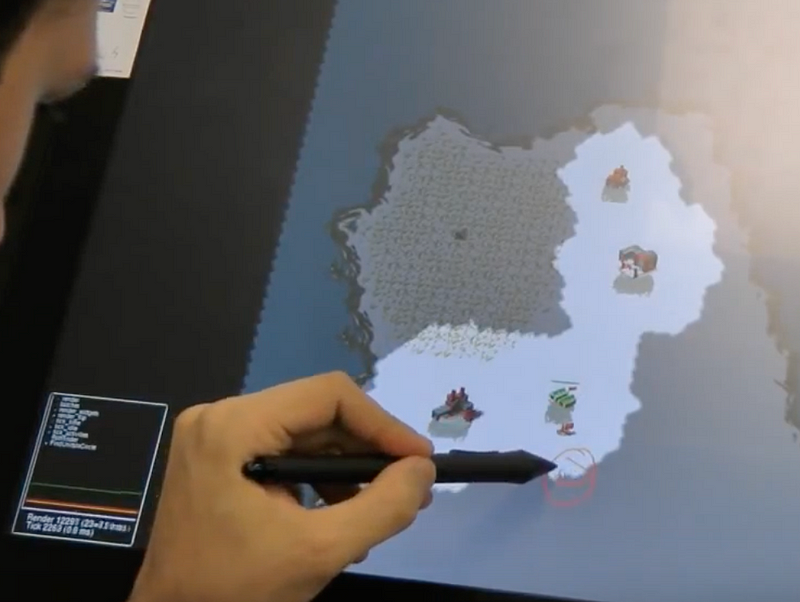

In 2012, I conducted a series of data collection experiments that eventually got me writing this post. I was doing research on sketch recognition (i.e. classifying handwritten symbols and drawings) using machine learning. If you are curious about sketch recognizers or would like to have a more concrete idea, here is a great one you can try: https://quickdraw.withgoogle.com/ . One of the things I wanted to achieve was to convert a real-time strategy video game’s interaction model from mouse/keyboard to sketching. With a sketching interface, people would play the game by sketching instead of using mouse clicks and keystrokes. Imagine you want to construct a building: instead of picking it from a menu and placing it onto the map with point-and-click, you would sketch a simple house on the part of the map where you want to construct it.

A Snapshot from the Experiment Recordings Where a User Plays the Strategy Game via Sketching

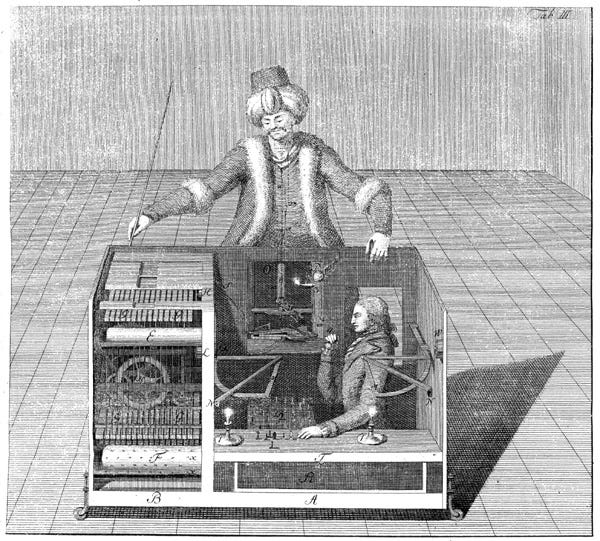

The plan was to develop a sketch recognizer with machine learning. The first step was to collect many hand-drawn sketches from multiple people so I could use them to train a model. Instead of asking people to copy sketches they saw on the screen, I wanted to put them into a realistic environment: one in which they would play the game by sketching, as if the AI were already in place. I thought that in this setting people would sketch more “realistically” than they would in a relaxed “copy the picture” environment with no time constraints and no stress. In order to collect data this way, I created a “Wizard of Oz” environment.

The idea is simple. You prepare a setting with two rooms or spaces. In one room you put the computer that the participant will use. In the second room you put another computer that you will use. The two computers are connected so that you can see what the participant is up to and take actions accordingly from your own computer. In my case it was for sketch recognition. So if a participant drew a house, I could see it on my computer and issue the command for the house drawing. The goal was to stand in for an AI system that did not exist yet, so that it would look like there was an actual AI when it was in fact a human in a different room.

The “Mechanical Turk” was a chess-playing machine from the late 18th century that seemed autonomous but was in reality operated by a human. In that sense, it was a Wizard of Oz setting. https://en.wikipedia.org/wiki/The_Turk .

After the experiment, I asked the participants to fill out a questionnaire, followed by some informal small talk, as some people were interested in this way of playing a game. This is the part where I got confused. Many people told me, and wrote down in the questionnaire, the same thing:

“The AI that was recognizing my sketches did mistakes”

You can see why I was confused. I was the “AI” that was recognizing the sketches, and they were telling me that the AI was underperforming! How was this possible? Had I made mistakes recognizing the symbols? It might be the case, but the symbols were simple enough that I did not think I had made a lot of mistakes.

This anecdotal evidence never made it into the research results, but it puzzled me for a long time. If I, as a human, failed to meet people’s expectations as a recognizer, how can we come up with an AI that satisfies users? Would it have been different if I had disclosed that I was the AI? Were they prejudiced to think that an AI would always make mistakes? What do we expect from an AI?

What is Your Expectation from the Machine?

90% accuracy is a milestone for most recognizers. It feels like a great number: “Your model got 90% accuracy”. In most cases, I would expect to declare the problem solved and present it to stakeholders. I would also expect them to be happy with the result. Imagine you scored 90% on an exam.

However, there is one thing. It may not actually be that good in real-life use. Imagine that you are sketching a circle and 9 times out of 10, the AI recognizes it correctly and takes action accordingly. But that 1 time out of 10, it recognizes the circle as something else (e.g. a triangle) and still takes an action. And there you are, trying to stop the action that the AI took and redo it. If you use a voice assistant, you have probably experienced this already: “NO!, STOP!, DO NOT PLAY THAT SONG!, THAT IS NOT EVEN CLOSE TO WHAT I ASKED! ABORT! ABORT!”. Do you think that speech recognition performance is not good enough? Microsoft announced a 5.1% error rate (94.9% accurate) in its blog in 2017 (https://www.microsoft.com/en-us/research/blog/microsoft-researchers-achieve-new-conversational-speech-recognition-milestone/). The human error rate measured with the same metric was also 5.1%. So even if you think that the performance of your speech assistant is bad, it is actually very close to a human’s.
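To make the “1 time out of 10” point concrete, here is a rough sketch of how often a 90% accurate recognizer goes wrong over a session. It assumes every recognition is independent and equally likely to fail, which is a simplification and not something I measured; the function names are hypothetical.

```python
def expected_errors(accuracy: float, n_actions: int) -> float:
    """Expected number of misrecognized actions out of n_actions."""
    return n_actions * (1.0 - accuracy)


def prob_at_least_one_error(accuracy: float, n_actions: int) -> float:
    """Probability that at least one of n_actions is misrecognized,
    assuming each recognition fails independently."""
    return 1.0 - accuracy ** n_actions


# How a "90% accurate" recognizer feels over sessions of different length.
for n in (10, 50, 100):
    errors = expected_errors(0.90, n)
    p_any = prob_at_least_one_error(0.90, n)
    print(f"{n} sketches: ~{errors:.0f} wrong on average, "
          f"{p_any:.1%} chance of at least one mistake")
```

Under these assumptions, even a short session of 10 sketches has roughly a 65% chance of containing at least one wrong action that the user has to notice and undo.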

When you start an AI project, you need to set expectations correctly with the stakeholders. If you manage expectations, both parties will be happier. For instance, if your stakeholder has a perfect image recognizer in mind, you have already failed at the start. Even if you develop a great recognizer, it will not be as perfect as she imagined. So manage expectations, and think about accuracy in terms of the real-life setting. If it is not acceptable to shout “ABORT” at your voice assistant every once in a while, maybe you should think twice before you buy or start developing one.