Don’t get me wrong, I love machine learning and AI. But I don’t trust them blindly and neither should you, because the way you build effective and reliable ML/AIsolutions is to force each solution to earn your trust.

(Much of the advice in this article also applies to data insights and models made without ML/AI, especially the very last paragraph.)

Blind trust is a terrible thing.

Before you start thinking that this has anything to do with robots or sci-fi, stop! ML/AIsystems aren’t humanlike, they’re

Setting the scene for wishful thinking

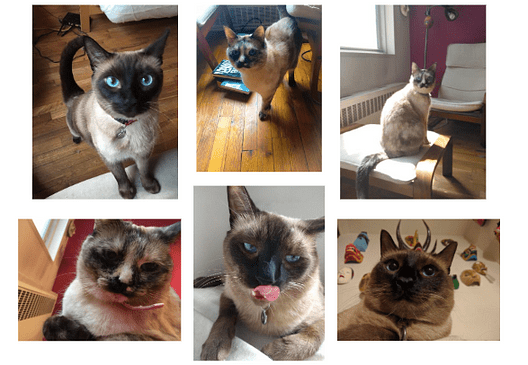

Welcome back to our cats, whom you might have already met when we built a classifier to split my original dataset of six photos into two groups.

Would you look at that? My amazing magical machine learning system succeeded in returning the exact results I was hoping for!

Bingo! These were two individual cats and the model returns their labels perfectly, making me misty-eyed with parental pride at my clever classifybot. This means I’ve just built a Tesla and Huxley Classifier!!! …Right?

Our minds play tricks on us

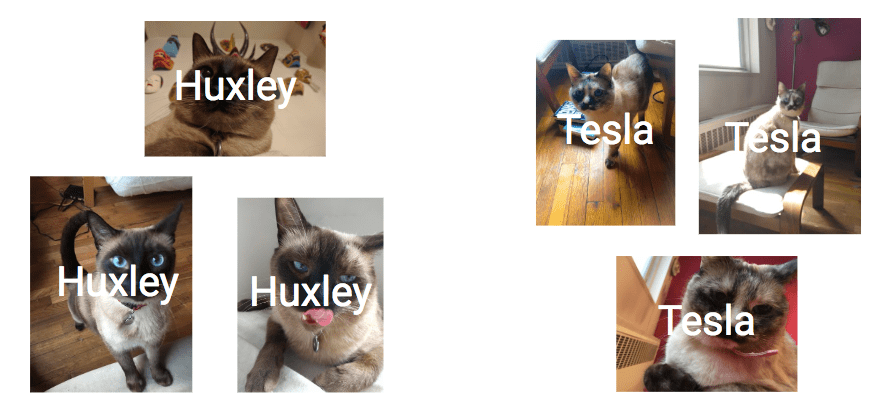

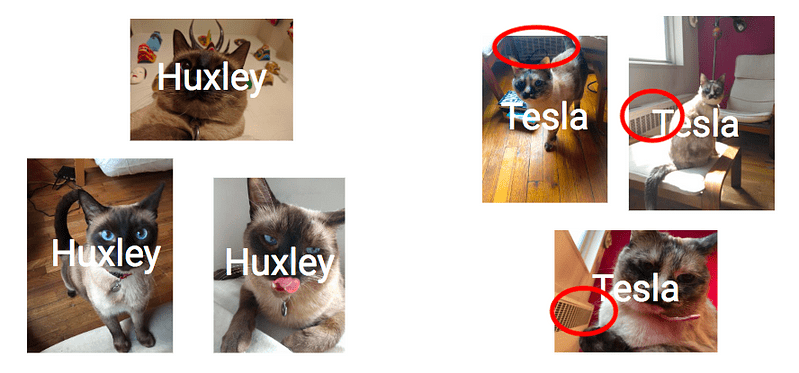

Not so fast! That’s our human wishful thinking playing tricks on us again. I got so emotionally caught up in my kitties that I might not have noticed that all the Tesla photos have a radiator in the background and all the Huxley photos don’t. Did you notice it? Look again.

Cat detector or radiator detector?

My solution— unbeknownst to me — is actually a radiator detector, not a Hux/Tes detector. Testing with new examples has a reputation for being helpful, so let’s see if we catch the problem that way…

Even if I tested it out on new data by showing it these new photos, there wouldn’t be so much as a peep of an alert. Oh dear. Is that a problem?

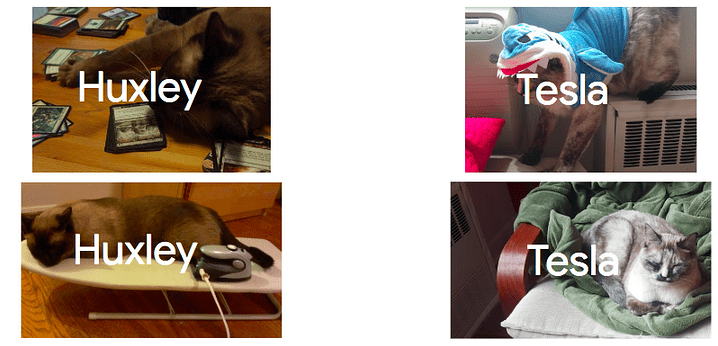

Not if Tes is always photographed with a radiator and Hux always isn’t. If that’s the case, who cares how it works? It always works. It’ll give the right cat every time. There’s no problem.

That was a big if. What if the cats move to another apartment (true story)? What if you inherit my classifier without my notes and use it on your photos?

In those cases, the label returned will be “Huxley” and any mission-critical systems relying on my Hux/Tes Detector will crash and burn horribly.

Whose fault is it?

While the fires rage, let’s do a quick autopsy:

- As is common with AI, the recipe converting pixels into labels is too complicated to wrap my head around.

- All I observe is the inputs (pixels) and the outputs (Hux / Tes label).

- Because I’m human, I don’t notice things that are right under my nose (radiator and Tes always coincide).

- The story I tell myself about how the inputs relate to the outputs is not only oversimplified, it’s also skewed away from the radiator explanation by wishful thinking.

- That’s okay. I don’t have to understand how it works as long as I can be sure it does work.

- The way to check whether it works is to evaluate how it does on a battery of relevant examples it hasn’t seen before.

So far, so good. There’s actually no problem yet. You trust plenty of things without knowing how they work, for example the paracetamol many of us take for our headaches. It does the job, yet science can’t tell you how. The important bit is that you can verify that paracetamol does work(unlike that whole thing with strapping a dead mole to your head).

Think about complex AI systems the same way as you think about headache cures. Make sure they work and you’ll be fine. Okay, where’s the unraveling? Drumroll, please!

Unfortunately, I check performance on examples that aren’t like the examples I want my system to operate in.

There it is. This last one is where it actually went horribly wrong. The rest are okay as long as we test the system appropriately with appropriate examples. So, the answer is: it’s my human fault.

If you test the system for one job and then apply it to another… what were you expecting?

If I teach and test a student using a set of examples that don’t cover the task I want that student learn, then why would I be surprised if there’s a mess later? If all my examples are from the world where Tes and the radiator always go together, then I can only expect my classifier to work in that world. When I move it to a different world, I’m putting it where it doesn’t belong. The application had better be low-stakes because there’s no excuse of “Oops, but I didn’t know it wouldn’t work outside the setting it was made for.” You know. And if you didn’t know before, you do now.

This is why it’s so important to have your goals and users in mind from the beginning. Specify the specs and setting before you begin. Please put a responsible adult adult in charge or stick to toy applications.

Where bigger things are at stake, don’t just throw cool buzzwords at irrelevant datasets.Without skilled and responsible leadership, well, I hope your application never has anyone’s health, safety, dignity, or future hanging on it…

Common sense, not magic

I’ve been using the word “examples” instead of “data” — they’re the same thing — to remind you that this isn’t magic. The point of ML/AI is that you’re expressing your wishes using examples instead of instructions. For it to work, the examples have to be relevant. The more complicated the task, the more examples you’ll need. You communicate using examples every day, so you knew this stuff already. Maybe you were wondering whether the math says something different; it doesn’t. Rest assured that common sense was your best algorithm all along.

If you want to teach with examples, the examples have to be good. If you want to trust your student, the test has to be good.

Blind trust is a terrible thing

You don’t know anything about the safety of your system outside the conditions you checked it in, so keep these reminders handy:

If you use a tool where it hasn’t been verified safe, any mess you make is your fault. AI is a tool like any other.